#288: Performance benchmarks for Python 3.11 are amazing

About the show

Sponsored by us! Support our work through:

Brian #1: Polars: Lightning-fast DataFrame library for Rust and Python

- Suggested by several listeners

- “Polars is a blazingly fast DataFrames library implemented in Rust using Apache Arrow Columnar Format as memory model.

- Lazy | eager execution

- Multi-threaded

- SIMD (Single Instruction/Multiple Data)

- Query optimization

- Powerful expression API

- Rust | Python | ...”

- Python API syntax is set up to allow parallel execution while sidestepping GIL issues, for both lazy and eager use cases. From the docs: Do not kill parallelization

The syntax is very functional and pipeline-esque:

    import polars as pl

    q = (
        pl.scan_csv("iris.csv")
        .filter(pl.col("sepal_length") > 5)
        .groupby("species")
        .agg(pl.all().sum())
    )

    df = q.collect()

- The Polars User Guide is excellent and looks like it’s entirely written with Python examples.

- Includes a 30 min intro video from PyData Global 2021
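To make the lazy versus eager distinction a bit more concrete, here is a toy sketch in plain Python using only the standard library. It is not how Polars is implemented, and the `LazyQuery` class and its methods are hypothetical names made up for illustration. The only idea it tries to show is that building a query just records steps, and nothing runs until `collect()` is called.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class LazyQuery:
    """Toy lazy pipeline (hypothetical): records steps, runs them on collect()."""

    rows: Iterable[dict]
    steps: list[Callable[[Iterable[dict]], Iterable[dict]]] = field(default_factory=list)

    def filter(self, predicate: Callable[[dict], bool]) -> LazyQuery:
        # Return a new query with one more recorded step; no data is touched yet.
        step = lambda rs: (r for r in rs if predicate(r))
        return LazyQuery(self.rows, self.steps + [step])

    def select(self, *columns: str) -> LazyQuery:
        # Also only recorded, not executed.
        step = lambda rs: ({c: r[c] for c in columns} for r in rs)
        return LazyQuery(self.rows, self.steps + [step])

    def collect(self) -> list[dict]:
        # Only now do the recorded steps execute, in the order they were added.
        result = self.rows
        for step in self.steps:
            result = step(result)
        return list(result)


# Made-up sample rows standing in for a small slice of an iris-style table.
iris = [
    {"species": "setosa", "sepal_length": 5.1},
    {"species": "setosa", "sepal_length": 4.9},
    {"species": "virginica", "sepal_length": 6.3},
]

q = LazyQuery(iris).filter(lambda r: r["sepal_length"] > 5).select("species")
result = q.collect()
```

A real engine can inspect the recorded steps before running them, which is where query optimization fits in; this sketch skips that part entirely.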

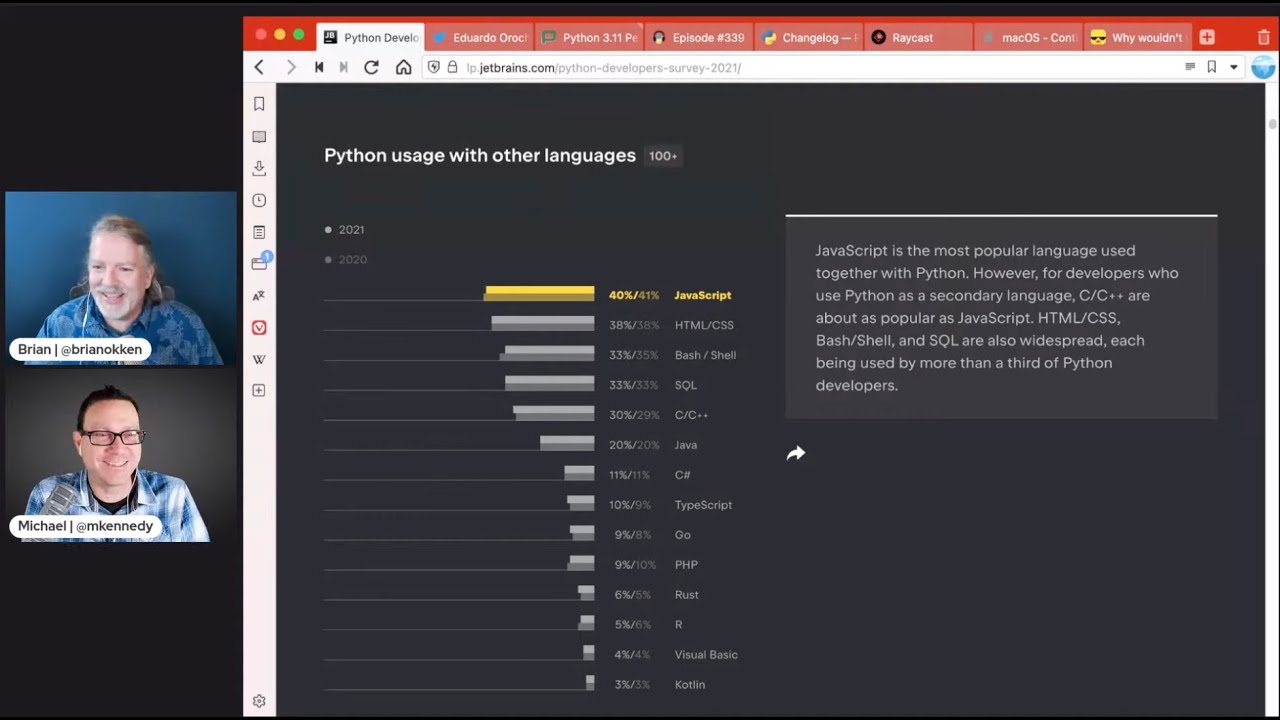

Michael #2: PSF Survey is out

- Have a look, their page summarizes it better than my bullet points will.

Brian #3: Gin Config: a lightweight configuration framework for Python

- Found through Vincent D. Warmerdam’s excellent intro videos on gin on calmcode.io

- Quickly make parts of your code configurable through a configuration file with the @gin.configurable decorator. It’s an interesting take on config files. (Example from Vincent)

    # simulate.py
    import gin

    @gin.configurable
    def simulate(n_samples):
        ...

    # config.py
    simulate.n_samples = 100

You can specify:

- required settings: `def simulate(n_samples=gin.REQUIRED)`

- blacklisted settings: `@gin.configurable(blacklist=["n_samples"])`

- external configurations (specify values to functions your code is calling)

- references to other functions: `dnn.activation_fn = @tf.nn.tanh`

- Documentation suggests that it is especially useful for machine learning.

- From motivation section:

- “Modern ML experiments require configuring a dizzying array of hyperparameters, ranging from small details like learning rates or thresholds all the way to parameters affecting the model architecture.

- Many choices for representing such configuration (proto buffers, tf.HParams, ParameterContainer, ConfigDict) require that model and experiment parameters are duplicated: at least once in the code where they are defined and used, and again when declaring the set of configurable hyperparameters.

- Gin provides a lightweight dependency injection driven approach to configuring experiments in a reliable and transparent fashion. It allows functions or classes to be annotated as @gin.configurable, which enables setting their parameters via a simple config file using a clear and powerful syntax. This approach reduces configuration maintenance, while making experiment configuration transparent and easily repeatable.”
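For a rough feel of the idea behind a configurable decorator, here is a toy sketch using only the standard library. It is not Gin's implementation or API: the `configurable` decorator and the `CONFIG` dictionary are hypothetical stand-ins, and the dictionary plays the role of a parsed config file containing `simulate.n_samples = 100`.

```python
import functools
import inspect

# Hypothetical stand-in for a parsed config file ("simulate.n_samples = 100").
CONFIG = {"simulate.n_samples": 100}


def configurable(func):
    """Toy decorator (not Gin's): fill in unspecified arguments from CONFIG."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        # Work out which parameters the caller already supplied.
        bound = signature.bind_partial(*args, **kwargs)
        for name in signature.parameters:
            key = f"{func.__name__}.{name}"
            # In this sketch, explicit call-site arguments win over configured values.
            if name not in bound.arguments and key in CONFIG:
                kwargs[name] = CONFIG[key]
        return func(*args, **kwargs)

    return wrapper


@configurable
def simulate(n_samples, seed=0):
    return {"n_samples": n_samples, "seed": seed}


from_config = simulate()           # n_samples comes from CONFIG
explicit = simulate(n_samples=5)   # the caller's value is used instead
```

The point of the sketch is that the function signature stays the single place where parameters are declared; the config only supplies values by name.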

Michael #4: Performance benchmarks for Python 3.11 are amazing

- via Eduardo Orochena

- Performance may be the biggest feature of all

- Python 3.11 has

- task groups in asyncio

- fine-grained error locations in tracebacks

- the Self type for annotating methods that return an instance of their class

- The "Faster CPython Project" to speed-up the reference implementation.

- See my interview with Guido and Mark: talkpython.fm/339

- Python 3.11 is 10-60% faster than Python 3.10 according to the official figures

- And a 1.22x speed-up with their standard benchmark suite.

- Not arriving as a stable release until October
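If you want to get a feel for figures like "1.22x" on your own machine, a small sketch along these lines may help. It is not the benchmark suite used for the official numbers or the linked article; the `fib` workload and the helper names are made up for illustration. Run the same file under two interpreters and compare the printed times.

```python
import sys
import timeit


def fib(n: int) -> int:
    """Deliberately naive recursion: heavy on plain function calls."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


def best_time(repeats: int = 5) -> float:
    """Best-of-N wall time in seconds for one small workload."""
    return min(timeit.repeat(lambda: fib(18), number=10, repeat=repeats))


def speedup(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the new run is (1.22 means 1.22x)."""
    return old_seconds / new_seconds


def percent_faster(old_seconds: float, new_seconds: float) -> float:
    """The same ratio expressed as a percentage over the old run."""
    return (speedup(old_seconds, new_seconds) - 1) * 100


if __name__ == "__main__":
    # Prints the interpreter version and its best time for this workload.
    print(sys.version.split()[0], f"{best_time():.4f}s")
```

Under this convention a 1.22x speed-up corresponds to "22% faster". A single micro-benchmark like this says little about real applications, which is why a suite of benchmarks is used for the headline numbers.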

Extras

Michael:

- Python 3.10.5 is available (changelog)

- Raycast (vs Spotlight)

- e.g. CMD+Space => pypi search:


Joke: Why wouldn't you choose a parrot for your next application

Episode Transcript


00:00 Hello and welcome to Python Bytes, where we deliver Python news and headlines directly to your earbuds.

00:04 This is episode 288, recorded June 14th, 2022.

00:10 I'm Michael Kennedy.

00:11 And I am Brian Okken.

00:12 Brian, how are you doing?

00:13 I'm excellent today.

00:15 I hear you're a little busy.

00:16 But it's just, you know, being a parent and having side jobs and stuff like that.

00:21 Of course. Well, it's better than the alternative.

00:24 Definitely. I was talking to somebody this weekend about like their one job

00:28 and trying to balance job and life.

00:30 And I'm like, I don't even remember what that's like with just one job.

00:33 I know. Or you have a job where you go to work and you do the work.

00:36 And then when you go home, there's no real reason to do the job anymore.

00:39 So you can just step away from it.

00:41 It sounds glorious.

00:41 And yet I continue to choose the opposite, which I also love.

00:45 All right. Well, speaking of stuff people might love, you want to kick us off with your first item?

00:50 Yeah, we're going to talk about polar bears.

00:52 No, not polar bears.

00:53 A project called Polars.

00:56 And actually, it's like super fun and cool.

00:59 So Polars was suggested to us by actually several listeners.

01:02 We got several people sent in.

01:05 And I'm sorry, I don't have their names, but thank you.

01:07 Always send great stuff our way.

01:09 We love it.

01:10 But Polars is billed as a lightning fast data frame library for Rust and Python.

01:17 And it is written in Python.

01:21 No, it's written in Rust.

01:23 But they have a full API is present in Python.

01:31 And it's kind of neat, actually, how they've done it.

01:34 So we've got up on the screen the splash screen for the Polars project.

01:40 There's a user guide and API reference, of course.

01:43 But one of the things I wanted to talk about is some of their why you would consider it.

01:48 So Polars is lightning fast data frame library.

01:52 It uses an in-memory query engine.

01:54 And it says it's embarrassingly parallel in execution.

01:59 And it has cache-efficient algorithms and expressive API.

02:04 And they say it makes it perfect for efficient data wrangling, data pipelines, snappy APIs, and so much more.

02:10 But it's also just fun.

02:13 I played with it a little bit.

02:16 It's zippy and fun.

02:17 They have both the ability to do lazy execution and eager execution, whichever you prefer for your use.

02:26 It's multi-threaded.

02:28 It has a notion of single instruction, multiple data.

02:33 I'm not exactly sure what that means, but makes it faster, apparently.

02:37 And I was looking through the whole, the introductory user's guide is actually written like a very well-written book.

02:48 And it looks like the whole guide, as far as I can tell, is written for the Python API.

02:53 So I think that was part of the intent all along, is to write it quickly in Rust,

02:58 expose it to Rust users also, but also run it with Python.

03:03 And it's just really pretty clean and super fast.

03:08 One of these benchmark results performance things was, it's like Spark was taking 332 seconds and they took 43 seconds.

03:18 It's 100 million rows and it's just like, let's load up a couple of pieces of data or something.

03:27 Right.

03:27 So there's a lot of focus on this, making sure that it's fast, especially when you don't need everything, like doing lazy evaluation or making sure you do multiprocessing.

03:41 One of the things I thought was really kind of cool about it, I was looking through the documentation, is there's a section on, that says, it was a section that was talking about parallelization.

03:54 It says, do not kill the parallelization.

03:56 Because with Python, we know there are ways to use Polars that can kill parallel processing because of the GIL.

04:07 If you don't do it the way they've set it up, you can use it in a way that makes it a little slower, I guess, is what I'm saying.

04:16 So there's a section on this talking about the Polars expressions.

04:22 And these are all set up so that you can pass these expressive queries into Polars and have it run in the background and just make things really fast.

04:33 And sort of skirt around the GIL because you're doing all the work in the Rust part of the world.

04:41 And then collecting the data later.

04:44 So there's like a set up the query and then collect the query.

04:47 That's kind of cool.

04:49 So, anyway, I just thought this looks really fun.

04:52 And you don't have to know that it's in Rust.

04:57 You just say pip install polars and it works.

05:00 So.

05:00 Yeah, that's great.

05:01 Out in the audience, Tharab asks, why Rust and not C?

05:06 Maybe an example there is Pandas versus this.

05:10 Also, probably the person who wrote it just really likes Rust.

05:12 And I think Rust has a little bit more thread safety than straight C does.

05:17 I'm not 100% sure.

05:18 But this uses threads, as you point out, whereas the other ones, pandas

05:22 and others in C, don't.

05:24 I also think that we're going to see a lot more of things like this.

05:27 Like, because I think some of the early faster packages for Python were written in C because Rust wasn't around or it wasn't mature enough.

05:37 But I think we're going to see more people saying, well, I want it to be closer to the processor for some of this stuff.

05:45 Why not Rust?

05:46 Because I think Rust is a cleaner development environment than C right now.

05:51 Yeah, I agree.

05:52 Absolutely.

05:53 It's just a more modern language, right?

05:55 You know, C is keeping up.

05:57 C is never going to be old, I don't think.

06:00 But yeah.

06:01 Yeah.

06:02 Yeah, yeah.

06:02 I don't mean to say that C is out, not modern in the sense that people are not using it.

06:06 But it doesn't embrace in its sort of natural form the most, you know, smart pointers and things like that.

06:12 Yeah.

06:12 And there's C++ maybe, but not C.

06:14 There's safety features built into Rust to make sure you don't, just make it easier to not do dumb things.

06:21 I guess.

06:22 Let's put it that way.

06:23 Indeed.

06:25 All right.

06:25 Well, let's jump on to my first item, which is a follow up from last week.

06:29 Python Developers Survey 2021.

06:31 Yes, you heard that right.

06:33 I know it's 2022.

06:33 These are the results from the survey that was at the end of last year.

06:36 So let's, I'm going to kind of skim through this and just hit on some of the main ideas here.

06:42 There's a ton of information and I encourage people to go over and scroll through it.

06:46 This is done in conjunction with the folks over at JetBrains, the PyCharm team and all that.

06:51 So it was collected and analyzed by the JetBrains folks, but put together independently by the PSF, right?

06:58 So it's intended to not be skewed in any way towards them.

07:01 All right.

07:02 So first thing is if you're using Python, is it your main language or your secondary language?

07:06 84% of the people say it's their main language with 16% picking up the balance of not so much.

07:12 It's been pretty stable over the last four years.

07:14 What do you think of this, Brian?

07:15 I think that there's a lot of people like me.

07:20 I think that it started out as my secondary language and now it's my main language.

07:24 Yeah.

07:25 Interesting.

07:26 Yeah.

07:27 And it got sucked in.

07:27 Like, ah, maybe I'll use it to test my C stuff.

07:29 Wait, actually, this is kind of nice.

07:30 Maybe I'll do more of this.

07:31 Yeah.

07:32 There's always the next question or analysis is always fraught with weird overlaps.

07:39 But I like the way they ask this a little bit better than a lot of times.

07:42 It says Python usage with other languages.

07:45 What other languages do you use Python with?

07:47 Rather than maybe a more general one where they ask, well, what is the most popular language?

07:51 And you'll see weird stuff like, well, most people code in CSS.

07:54 Like, I'm a full stack CSS developer.

07:57 Like, no, you're not.

07:58 Just everyone has to use it.

07:59 Like, what is this?

07:59 It's a horrible question.

08:00 Right.

08:01 So this is like, if you're doing Python, what other languages do you bring into the mix?

08:05 And I guess maybe just hit the top five.

08:07 JavaScript, because you might be doing front and back end.

08:09 HTML, CSS, same reason.

08:11 Bash shell, because you're doing automation build, so on.

08:14 SQL.

08:15 SQL.

08:15 I'm surprised there's that much direct SQL, but there it is.

08:19 And then C and C++, speaking of that language.

08:21 Also, to sort of address the thing that I brought up before, Rust is at 6%.

08:27 Last year, it was at 5%.

08:28 So that's compared to C at 30

08:30 and 29, so they both grew by 1% this year.

08:33 Okay.

08:33 Yeah.

08:34 I think they both grew.

08:36 That's interesting.

08:36 Yeah, exactly.

08:37 Another thing that people might want to pay attention to is you'll see year over year stuff

08:43 all over the place in these reports, because they've been doing this for a while.

08:46 So like the top bar that's darker or sorry, brighter is this year, but they always also

08:53 put last year.

08:54 So for example, people are doing less bash.

08:56 You can see, like, its lower bar is higher, and they're doing less PHP.

09:00 Probably means they love themselves a little bit more.

09:03 Don't go home crying.

09:05 Okay.

09:06 Let's see.

09:07 Languages for web and data science.

09:09 This is kind of like if you're doing these things, what to use more.

09:13 So if you're doing data science, you do more SQL is your most common thing.

09:16 If you're doing web, surprise, JavaScript and HTML are the most common other things.

09:21 Yeah.

09:22 Let's see.

09:23 What do you use Python for?

09:25 Work and personal is 50%.

09:27 Personal is 29 and work 20%.

09:30 Kind of interesting that more people use it for side projects.

09:34 If they use it for just one or the other of work or personal.

09:37 I guess people who know Python at work, they want to go home.

09:40 They're like, you know what?

09:40 I could automate my house with this too.

09:42 Let's do that.

09:42 I think that, yeah, I would take it like that.

09:45 I think for more people, it isn't even just automating your house.

09:48 It's just playing around with it at home.

09:49 Like, yeah, I heard about this, this new web framework, FastAPI.

09:53 I want to try it out.

09:54 Things like that.

09:55 So.

09:55 Yeah, absolutely.

09:56 I'm going to skip down here through a bunch of stuff.

09:58 What do you use Python for the most?

10:01 Web development, but that fell year over year.

10:04 Data analysis stayed the same year over year.

10:06 Machine learning fell year over year.

10:08 And a bunch of stuff.

10:09 But so sort of the growth areas year over year are education and desktop development.

10:14 And then other, I think it's pretty.

10:17 Also game development doubled.

10:19 Doubled from one to two percent.

10:21 I mean, from one to two is probably like there was, you know, that might be within the margin

10:25 of error type of thing.

10:26 But still, it doubled.

10:27 But I think just the other.

10:28 No, other didn't grow.

10:30 There's just, I think it's just more spread out.

10:31 I don't know.

10:32 Because there's still, I think, same number of people using Python.

10:34 All right.

10:34 Are you a data scientist?

10:36 One third, yes.

10:37 Two thirds, no.

10:38 That's that fits with my mental model of the Python space.

10:42 One third data science, one third web and API, and one third massively diverse other.

10:47 The way I see the ecosystem.

10:48 Python 3 versus 2.

10:51 I think we're asymptotically, as a limit, approaching Python 3 only.

10:55 But year over year, it goes 25% from 2017, then 16% Python 2, then 10%, then six, then

11:03 five.

11:03 And then there's just huge code bases that are stuck on Python 2.

11:06 Like some of the big banks have like 5,000 Python developers working on Python 2 code bases

11:12 that are so specialized and tweaked that they can't just swap out stuff.

11:16 So, you know, that might represent 5% bank usage.

11:19 I don't know.

11:19 I just, I feel bad for you.

11:23 We're rooting for you.

11:25 Everybody out there using Python 2.

11:27 Stick in there.

11:28 Let's approach that limit.

11:29 Yeah, yeah.

11:30 Let's divide by n factorial, not n for your limit there.

11:32 Let's go.

11:33 Get in there.

11:33 All right.

11:34 Python 3.9 is the most common version.

11:37 3.10 being 16% and 3.8 being 27% versus 35.

11:42 So that's, that's pretty interesting.

11:44 Yeah.

11:44 I feel like this is, hey, this is what comes with my Linux.

11:47 This is what comes with my Docker.

11:49 So I'm using that, but maybe it's more.

11:51 Yeah.

11:52 It's interesting because you and I like our interesting space because we're always looking

11:56 at the new stuff.

11:57 So I'm at 3.10 and I can't wait to jump to 3.11.

12:00 Yeah.

12:01 And actually I've switched to 3.11 for some projects.

12:05 So, but there's a lot of people that was like, man, Python's pretty good.

12:09 And then it's been good for a while.

12:11 So I don't need a lot of the new features.

12:13 So.

12:14 Yeah, for sure.

12:15 I'm going to later talk about something that might shift that.

12:19 Yeah.

12:20 To the right.

12:22 I've actually been thinking like, should I maybe install 3.11 beta?

12:25 See how stable that is on the servers.

12:27 We'll see.

12:28 That might be a bad choice.

12:29 Might be a good choice.

12:31 That's what's okay.

12:32 Where do you install Python from?

12:34 38%?

12:35 Just download the thing from Python.org and run with that.

12:37 Yeah.

12:38 The next most common option is to install it via your OS package manager, apt, Homebrew,

12:46 whatever.

12:46 Yeah.

12:47 And Alvaro has a great little recommendation out there for people who are stuck on Python

12:51 2.

12:51 There probably is a support group for Python 2 users.

12:54 Hi, my name is Brian and I use Python 2.

12:57 Hi, Brian.

12:59 All right.

13:02 Another one I thought was pretty interesting is the packaging stuff, the isolation stuff.

13:08 Before we get there, really quick, web frameworks: FastAPI continues to grow.

13:13 Yeah.

13:13 Pretty strong here.

13:15 We've got Flask, which is now maybe within the margin of error, but just edged ahead of Django, but FastAPI

13:22 almost doubled in usage over the last year.

13:25 It grew nine percentage points, but it was at 12% last year.

13:28 And so now it's at 21%, which is, that's a pretty big chunk to take out of established

13:32 frameworks.

13:32 Yeah.

13:33 Well, and it looks like the third is none.

13:36 I haven't tried that yet.

13:38 Yeah.

13:38 It gets a lot of attribute errors, but it's, it's really efficient because it doesn't do

13:42 much work.

13:42 Yeah.

13:44 Yeah.

13:44 People who maybe don't know FastAPI, the name would indicate it's only for building

13:48 APIs, but you can build web apps with it as well.

13:51 And it's pretty good at that.

13:52 Especially if you check out Michael's courses, he's got like two courses on building web apps

13:59 with FastAPI.

14:00 I do.

14:01 I do.

14:01 And I also have a, some, some sort of template extensions for it that make it easier.

14:04 All right.

14:05 Data science libraries.

14:06 I don't know how I feel about this one.

14:08 Do you use NumPy?

14:09 Well, yes, but if you use other libraries, then you also use NumPy.

14:12 So yeah, it's like all of these are using NumPy.

14:14 So exactly.

14:16 Exactly.

14:17 Yeah.

14:18 a bunch of other stuff.

14:19 Look at that for unit testing.

14:21 Would it surprise you that pytest is winning?

14:22 No.

14:23 They just overtook Num this year, didn't it?

14:26 yeah.

14:30 So.

14:31 All right.

14:32 ORMs, SQLAlchemy is ahead and then there's, Django ORM.

14:37 Django is tied to Django.

14:39 SQLAlchemy is broad.

14:40 So there's, there's that.

14:41 And then kind of the none of the ORM world is raw SQL at 16%.

14:45 That's pretty interesting.

14:47 Postgres is the most common database by far at 43%.

14:51 Then you have SQLite, which is a little bit of a side case.

14:54 You can use it directly, but it's also used for development.

14:56 And then MySQL, then MongoDB, and then Redis and Microsoft SQL Server.

14:59 So.

15:00 Yeah.

15:00 Huh.

15:01 Actually SQL Server and Oracle are higher than I would have expected, even though, you know,

15:05 but it's okay.

15:06 Well, I think what you're going to find is that there's like certain places, especially

15:10 in the enterprise where it's like, we're a SQL Server shop or we're an Oracle shop and

15:15 our DBAs manage our databases.

15:16 So here you, you put in a, you file a ticket and they'll create a database for you.

15:21 Yeah.

15:21 Or there's a, there's already an existing database and you're connecting to it or something.

15:25 Yep.

15:25 Yep.

15:26 Exactly.

15:26 Exactly.

15:27 All right.

15:28 Let's keep going.

15:28 Cloud platforms.

15:29 AWS is at the top.

15:31 That's at 50%, and then GCP, Google Cloud Platform, then Azure, then

15:36 Heroku, DigitalOcean.

15:38 Linode has made it on the list here.

15:39 So, you know, a former or sometimes sponsor of the show, it's good for them.

15:45 And let's see, do you run, how do you run stuff in the cloud?

15:48 Let's skip over this.

15:49 A few more interesting things and we'll call it. Compared to

15:53 2020, Linux and macOS popularity decreased by 5% while Windows usage has risen by 10%.

16:00 Wow.

16:00 Yeah.

16:01 Where the Windows people are now more than double the macOS people and are almost rivaling

16:06 the Linux people.

16:06 I think that's just due to the growth of Python.

16:10 I think Python's just making it more into an everybody's-using-it sort of thing.

16:16 Yeah.

16:16 And there's also the Windows Subsystem for Linux.

16:18 It's been coming along pretty strong, which makes Windows more viable, to have

16:24 more parity with your cloud targets.

16:26 Right.

16:26 Yeah.

16:27 And Felix out in the audience says it's because of WSL.

16:29 Yeah.

16:29 Maybe.

16:30 Yeah.

16:30 Okay.

16:31 let's see a few more things.

16:33 Documentation.

16:34 It's cool.

16:34 They're asking about like what documentation frameworks you use.

16:36 This one's interesting to me.

16:38 What's your main editor, VS Code or PyCharm?

16:40 I ask this question a lot at the end of Talk Python and it feels like VS Code, VS Code,

16:44 VS Code, VS Code is what people are saying all the time, but it's 35% VS Code, 31% PyCharm

16:49 and Brian right there for you.

16:50 7% Vim, but.

16:52 Okay.

16:53 Yeah.

16:54 I just teased you.

16:55 Yeah.

16:56 To be fair, it's both VS Code.

16:59 It's, it's, it's all three.

17:00 Yeah.

17:00 Or top.

17:01 Yeah.

17:01 Top four for me, but yeah.

17:03 Yeah, exactly.

17:04 Well, often you probably just use Vim bindings within the other two, right?

17:07 Yep.

17:08 Yeah.

17:08 Let's see.

17:09 I think also maybe another interesting breakdown is that if you look at the usage scenarios

17:17 or the type of development done with the editors, you get different answers.

17:21 So like for data science, you've got more PyCharm and for web development, I think, hold on,

17:28 I have that right.

17:29 Oh, interesting.

17:30 For data science, you have a lot more VS Code.

17:32 For web development, you have more PyCharm and you have a lot less other in data science,

17:36 AKA Jupyter.

17:38 I suspect it.

17:39 Yeah.

17:39 Yeah.

17:39 Okay.

17:40 How did you learn about your editor?

17:42 By far?

17:43 Or first one here is from a friend.

17:45 So basically friends like push editors, like drug dealers, like gotta get out.

17:49 What are you doing on that thing?

17:50 Get in here.

17:50 No, I think it's like, if I'm, if I'm watching somebody do something cool, I want to do it

17:55 also because it looks helpful.

17:57 Yeah, exactly.

17:57 You sit down next to your friend and you're like, how did you do that?

17:59 That's awesome.

18:00 I want that feature, right?

18:01 I think you're probably right.

18:02 Okay.

18:03 Let's just bust down a few things better.

18:05 One, do you know, or what do you think about the new developer in residence role?

18:09 This is Łukasz Langa, that's going on right now.

18:12 77% are like, the what?

18:14 Never heard of it.

18:18 Maybe like we got, we got a little more advocacy job to do here, but he's been doing a great

18:23 job really speeding things up and sort of greasing the wheels of open source contributions

18:28 and whatnot.

18:29 I, yeah, I'm going to take it like design because if design's done well, nobody knows it's there.

18:35 And yeah, I think the same thing.

18:37 I think if he's doing his job really, really well, most people won't notice things will

18:41 just work.

18:41 Yep.

18:42 Yeah.

18:43 Quick real time follow up.

18:44 Felix out in the audience says, I use PyCharm because of Michael.

18:47 It should have been one of the options in the survey because of Michael.

18:53 Oh, come on.

18:54 That's awesome.

18:55 But no, let's see.

18:57 There's a bunch of questions about that.

18:58 And the final thing I want to touch on is Python packaging.

19:00 Let's see here.

19:03 Which tools related to Python packaging do you use directly?

19:06 And we've talked about Poetry.

19:08 We've talked about Flit, pipenv and so on.

19:13 And 81% of the people are like, I use pip for packaging.

19:20 As opposed to Flit or something.

19:22 And then sort of parallel to that is for virtual environment.

19:25 Do you use the, you know, what do you use for virtual environments basically?

19:28 Yeah.

19:29 Like 42% is like, I just use the built-in one or I use virtualenvwrapper.

19:34 And then it's like Poetry, pipenv, tox and so on.

19:39 There's a few.

19:40 I don't know what this is.

19:40 Yeah.

19:42 Well, I'm glad they included that because one of the original questions didn't include

19:47 like the built-in venv.

19:49 And that's, I think that's what most people use.

19:52 It is.

19:53 Yeah.

19:54 Yeah.

19:54 Absolutely.

19:55 All right.

19:55 Well, I think there's more in my progress bar here.

19:58 This is a super detailed report.

20:00 I'm linking to it in the show notes.

20:02 So just go over there and check it out if you want to see all the cool graphs and play

20:05 with the interactive aspects.

20:06 But thanks again to the PSF and JetBrains for putting this together.

20:09 It's really good to have this insight and these projections of where things are going.

20:13 Yeah.

20:14 Hey.

20:15 All right.

20:15 I'm going to grab the next one.

20:17 Ooh, we did this smoothly this time.

20:19 Nice.

20:19 So Gin Config is just Gin actually, but the project's called Gin Config.

20:28 And it's kind of a neat little thing.

20:32 It's a different way to think about configuration files.

20:35 So like you have your pyproject or you have .toml files.

20:41 You could have .ini files.

20:42 There's a lot of ways to have configuration files.

20:45 But Gin takes the perspective of, oh, well, what if you just, what if you're not really

20:53 into all of that stuff and you're a machine learning person and you just have a whole bunch

20:57 of stuff to configure and you're changing stuff a lot?

21:00 Maybe let's make it easier.

21:01 So I actually came across this because of Vincent Warmerdam.

21:10 He's got an excellent intro to Gin on his calmcode site.

21:15 And the idea is, for a function in your

21:22 code, you've got some code and you have like part of it that you want configurable.

21:26 You just slap a gin.configurable decorator onto it.

21:30 And then all of the parameters to that function are now something that can show up in a config

21:36 file.

21:36 And it's not an .ini file.

21:38 I actually don't know the exact syntax, but it just kind of looks like Python.

21:41 You just have names.

21:45 Like in this example that I'm showing, there's a file called simulate and

21:51 there's actually a function called simulate and a parameter called n_samples.

21:55 And in your config file, you can just say simulate.n_samples equals a hundred or something like that.

22:02 Oh, wow.

22:03 This is like, it basically sets the default parameters for all your functions you're calling.

22:08 Yeah.

22:09 The ones that you want to be configurable and you just do that.

22:13 Now it's still where you can still set defaults within your code and, and just like you normally

22:20 would.

22:20 And then, and then you can configure the ones that you want to be different than the defaults.

22:24 So that's a, that's a possibility.

22:26 And there's a whole bunch of, I'm going to expand this a little bit.

22:30 There's a whole bunch of different things that Vincent goes through like required settings.

22:36 You can specify, like, a

22:39 what is it?

22:40 gin.REQUIRED as a function,

22:43 or as your parameter, and then it makes it so that your user has to

22:49 put it in their config file.

22:50 That's kind of cool.

22:52 And then you can also, if you don't want somebody to configure something, you can, you can mark

22:58 it as, oh, he's got blacklist the n_samples.

23:03 So in this example, he's got a simulate function with two parameters, random_func

23:07 and n_samples.

23:08 You want people to configure the random_func, but you don't want them to touch n_samples.

23:12 You can, you can say, don't do that.

23:15 So, it's kind of neat.

23:17 There's a whole bunch of cool features around it.

23:19 Like, like being able to specify different functions so you can name things and, do

23:25 it around like, like to say like in his example, he's got random functions.

23:29 And if you, you can specify, you know, one of the other, one of the other, like a random

23:35 triangle function, you can specify a function and assign it to that.

23:39 He's got named things.

23:41 It's a really interesting way to think about configuration, and

23:48 the motivation section of the documentation for Gin says that often modern machine learning

23:55 experiments require just configuring a whole bunch of parameters and, and then you're

24:01 tweaking them and stuff.

24:02 And, and, and to have that be as easy as possible and as simple as possible, because

24:08 it is, and you're going to add some and take some away and things like that because some

24:12 things you want configured and then you decide not to not having to go through a config parser

24:18 system, and just making it as trivial as possible to add parameters.

24:22 I think it's a really cool idea.

24:23 So.

24:24 It is a cool idea.

24:25 It reminds me of like dependency injection a little bit.

24:28 Yeah.

24:28 you know, where you would like configure, say like if somebody asks for a function

24:33 that implements this or that, that goes here, like this is the data access layer to use, or

24:38 here's the ORM I want you to pick this time.

24:40 It's not super common in Python, but it's pretty common in a lot of languages.

24:45 And it feels a little bit like that.

24:46 Can we configure stuff?

24:48 So we have these parameters that we might use for testing or something, but it just, they get

24:53 filled in automatically.

24:54 Yeah.

24:55 Even FastAPI has that for example.

24:56 Yeah.

24:57 Yeah.

24:57 So cool.

24:58 Somebody in the audience asks, isn't Gin used with Go?

25:03 And I'm not sure about that, but Gin is not an officially supported Google

25:09 product, though it's under Google's GitHub organization.

25:14 So maybe. It does look very Python-like, though, for the config files.

25:19 And that's cool.

25:20 Yeah.

25:20 Good one.

25:21 All right.

25:21 Let me switch back before I swap over.

25:23 Okay, here we go.

25:24 Now this next one, I think universally will be well accepted.

25:30 Although the comment section about it was a little bit rough and tumble.

25:33 Nonetheless, I think it should be universally exciting to everyone.

25:37 And this comes to us from Eduardo Orochena who sent over this article that said the, what's

25:44 it called?

25:44 The Python 3.11 performance benchmarks are looking fantastic.

25:48 And oh boy, are they?

25:50 So we're talking beta code six months out, right?

25:54 And still, still we've got some pretty neat stuff.

25:56 So this links over to an article with that same title by Michael Larabel, which basically

26:02 says, look, we took a whole bunch of different performance benchmarks for Python and ran them

26:07 on the Python 3.11 beta, which is the thing I was hinting at.

26:12 You might really want to consider this if you're thinking, should we upgrade from

26:17 3.9 to 3.10?

26:18 Maybe you want to just go straight to 3.11.

26:20 Right.

26:20 I mean, you know, sort of a side thought, Brian, isn't it awesome that the one that

26:25 goes like crazy performance, this one goes to 11.

26:28 All right.

26:33 So they show all the stuff that they're testing on, like an AMD Ryzen, 16 cores, 32 with hyperthreading,

26:39 the motherboard.

26:41 I mean, down to the motherboard and the chipset and the memory and all that.

26:44 So pretty decent stuff.

26:47 And then also the build commands and all sorts of things here.

26:50 So pretty repeatable, I think.

26:52 Yeah.

26:53 Rather than just like, hey, I ran it and here's a graph without, without axes or something like

27:00 that.

27:00 So you can kind of click through here and you see some pictures and it says, all right,

27:03 well, there's the Pybench, which I think is like the standard simple one.

27:07 It says, look at this.

27:08 The Python 3.11 beta is faster than 3.10, which by the way, was slightly slower than the previous

27:14 ones.

27:14 But you know, what is that?

27:16 10% or something.

27:17 So already actually 16% better.

27:20 So that's already pretty awesome.

27:22 But there's a whole bunch of other ones.

27:24 They did one called Go.

27:25 I don't know what these benchmarks are.

27:27 I don't think this has anything to do with the language Go, it's just the name of

27:31 the benchmark.

27:31 And then there's 2to3 and chaos.

27:33 That one sounds like the funnest.

27:34 But if you look at this Go one, this one is like almost 50% faster.

27:39 50% faster.

27:41 That's insane, right?

27:42 Yeah.

27:42 Wow.

27:43 And you come down to 2to3, and these are all estimates, 20, 25% faster,

27:49 say 40% faster with the chaos one.

27:53 Come down to the float operations, and Python 3.10 was already better than the others.

27:57 But this is again, maybe 30% faster.

28:00 And let's roll into the next page.

28:03 You just kind of see this across the board.

28:05 Better, better.

28:06 Some of them are super better.

28:07 Some are like a little bit better, like Pathlib's better, but not crazy.

28:11 Ray tracing is like, again, 40% better here.

28:14 And you keep going.

28:16 There's another one with this huge jump, Crypto PyAES, some sort of encryption thing.

28:21 So there's a bunch of these. There's this one at the end where you're

28:25 like, oh wait, this one got way worse.

28:26 Be careful, because it says more is better on this composition,

28:30 I guess, of the results here.

28:31 Like how much more computing power do you get per CPU cycle or whatever?

28:36 What is that?

28:37 That's a massive jump.

28:38 You saw a little bit better improvements from 3.8 to 3.9, 3.9 to 3.10.

28:43 But 3.10 to 3.11 is like 40%, yeah, 41% better on the beta before it's even final.

28:50 Wow.

28:50 That's pretty exciting, right?

28:51 That's very exciting.

28:52 And actually, I think, I'm curious what some of these negative comments are, but the interesting

28:59 thing is to run lots of different metrics and lots of different benchmarks and having them

29:06 all be, it's faster kind of means that, I mean, I take it as, you know, your mileage may vary,

29:12 but it's going to be better for whatever you're doing, probably.
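
Since "your mileage may vary", one way to check is to time your own code under each interpreter. The following is a rough sketch using the standard library's `timeit`; the `workload` function is a hypothetical stand-in for whatever your application actually does, and this is not the benchmark suite used in the article.

```python
import sys
import timeit


def workload():
    # Hypothetical stand-in for your own hot code path.
    return sum(i * i for i in range(10_000))


# Take the best of several repeats to reduce noise from other processes.
best = min(timeit.repeat(workload, number=100, repeat=5))

version = f"{sys.version_info.major}.{sys.version_info.minor}"
print(f"Python {version}: {best:.4f}s per 100 calls")
```

Running the same script with two interpreters (for example a 3.10 and a 3.11 install) gives a like-for-like comparison on your own machine.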

29:16 Yeah.

29:17 Yeah.

29:17 Yeah.

29:17 It feels like this is a thing you could just install and things get better.

29:20 The negative comments are mostly like, well, if Python was so slow that it could be made this

29:25 much faster, then Python is a crappy language.

29:26 It's pretty much, I've summed up like 65 comments right there.

29:31 By the way, I interviewed Guido van Rossum and Mark Shannon a little while ago about

29:39 this whole project about making Python five times, not 40%, but five times faster.

29:43 And the goal is to make it a little bit faster like this, each release for five releases in

29:47 a row.

29:48 And because of compounding, that'll get you to like five times.

29:51 So it looks like they're delivering, which is awesome.
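
The compounding arithmetic behind the five-times goal can be checked quickly. This sketch only uses the figures mentioned in the conversation (five releases, a 5x target, roughly 40% per release) and is not taken from the project's own plan.

```python
releases = 5
target_speedup = 5.0

# Speedup each release needs so that five releases compound to 5x.
per_release = target_speedup ** (1 / releases)

# What five releases compound to if each one is about 40% faster.
compounded = 1.4 ** releases

print(f"needed per release: {per_release:.2f}x")   # about 1.38x
print(f"1.4x five times:    {compounded:.2f}x")    # about 5.38x
```

So a gain of roughly 38% per release, sustained for five releases, is what the 5x target works out to.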

29:53 Yeah.

29:53 This is good.

29:54 Yeah.

29:55 Well, cool.

29:55 All right.

29:56 Yeah.

29:57 I think that's it for all of our items.

29:59 Yeah.

30:00 Got any extras?

30:01 No, I was going to pull up the, so yeah, the "this one goes to 11."

30:06 If people don't know, that's a Spinal Tap reference.

30:08 Yeah, exactly.

30:12 All right.

30:13 I got a few extras to throw out real quick.

30:15 Python 3.10.5 is out with a bunch of bug fixes.

30:19 Like what happens if you create an f-string that doesn't have a closing curly, and just a

30:23 bunch of crashes and bug fixes.

30:25 So if you've been running into issues, you know, maybe there's a decent amount of stuff in

30:29 the changelog here.

30:29 Nice.

30:30 People can check that out.

30:31 Also real quick, if people are on a Mac, they might check out Raycast, which

30:36 is a replacement for the Command-Space Spotlight thing that has all these developer plugins.

30:41 So you can do things like interact with your GitHub repo through Command-Space and stuff.

30:46 You can create a lot of things.

30:48 And there's a bunch of extensions.

30:49 This thing's free, at least if you're not on a team, and there's a bunch of different things you can get, like managing

30:58 processes, doing searches, VS Code project management from Command-Space and whatnot.

31:28 which ties really well back to the PSF survey.

31:30 We talked about, well, what framework do you use?

31:32 What data science framework do you use?

31:34 Or what web framework do you want to use?

31:36 Django or Flask or FastAPI or what?

31:39 So here's one that is a pretty interesting analysis.

31:42 And the title is, why wouldn't you choose a parrot for your next application?

31:46 Not a framework, but literally a parrot.

31:48 And this is compared to machine learning.

31:51 So it has this breakdown of features, like a feature table.

31:56 And it has a parrot, which literally just has a picture of a parrot.

31:58 And this is machine learning algorithms with a neural network.

32:00 And then it lists off the features.

32:02 Learns random phrases.

32:03 Check, check.

32:04 Doesn't understand anything about what it learns.

32:08 Check, check.

32:09 Occasionally speaks nonsense.

32:11 Check, check.

32:12 It's a cute birdie parrot.

32:13 Check, fail.

32:15 Why wouldn't you choose this, Brian?

32:19 This is funny.

32:21 I love it.

32:22 Yeah, it's pretty good.

32:24 Pretty good stuff.

32:25 It actually reminds me, I'd have to pull up this article.

32:28 So I was reading about some machine learning stuff to try to get models like even closer

32:33 and closer to reality.

32:34 There's a whole bunch of tricks people do.

32:36 And then, and then there's some analysis of like, sometimes it's actually not doing anything

32:42 more than just a linear regression.

32:44 So, yeah.

32:45 Try simple for an if statement.

32:47 Yeah.

32:48 Yeah, yeah, yeah, yeah.

32:49 For sure.

32:50 They're using artificial intelligence to make the computer decide.

32:53 No, it's an if statement.

32:54 Like, it's just computers deciding things the old fashioned way.

32:58 Yeah, yeah.

32:59 So.

32:59 Awesome.

33:00 All right.

33:00 Well, thanks for being here.

33:01 Thank you.

33:02 Thanks everyone for listening.