#222: Autocomplete with type annotations for AWS and boto3

Sponsored by Linode! pythonbytes.fm/linode

Special guest: Greg Herrera

YouTube live stream for viewers:

Michael #1: boto type annotations

- via Michael Lerner

boto3's services are created at runtime, so IDEs aren't able to index its code in order to provide code completion or infer the type of these services or of the objects created by them.

- Type systems cannot verify them

- Even if it was able to do so, clients and service resources are created using a service agnostic factory method and are only identified by a string argument of that method.

boto3_type_annotations defines stand-in classes for the clients, service resources, paginators, and waiters provided by boto3's services.

Example with “bare” boto3:

Example with annotated boto3:
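
The two screenshots are not reproduced in these notes. As a rough sketch of the difference they showed, assuming the boto3 and boto3_type_annotations packages are installed (the function names below are made up for illustration), the two styles look something like this:

```python
from typing import TYPE_CHECKING, List

if TYPE_CHECKING:
    # Only editors and type checkers need this import; the annotations
    # package adds no runtime behavior.
    from boto3_type_annotations.s3 import Client


def bare_s3_client():
    """'Bare' boto3: the editor only sees a generic client object."""
    import boto3  # imported lazily so the sketch loads without boto3 installed

    return boto3.client("s3")


def annotated_s3_client() -> "Client":
    """Annotated boto3: same factory call, but the editor now knows the type."""
    import boto3

    s3: "Client" = boto3.client("s3")
    return s3


def bucket_names(s3: "Client") -> List[str]:
    """Hypothetical helper: with the annotation, list_buckets() autocompletes."""
    return [bucket["Name"] for bucket in s3.list_buckets()["Buckets"]]
```

Because the annotation is only a hint, the object returned at runtime is still the ordinary boto3 client; the benefit is in autocomplete and in checkers such as mypy flagging a wrong argument type.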

Brian #2: How to have your code reviewer appreciate you

- By Michael Lynch

- Suggested by Miłosz Bednarzak

- Actual title “How to Make Your Code Reviewer Fall in Love with You”

- but 🤮

- even has the words “your reviewer will literally fall in love with you.”

- literally → figuratively, please

- Topic is important though, here are some good tips:

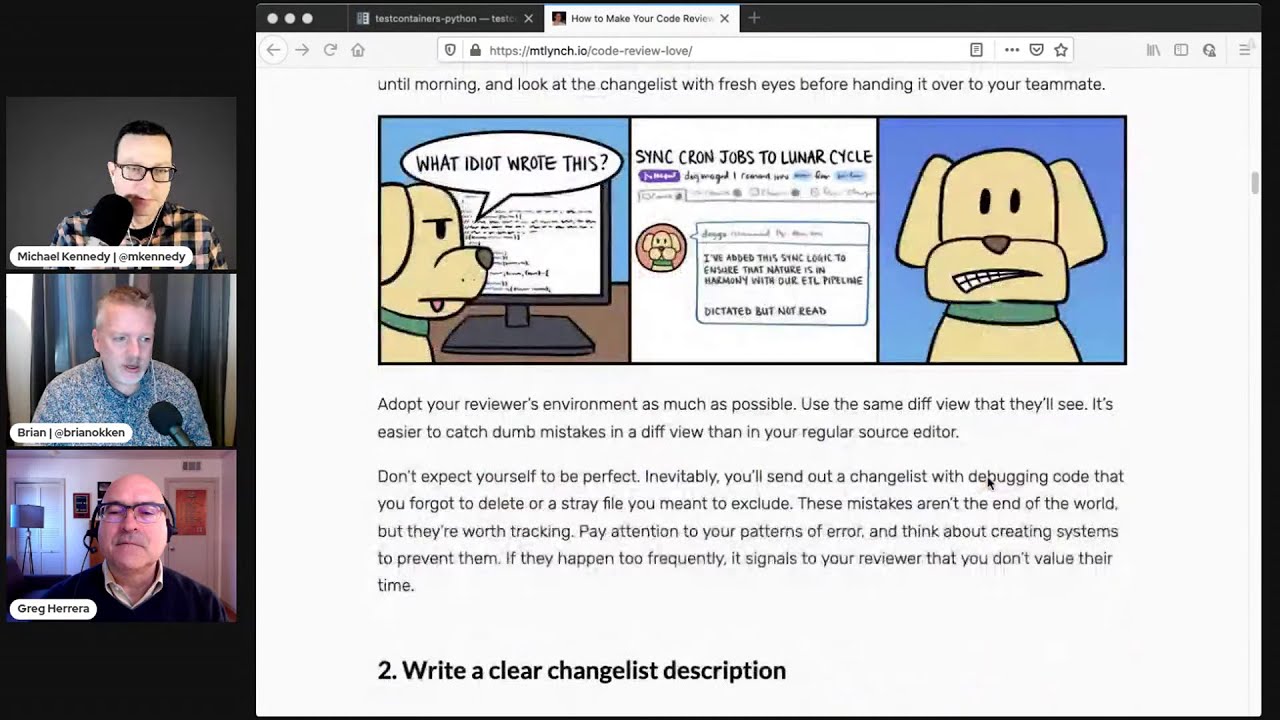

- Review your own code first

- “Don’t just check for mistakes — imagine reading the code for the first time. What might confuse you?”

- Write a clear change list description

- “A good change list description explains what the change achieves, at a high level, and why you’re making this change.”

- Narrowly scope changes

- Separate functional and non-functional changes

- This is tough, even for me, but important.

- Need to fix something, and the formatting is a nightmare and you feel you must blacken it. Do those things in two separate merge requests.

- Break up large change lists

- A ton to write about. Maybe it deserves 2-3 merges instead of 1.

- Respond graciously to critiques

- It can feel like a personal attack, but hopefully it’s not.

- Responding defensively will only make things worse.


Greg #3: REPODASH - Quality Metrics for Github repositories

- by Laurence Molloy

- Do you maintain a project codebase on Github?

- Would you like to be able to show the maturity of your project at a glance?

- Walk through the metrics available

- Use-case
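
To make the kind of metric discussed here concrete, below is a small illustrative sketch of counting issues opened, closed, and still open within a chosen time range. It is not Repodash's code or data model: the `Issue` record and `issue_summary` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Optional, Sequence


@dataclass(frozen=True)
class Issue:
    """Hypothetical issue record (not Repodash's data model)."""

    opened: date
    closed: Optional[date] = None  # None means the issue is still open


def issue_summary(issues: Sequence[Issue], start: date, end: date) -> Dict[str, int]:
    """Count issues opened, closed, and still open for the window [start, end]."""
    opened = sum(1 for i in issues if start <= i.opened <= end)
    closed = sum(
        1 for i in issues if i.closed is not None and start <= i.closed <= end
    )
    # Still open at the end of the window: opened on or before `end`
    # and not closed by then.
    still_open = sum(
        1
        for i in issues
        if i.opened <= end and (i.closed is None or i.closed > end)
    )
    return {"opened": opened, "closed": closed, "still_open": still_open}
```

A project where `still_open` keeps growing while `closed` stays flat is the sort of signal these dashboards are meant to surface at a glance.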

Michael #4: Extra, extra, extra, extra, hear all about it

- Python 3 Float Security Bug

- Building Python 3 from source now :-/ It’s still Python 3.8.5 on Ubuntu with the kernel patch just today! (Linux 5.4.0-66 / Ubuntu 20.04.2)

- Finally, I’m Dockering on my M1 mac via:

docker context create remotedocker --docker "host=ssh://user@server"
docker context use remotedocker

docker run -it ubuntu:latest bash now works as usual, but remotely!

Why I keep complaining about the merge thing on Dependabot. Why!?! ;)

Anthony Shaw wrote a bot to help alleviate this a bit. More on that later.
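
Coming back to the float security bug (CVE-2021-3177) from the top of this item: a quick way to see whether an interpreter is at or past the upstream release that fixed it is to compare version numbers. The sketch below assumes the upstream fix releases 3.6.13, 3.7.10, 3.8.8, and 3.9.2; the `is_patched` helper is a made-up name, and a distribution may backport the fix without changing the version number, so treat a negative result as "check further" rather than proof.

```python
import sys

# First upstream release in each minor series that contains the fix
# for CVE-2021-3177.
PATCHED = {(3, 6): 13, (3, 7): 10, (3, 8): 8, (3, 9): 2}


def is_patched(version=sys.version_info) -> bool:
    """Return True if `version` is at or past the upstream fix release."""
    major, minor, micro = version[:3]
    if (major, minor) in PATCHED:
        return micro >= PATCHED[(major, minor)]
    # Series newer than 3.9 shipped with the fix; anything older is
    # treated as unpatched in this sketch.
    return (major, minor) > (3, 9)


if __name__ == "__main__":
    print("patched" if is_patched() else "possibly vulnerable, check your distro")
```

Running it with the versioned interpreter from an altinstall build (for example `python3.9`) checks that build rather than the system Python.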

Brian #5: testcontainers-python

- Suggested by Josh Peak

- Why mock a database? Spin up a live one in a docker container.

“Python port for testcontainers-java that allows using docker containers for functional and integration testing. Testcontainers-python provides capabilities to spin up docker containers (such as a database, Selenium web browser, or any other container) for testing.”

    import sqlalchemy
    from testcontainers.mysql import MySqlContainer

    with MySqlContainer('mysql:5.7.32') as mysql:
        engine = sqlalchemy.create_engine(mysql.get_connection_url())
        version, = engine.execute("select version()").fetchone()
        print(version)  # 5.7.32

The snippet above will spin up a MySql database in a container. The get_connection_url() convenience method returns a sqlalchemy compatible url we use to connect to the database and retrieve the database version.

Greg #6: The Python Ecosystem is relentlessly improving price-performance every day

- Python is reaching top-of-mind for more and more business decision-makers because their technology teams are delivering solutions to the business with unprecedented price-performance.

- The business impact keeps getting better and better.

- What seems like heavy adoption throughout the economy is still a relatively small inroad compared to what we’ll see in the future. It’s like water rapidly collecting behind a weak dam.

- It’s an exciting time to be in the Python world!

Extras:

Brian:

- Firefox 86 enhances cookie protection

- Sites can save cookies, but can’t share them between sites.

- Firefox maintains separate cookie storage for each site.

- Momentary exceptions allowed for some non-tracking cross-site cookie uses, such as popular third party login providers.

Joke:

56 Funny Code Comments That People Actually Wrote: These are actually in a code base somewhere (a sampling):

/*

* Dear Maintainer

*

* Once you are done trying to ‘optimize’ this routine,

* and you have realized what a terrible mistake that was,

* please increment the following counter as a warning

* to the next guy.

*

* total_hours_wasted_here = 73

*/

// sometimes I believe compiler ignores all my comments

// drunk, fix later

// Magic. Do not touch.

/*** Always returns true ***/

public boolean isAvailable() {

return false;

}

Episode Transcript


00:00 Hello and welcome to Python Bytes, where we deliver Python news and headlines directly to

00:04 your earbuds. This is episode 222, recorded February 24th, 2021. I'm Michael Kennedy.

00:11 And I'm Brian Okken.

00:12 And I'm Greg Herrera.

00:13 Hey, Greg Herrera. Welcome, welcome. We have a special guest.

00:16 Thank you.

00:16 Welcome. Part of the Talk Python team and now part of the Python Bytes podcast. It's

00:21 great to have you here.

00:21 Happy to be here. Thank you.

00:23 Yeah, it's great. Also making us happy and many users throughout the world is Linode.

00:29 Linode is sponsoring this episode and you can get $100 credit for your next project at

00:34 pythonbytes.fm/Linode. Check them out. It really helps support the show.

00:38 So, Greg, you want to just tell people really quickly about yourself before we dive into the

00:42 topics?

00:42 Yeah. Before I joined the team at Python Bytes, I had run a data analytics consulting firm where

00:49 we built data warehouses and did data science type things. It was called business intelligence at the

00:56 time. And as I was learning, we started running into a lot of open source users, in particular,

01:04 Python. And so I dove into the Python ecosystem when I sold that company to get up to speed on how

01:10 things are going to be done in the future.

01:13 That's awesome. One of those Wayne Gretzky moments, right?

01:16 Yes, exactly.

01:17 Cool. Well, awesome. It's great to have you here. So I want to jump right into our first topic. We

01:23 have a lot of things to cover today. So I'll try to not delay too long. But I've got to tell you,

01:29 I'm a big fan of AWS S3. I'm a big fan of some of the services of AWS in general, right? Don't run the

01:37 main stuff over there. But many of the things, many of the services and APIs I use. That said,

01:42 I feel like the S3 or the Boto API, the Boto 3 API rather, is one of the worst programming interfaces

01:50 I've ever used in my life. I mean, it is so frustratingly bad. The way you work with it is

01:58 you go through and you say, I'd like to talk to Amazon. And then you say, I would like to get a

02:03 service. And instead of creating a class or a sub module or something like that, that would be

02:09 very natural in Python. What you do is you go to a function and say, give me the service and you give

02:13 it a string. Like I want quote S3, or I want quote EC2 or quote some other thing. And then you get a

02:20 generic object back and you have no idea what you got back, what you can do to it. You start passing

02:24 stuff over to it. Sometimes it takes keyword arguments, but sometimes you just put dictionaries,

02:29 which are one of the values of a keyword. There's just all this weirdness around it.

02:33 So every time I interact with them, I'm like, oh, I'm just probably doing this wrong. I have no

02:37 idea of even what type I'm working with because it's like this bizarro API that is like levels of

02:43 indirection. It's because it's generated at runtime or at least dynamically, right? There's not static

02:48 Python; it looks at the service you're asking for and then, like, dynamically creates the thing. So I feel

02:54 like there's a lot of work over there that could be done to just, you know, put a proper wrapper at a

02:58 minimum on top of those types of things. That said, wouldn't it be nice if your editor knew better

03:04 than AWS is willing to help you with? So we've got this really cool library that I want to talk about.

03:10 This was sent over by Michael Lerner. And the idea is you can add type annotations as an add-on to the

03:17 Boto library. So then you get full-on autocomplete. So let me give you a little example here. For those

03:22 who are in the live stream, you can see it, but for those who are not, I'll just describe it.

03:26 So for example, if I want to talk to S3, like I said, I say boto3.client, quote S3, as opposed to

03:32 quote EC2. And what comes back is a base client, figure it out. It can do things. It can get a

03:37 waiter and a paginator and it has the possibility to see exceptions about it. And that's it, right?

03:42 That's all you know. And this is the API you get when you're working with things like PyCharm and VS Code

03:47 and mypy and other type annotation validators, right? Linters and whatnot. They get nothing.

03:54 So if you go and use this Boto library, this boto3 type annotations, there's no runtime behavior.

04:01 It just reads, I think they're .pyi files. I can't remember what the final letter is, but it's like

04:07 these kind of like a C++ header file. It just says these things have these, these fields, but no

04:11 implementation. They actually come from, you know, the Boto library. So we just go and import, you know,

04:16 from boto3_type_annotations.s3 import Client. And we say s3: Client equals this

04:24 weird factory thing. Boom. All of a sudden you get all the features of S3. You can say S3 dot and it

04:30 says, create bucket, get object, create multi-part upload. Hey, guess what? Here's all the parameters

04:34 that are super hard to find in the documentation. Thank you, Michael, for sending this over. I already

04:38 rewrote one of my apps to use this. It's glorious. Nice. What do you guys think? So does it, you said

04:44 you rewrote the app. Does it really change? No, I, well, let me rephrase that. I wanted to make a

04:50 change in the way one of my apps that was extremely S3 heavy, it basically shuffles a bunch of stuff

04:55 around and like on using S3 and some other stuff. And I wanted to change it. But before I changed it,

05:01 I'm like, well, let me fancy it up with all these types. And then it'll tell me whether I'm doing it

05:07 right or wrong and whatnot. So now if I have a function, I can say it takes an s3.Client and mypy

05:13 will say, no, no, no. You gave that an S3 service locator or whatever the heck. There's like all these

05:18 different things you can sort of get that will do similar, but not the same stuff. So yeah, anyway, fantastic,

05:24 fantastic addition, because this really should be coming from boto3. I just don't, I feel, you know,

05:30 maybe I was a little bit harsh on them at the beginning, but the reason I, it's like one of

05:34 these things where you, you write a function, you just say, well, take *args, **kwargs,

05:38 and you don't bother to write the documentation. You're like, well, how in the world am I supposed

05:42 to know what to do with this? Like there's, it could so easily help me. And it's just like, not right.

05:47 Like those could be keyword arguments with default values or whatever. So like, I feel like, you know,

05:53 a company as large as Amazon, they could probably justify writing like typed wrappers around these things

05:59 that really help people and help mypy and all these other like validation tools. But until then,

06:04 boto3 type annotations. Awesome. Yeah. Oh, and Dean also threw out really quick before we move on

06:11 to your next item, Brian, that boto types can literally, well, not literally save my life. Yes, I agree,

06:17 Dean. It's like, Oh, sorry. Did I like take down that EC2 machine? I didn't mean that. I wanted something

06:23 else. I want to delete the bucket. Sorry. Anyway. Awesome. Interesting. Literally.

06:27 Translate transition. Yes. Yes. Indeed. So yeah. So I want to cover code reviews. Brian,

06:35 you're such a romantic. So this was suggested by Miłosz, I think, and written by Michael Lynch. And it's

06:44 an article called how to make your code reviewer fall in love with you. And just, oh my gosh,

06:52 it's got great content, but the title, yuck. Maybe you're not a romantic. I mean, come on.

06:57 Well, I mean, I like my coworkers, but you know, anyway, even in the, in the article, it says,

07:06 it says even your reviewer will literally fall in love with you. Oh, they won't literally fall in love

07:13 with you. They might figuratively appreciate your code review. I mean, they may, but it could be an HR issue.

07:19 Yeah. Anyway. But I do want to cover it. There's, there's some really great tips in here. Cause

07:26 actually being nice to your, being nice to your reviewers will help you immensely. And one of the

07:33 things he covers is just value your reviewers time. And there's, and I just put a code review in this

07:39 morning, just to try this out, try some of these techniques. And it only takes like an extra 30

07:44 seconds, maybe a minute to do it right. And, and it saves everybody on your team time. So it's worth,

07:50 worth it. So let's cover a few of these. One of them is don't just check for mistakes. Imagine that

07:58 you're reading the code review for the first time. So you need to be the reviewer of your code first.

08:04 So that's, that's actually really important. And I encourage that with everybody on my team,

08:09 because there's times where the disc, you know, it just doesn't, there's stuff in there that's

08:13 not, it doesn't make sense. And why is that, why is that related to the thing? I guess we're, we'll

08:18 get there. Okay. Well, and you can also, you know, if you're in a rush, what you say can come across

08:23 feeling unkind or inconsiderate. And you're just like, I didn't really mean to be inconsiderate.

08:27 I just like, I've got four of these and I have 20 minutes. I just got to get it, you know,

08:30 but it, that's not how it's received. You know, it may be received really differently. So,

08:34 you know, from that perspective, right? Yeah. And even, even if the code review itself only takes

08:39 somebody a few minutes to review your code change, it's interrupted their, their day by a half an hour,

08:45 at least. So respect that entire time. One of the next suggestions is, write a clear change

08:52 list description. So, right. And, and, and he, he describes this a little bit. One of the

08:58 things is, it's not just what you changed, but it it's what your change achieves and why you made

09:05 the change. That's the, why is always way more important than what you did. I can look at the

09:09 code change. I should be able to look at the code change and know, know what you changed. So don't

09:14 describe that too much in the, in the list at the top. next, narrowly that I want to talk

09:19 about narrowly scope your changes. So, they can skip down. Here's what I did this week. Yeah.

09:26 Have a look. Yeah. now it's easy to do that. Like I haven't checked in for a while. So here's

09:32 what I did. Yeah. No, no, no, no. And actually this is something that I even caught myself doing

09:36 yesterday. I noticed that a test, really kind of needed refactored cause it, I needed

09:42 to add a test to a, to a test module. And there was, there was some, there was the way the entire

09:49 test module was arranged. I could rearrange the fixtures so that it would run like

09:54 three times faster. if I changed the setup and common setup and stuff like that, I really wanted

10:00 to do that, but that's not what I really needed to do. What I really needed to do was just add a test.

10:05 So I added the test and that code review went through this morning. And then today I'm going to do a

10:11 cleanup of trying to make things faster. So separating them is important. Also, another thing is,

10:17 separating, functional and non-functional changes. So you're like, in this case, you're in a,

10:23 you're adding a test to a module. You got like, you notice that the formatting is just a

10:29 nightmare. just write that down on your to-do list, either do that merge first, clean it up and

10:35 then merge it and then add your change or add your change and then clean it up. Do them in two merge

10:40 requests. It'll be a lot easier for people to figure out, break up large change lists. If you've got,

10:46 if you've been working for a while, maybe you should merge them in a few times, a few, you know,

10:51 in pieces. If it's, if it's like a thousand lines of code and 80 files, that's too big. That's just

10:56 way too big. and then there's, there's actually quite a few, chunks in there that talk about

11:02 basically being a nice person. So, respond gracious. I'm just going to pick out one, respond

11:08 graciously to critiques. And that's the hardest one for me. If somebody picks apart your code,

11:13 they're not attacking you. They're talking about the code and they want to own the code also.

11:17 So think about those as, as, as, as the reviewer wanting to, make the code theirs as

11:24 well as yours and try to respond well and don't get too defensive about it because fights in code

11:30 reviews are not fun. Yeah. And often there's a power differential, right? A senior person is reviewing

11:35 junior person's type of work. So that's always true. Yeah. Yeah. Yeah. For sure. Greg is someone

11:40 who's relative to say Brian and me a little bit newer at, at, at Python. what are your

11:48 thoughts on this code review stuff? I mean, I know you don't necessarily write a lot of code in

11:52 teams that gets reviewed, but you see this as helpful, stressful. Yeah. Yeah. It's, it's, it's important

11:59 to do the, if you have the interpersonal part of it, right? Like the, both they, they trust

12:04 each other, the, the, you know, the reviewer and the reviewee, it's going to go a lot more smoothly.

12:09 It's, it's, it's, we're in this together, a shared fate, and, and it'll go as opposed to,

12:15 conflict. it's going to, it's going to be much easier. Yeah, for sure. Brian, quick comment

12:20 from Magnus, I believe a code review should really review the current code, not just the diff

12:25 line. So the whole code comes out better after review. Yeah. Yeah, definitely. it depends

12:30 on how big it is, right? Like maybe like that little sub module or, or something, right? It could be

12:34 too massive, but yeah. Yeah. And actually this is, this is one of the times where I kind of put it on

12:39 the brakes and just say, you're right. We do need to fix that and, and put it on the to-do list, but

12:44 it shouldn't stop a merge just because, things are. Yeah. Brian, does your team do

12:49 internal PRs or do you just, do you just make changes? No, everything goes through a PR. Yeah.

12:54 I vary. Right. Sometimes I do some. All right. Greg, you're, you're up next on,

12:59 yeah. Yeah. Thank you. Speaking of repos and merges and PRs and all that stuff.

13:03 We thank Hector Munoz for sending, this suggestion in it, it started with a,

13:09 response to a blog, on Tidelift, by Tidelift about, Hey, if I'm making a decision on,

13:17 on which, library to use, how, how do, how could I, gauge the maturity of that library?

13:23 So, yeah, that's a question I get all the time from people like, Hey, I'm new to Python. I want to

13:28 know which library I should use. How do I know if the library is a good choice or a bad choice? And so

13:34 there's a lot of different metrics you might use, but maybe they're hard to find, right?

13:37 Exactly. So, Laurence Molloy made, made this, library Repodash available so that you can,

13:45 you can track, the metrics about, you know, that give a clear indication of the health of,

13:51 of the, project. You got your open issues over any timeframe. this, this actually captures it,

13:57 you know, with, within the time range that the user specifies. So how many items were open,

14:03 how many issues are open, how many closed in that timeframe is still open. And, it will give you

14:09 a much better feel for the level of maturity and, and, and, activity. yeah, this is cool.

14:16 Like how long issues have been sitting there open or, total number of open issues over time that,

14:23 like how fast they're being closed versus being opened versus unassigned. Yeah. All those kinds of

14:27 things are really important. Another one, probably in here somewhere, I just haven't seen it yet,

14:31 is, the number of PRs that are open. Like a real red flag to me is I go to a project and there's,

14:38 you know, significant number of PRs that are both open and maybe even not responded to. And they've

14:43 been there for like six months. You're like, okay, whoever's working on this, they've kind of lost

14:46 the love for it. Yeah. Yeah. And, yeah. And tying it together, it's, it's, might be the signal

14:53 of where you need code reviews if you're, if you're stuck somewhere. Yeah, that's right. I mean,

14:57 that's basically what a PR is. It's like a, it's waiting on a code review more or less. Yeah.

15:01 Yep. Yeah. Awesome. All right. Well, this is really cool. And I think it'll, it'll help people

15:05 who create repos or create projects, make sure that their repo is getting sort of the health of

15:11 what they're doing. But then also for people who are new or new to a project, they could quickly look

15:16 at it and go, red flags or, you know, green flags, which is it? Yeah. Yeah. Certainly. If

15:21 you're doing the things that are making your prod, your, your, it's all part of transparency.

15:26 This is, this is where we're the real deal over here on this team. Yeah. And they even have a cool

15:31 little categorization bar chart of the types of issues that are open, like feature requests versus,

15:36 good first issue versus bugs and so on. That's cool. So Brian, what do you think?

15:40 Well, I guess I don't know if you covered this already, but I'm a little lost. is

15:44 this a service or is that something I add to my repo? You know, I think it's something you

15:49 run, you point it at a repo and you run it. Okay. But that's my understanding. I don't totally.

15:55 Yeah. I haven't used it, but I believe so. Yeah. Yeah. So it's a command, a CLI thing. You

16:01 just point it at like some, some GitHub repo and you say, tell me how they're doing.

16:05 What I want to depend on this thing. Yes or no. No, I think that's cool. Like it. Yeah. You know what

16:10 else is cool? Sponsors. Sponsors that keep us going. Thank you. Thank you. And Linode is very

16:14 cool because, not only are they sponsoring the show, but they're giving, everyone a bunch of

16:20 credit, a hundred dollars credit for, just using our link. And, you know, you want to build

16:25 something on Kubernetes. You want to build some virtual servers or something like that. Here you go.

16:29 So you can simplify your infrastructure and cut your cloud bills in half. Linode's Linux virtual

16:33 machines, develop, deploy, and scale your modern applications faster and easier. And whether you're

16:39 working on a personal project or some of those larger workloads really should be thinking about

16:44 something affordable and usable and just focused on the job like Linode. So as I said, you'll get a

16:50 hundred dollars free credit. So be sure to use the link in your podcast player. You got data centers

16:54 around the world. it's the same pricing, no matter where you are, line up, tell them where your

16:58 customers are and you want to create your stuff there and you pay the same price. You also get 24/7/365

17:03 human support. Oh my gosh. I'm working on another, some, something else with someone else.

17:08 Allison, this would be so appreciated right now, but not, and if it was a Linode, they'd be helping me

17:13 out. But oh my gosh, don't get me on a rant about, other things. Anyway, you can choose

17:18 shared or dedicated compute and scale the price with your need and so on and use your a hundred dollars

17:23 credit, even on S3 compatible storage. How about that? You could, you know, use Boto, boto3 and the

17:29 type annotations, then change where it's going to point it over there. So yeah, if it runs on Linux, it

17:34 runs on Linode. So use pythonbytes.fm/Linode. Click the create free account

17:39 button to get started. So, Brian, I'm not covering two topics this week, like normal,

17:44 you know, because no, because I have so many, I can't even possibly deal with it. So it's all about

17:49 extra, extra, extra, extra, extra, hear all about it. Okay. The first one, you may know what a CVE is.

17:55 If it applies to your software, you don't like that. So, this sounds more scary than I believe it is,

18:01 but let me just do a quick little statement here. A reading from nist.gov: Python 3 up through

18:07 3.9.1, which was the latest version of Python until five days ago, has a buffer overflow

18:13 in PyCArg_repr in ctypes, which may lead to remote code execution. Remote code execution sounds

18:21 bad. That sounds like the internet taking my things and my data and other bad stuff. When you're accepting

18:26 a floating point number. Oh, wait a minute. a floating point number. Like I might get at a

18:31 JSON API. Somebody posts some data and here's my floating point number, but this one hacks my Python

18:37 web app with remote code execution. That sounds bad, right? Yeah. Yeah. Now it turns out the way it has

18:43 to be used. It's like, it's, it's a very narrow thing. It shouldn't send people's like hair on fire

18:47 running. Like, Hey, I've got to update the server. Right. But you should still probably update it. So

18:52 what do I do? I've logged into the various servers, Linux servers, Ubuntu latest version of Ubuntu that I

18:58 want. And I say, Oh my goodness. I heard about this. Please update, you know, do an apt update.

19:04 There better be an update for Python 3. Oh no, no. There's no update for Python 3. In fact,

19:10 it's still running 3.8.5 where this was fixed in 3.8.8 or something like

19:15 that. And a week's gone by and there's still no update for Python on Ubuntu by default. Now,

19:20 what I can do is I can go to this like place that seems semi-official, but not really official

19:24 called deadsnakes and add that as a package manager endpoint for apt. But I don't really

19:29 want to do that either. That sounds like maybe even worse than running old Python. So that sends me down

19:35 item number two of my, of my extra, extra, extra, extra, extra. And that is building Python from source

19:41 on Ubuntu. Because, I really don't want to be running the old Python in production,

19:48 even if it is unlikely, you know, unlikely. Yourself over deadsnakes? Okay. I, well,

19:54 no, what I originally wanted to do maybe yes, but originally what I wanted to do was use pyenv because

20:01 pyenv lets you install all sorts of different versions, right? Yeah. Yeah. Well, the only one

20:05 available that was 3.9 was 3.9.1, which was the one with the bug still.

20:09 And then locally I use homebrew on my machine and it just updated yesterday. I think it was,

20:14 but it was a little bit behind, but that's updated. So yeah, I guess I do. anyway,

20:19 so I've found a cool article that walks you through all the building, the stuff. And then, the thing

20:24 that makes me willing to try this and trust this, but also related to the next extra, extra, extra is

20:29 you can go instead of doing make install, which is the compile stuff takes a while, but then magic

20:35 Python comes out the other side, you can say make altinstall. And what it'll do is it'll install the

20:40 version of Python under like a version name. So I can type Python 3.9 and get Python 3.9.2 with no

20:48 vulnerabilities. But if I just type Python or Python 3, it's just the system one. So that one didn't seem

20:52 too dangerous to me. Yeah. And then I just create a virtual environment for my stuff that runs on the

20:57 server. python3.9 -m venv, create that. And then off it goes. And then it's just running this,

21:04 this one from here. So, pretty good. that worked out quite well. So anyway,

21:08 I've been doing that for a week and the world hasn't thrashed or blown up or anything. So

21:12 apparently this works. One has, yeah. One heads up though, is like, I have a bunch of machines that

21:16 are all the same version of Linux. They all seem to have different dependencies and ways of dealing

21:21 with this. Like one said, Oh, the SSL module is not installed as a system library, like apt install

21:26 libssl type thing. Another one, it had that, but it didn't have some other thing,

21:30 some other aspect that I forgot, but like, they all seem to have different stuff that you also got to

21:34 add in. So that was a little bit wonky in the beginning, but it's all good now. All right.

21:38 That's extra number two. extra number three really probably should have preceded that because

21:44 to make all that work, I wanted to make sure that I had it just right. And so I wanted to do this

21:49 on Ubuntu 20.04 LTS. And yet I cannot run Docker, which is exactly the place where you would

21:57 do this sort of thing to test it out. I couldn't do Docker on my Apple M1. Oh no. Okay. Now Docker

22:03 says it runs. Docker says you can run a Docker prototype on your Apple M1, but I've installed it.

22:09 And all that does is sit there and say, starting, starting, starting, starting, starting,

22:12 indefinitely. And it will never run. I've uninstalled it. I've done different versions of

22:16 it. Like it just won't run. people that were listening said, Oh, what you got to do is you

22:20 probably installed parallels or this other thing. And it caused this problem and he could fix it this

22:24 way. Like, Nope, the problem isn't there. Cause I didn't install any of those things. I can't change.

22:27 So long story short. I, go ahead. No, I was just laughing. Yeah. Yeah. And so what I ended up doing

22:33 is I saw a really cool trick, not trick technique. I put this in the show notes. You can just say,

22:39 basically two lines on the command line prompt or do a Docker to say, you know what,

22:45 if you want to just do Docker stuff, don't do it here, do it over there. And so I have my Intel,

22:49 MacBook pro that can, that's running, Ubuntu and a virtual machine. So I just turned that on.

22:55 And I just said, Docker context, create that thing over there. And then Docker context use. And after

22:59 that, every Docker command without thinking about it, remember it just automatically runs over on that

23:05 machine. And I know it's working because my Mac, my M1 Mac mini, is super quiet. You'd never hear it or anything. But when I work with Docker, I can hear the thing

23:13 grinding away over in the corner. So that's, I know it's working. All right. Really quick. I know

23:17 I'm running low on time. The last one is people have heard me whinge on about Dependabot and how it's

23:22 such a pain. And I'm sure they're thinking like, Oh, Michael, why are you whinging about this? Why are

23:25 you like just complaining? You know, it's, it can't be that bad. Yeah. So look what is on the screen

23:30 here. Depend upon merge conflict with itself. Like, so these are the things I have to do on Monday

23:37 morning. I have to log in and it says there's a merge conflict. Depend about put cryptography

23:42 equal, equal three, four, six, when it had unchanged for months, cryptography equal, equal three dot

23:49 four dot three. It's like though it's one line. It it's, it's conflicting with itself. Like this is

23:54 crazy. So anyway, this is not a big deal, but people are like, why does Michael keep complaining

23:59 about depend upon merges? Like, cause I have to go like the one line. It changes merges with

24:03 itself. Like this is not product. All right. Oh no, we're not looking at that one yet. That's

24:07 for later. All right. I guess that's it. Oh, final shout out though. I'll put this in the link in the

24:11 show notes. Anthony Shaw along with one of his coworkers, whose name I'm sorry, I forgot, built a

24:15 GitHub bot that will automatically merge all those things for you, specifically for Dependabot.

24:20 So I'll cover that more later when he writes it up, but he did like a little shout out about it

24:23 on Twitter. So I'll link to that since it's related. Yeah. That was a lot of extras. Yeah. I got a short one.

24:29 It's an extra tool also. So it's also about Docker. So yeah, yeah. This is quite related. Nice follow

24:34 on. So, Josh Peak suggested, and I'm not sure what he was listening to, but he was just

24:40 wondering if we'd heard about it. One of the things people talk about with testing

24:45 is whether or not they should mock or stub activities to the database. And even if,

24:51 and then I've, you know, I've talked with a lot of people about that. And even if you've got a

24:56 database that has an in-memory setup, so you can configure it to be in memory

25:01 during your testing and stuff, it's still a different configuration. So, one of the

25:06 suggestions that we've gotten from a lot of people is, stick your database in a Docker

25:10 container and then test it. And then Josh Peak suggested this library called

25:16 testcontainers-python. And this is slick. I mean, this thing really is. You've got,

25:23 you just install this thing and, so it covers, what, Selenium Grid containers,

25:29 standalone containers, MySQL database containers, MySQL, MariaDB, Neo4j, Oracle DB,

25:36 Postgres, Microsoft SQL Server even, wow. And then just normal Docker containers.

25:41 Yeah. It also even does a MongoDB, even though it's not listed. I saw some of the examples that

25:45 had Mongo as well.

25:46 Oh, that's great. I was, I was curious about that. So after you install this thing,

25:50 you can just, it provides context managers. It probably has other stuff too. I didn't read all

25:55 of it, but this is just really not that much code to create a Docker container that you can

26:01 connect to and fill your dummy data in or whatever.

26:05 I love it. It's like, I want to, I want to use Docker to help test stuff in isolation,

26:11 but I don't want to know about Docker or be able to use Docker or care about Docker. Right.

26:15 Right. So what it gives you is a SQLAlchemy-friendly URL that you can

26:23 just connect to with SQLAlchemy or whatever. You just get this

26:31 URL out. So if you're configuring where your database is through a URL, you

26:37 can throw that in whatever configuration, environment variable, or whatever, and run your tests with

26:43 that. And it's pretty neat. That's so cool. Yeah. Just with MySqlContainer, give it some

26:47 connection string you want or some, like, host address or whatever, as mysql. And then you just,

26:53 off you go, right? Just the Docker thing exists while the context is open.
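
A minimal sketch of the pattern described here, assuming the `testcontainers` package with its MySQL support, `sqlalchemy`, a MySQL driver, and a running Docker daemon are all available. `run_against_database`, `demo`, and `server_version` are hypothetical names introduced for illustration; `MySqlContainer` and `get_connection_url()` follow the project's README, and the image tag is only an example.

```python
def run_against_database(container, work):
    """Start the container, hand its connection URL to `work`, then stop it."""
    # Entering the context starts the container; leaving it removes the container.
    with container as running:
        return work(running.get_connection_url())


def demo():
    """Hypothetical demo; needs Docker, testcontainers, sqlalchemy and a MySQL driver."""
    # Imported lazily so the helper above can be used without these packages.
    import sqlalchemy
    from testcontainers.mysql import MySqlContainer

    def server_version(url):
        engine = sqlalchemy.create_engine(url)
        with engine.connect() as connection:
            return connection.execute(sqlalchemy.text("select version()")).scalar()

    # The image tag is an example; any MySQL image should do.
    return run_against_database(MySqlContainer("mysql:5.7.17"), server_version)
```

Calling `demo()` on a machine with Docker should return the server's version string. The container only exists while the `with` block is open, which is the behavior discussed above.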

26:57 Yeah. And I didn't specifically see any documentation in here talking about pytest, but if anybody's curious,

27:02 I'm sure it'll work with that because, even if you have to write your own fixture,

27:07 you can return context manager items from a fixture. So that'll work.

27:13 Yeah. Yeah. Yeah. Super cool. You know, I was, that's exactly what I was thinking when you were

27:17 talking about as a pytest fixture that maybe loads it and then fills it with test data and then hands

27:22 it off to the test or something like that. Yeah. Yeah. Greg, what do you think?

27:25 I like it.
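
The fixture idea from this exchange could look roughly like the sketch below. It is not taken from the testcontainers documentation: `seeded_url`, `load_test_data`, and `database_url` are hypothetical names, the Postgres image tag is an example, and it assumes `pytest`, `testcontainers` with Postgres support, and Docker are installed.

```python
import pytest


def load_test_data(url):
    """Hypothetical placeholder: insert whatever rows your tests need."""


def seeded_url(container, seed):
    """Start the container, seed it, yield its URL, and stop it afterwards."""
    with container as running:
        url = running.get_connection_url()
        seed(url)
        yield url  # the container is torn down once the generator is resumed


@pytest.fixture(scope="session")
def database_url():
    # Imported lazily so collecting tests does not require testcontainers.
    from testcontainers.postgres import PostgresContainer

    yield from seeded_url(PostgresContainer("postgres:13"), load_test_data)
```

A test would then take `database_url` as an argument and pass it to whatever builds its database connection. Session scope is a design choice here so that the container starts once per test run rather than once per test.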

27:26 Yeah. That's neat. Right. Hey, I got a quick, a quick follow-up from the last one. Magnus on the

27:30 live stream asks, will using Pydantic mitigate the floating point overflow bug? Using Pydantic

27:36 definitely makes exchanging JSON data really nice and does some validation, but I suspect it probably

27:40 doesn't. That said, you know, I really wish I could find this conversation. There was a

27:45 conversation with Dustin Ingram and I think Brett Cannon talking about this and how it's really not

27:49 that severe because I believe you got to take the input and directly hand it off at the C layer in

27:55 Python. Like, passing it to float() in Python, I don't think, is enough to trigger it. You've got to

28:00 like go down into something like NumPy or something super low level. So it's not as dangerous, but you

28:05 know, there's a lot of things that use the C layer. So who knows what's going on down there? So

28:09 that's why I'm building from source for the moment. Anyway, I should also throw out there really quick.

28:13 I was also just frustrated that the latest version I can get is 3.8, which is over a year old.

28:18 And I was like, why am I on a year old version of Python when I could just take an hour and be on

28:22 the new version of Python? There's more to it than just the bug. All right. I guess, Greg,

28:26 we'll throw it back to you for this last one. Don't have a graph. Yeah. Yeah.

28:29 Thank you. The context on this was, I had been in data science in pretty much the

28:37 proprietary world. So proprietary software, using SQL Server and Tableau and Cognos and

28:44 those different tools. We're a Bay Area based company. We started noticing that

28:49 customers were leaving that proprietary world and going to Python. And

28:56 that actually is one of the things that led me myself to start going in and understanding

29:01 the industry. And just in the time that I've been with Talk Python, which is just

29:06 shy of a year now, I'm seeing a relentless march towards more and more adoption in the

29:13 Python ecosystem for businesses that had traditionally always relied on proprietary software. And

29:21 it's reaching top of mind to a level that I didn't expect, that it was

29:28 going to happen so fast. You know, you followed the Geoffrey Moore adoption, you know,

29:33 the early adopters and then hitting Main Street. This one is moving really fast. We're seeing,

29:40 like, some of the largest corporations in the world looking at Python as a means of

29:47 moving away from Excel even. And it's just, it's reaching top of mind because more

29:54 and more decision makers are hearing from their technology teams that they can deliver solutions

29:59 at unprecedented price performance. And, that's always going to talk.

30:03 Well, you weren't, you were talking this realm, like we should talk Gartner, right? So there was

30:06 a Gartner study, about why companies are moving to open source. And it was really interesting

30:12 because a lot of people say, well, you've got to move to open source because it doesn't cost money.

30:15 So it helps the bottom line. And so many of the companies that were interviewed by Gartner were

30:19 like, it has nothing to do with price. I mean, price, it's a benefit. We'll take paying less.

30:24 That's fine. But this is about higher quality, higher visibility and so on. And I think that's a

30:29 real interesting inherent advantage in the community. Right. And in the case

30:34 of Excel, you're hitting up against limitations in Excel, you know, the size limitations

30:40 most notably, and now you're able to handle it. It happens to be open source, the solution,

30:45 but really, the pain was the limitations, and now you're able to do without it.

30:51 There's got to also be maintenance too, because, I mean, sometimes I've heard Perl referred to as

30:56 a write-only language, but it's got regular expressions. Yeah.

31:00 Yeah. It's, it's got nothing over trying to edit somebody else's spreadsheet full of macros.

31:07 Right. Oh yeah. Yeah. If they put some VBA in there, it's the kiss of death for sure.

31:11 Yeah. That's like, those are like goto statements. It's insane.

31:14 Yeah. And so what we're seeing is, you know, even though it feels like there's heavy

31:20 adoption, it's still a relatively small inroad compared to what we're going to see in the future.

31:25 It's like water rapidly collecting behind a weak dam. And we've seen that happen in the industry before.

31:34 I think that's a really great thing to highlight, Greg. I talked with Mahmoud Hashemi,

31:38 who at the time was at PayPal, about Python for enterprise software development.

31:43 I think this is the fourth episode of Talk Python. It was certainly right at the beginning in 2015.

31:48 I remember that one.

31:49 Yeah. And, it was like a big question. Like, well, does it make sense? Should people be using

31:54 Python for this company stuff? And now it just seems, yeah, I mean, it seems just

31:59 like so obvious. There's one thing I was actually going to cover, and I'll cover it again in

32:03 more depth, because I had so many extras already. So I made room, but one of the interesting things is that

32:08 Google came on to sponsor the PSF at, they say, they probably don't say this is like a friendly one,

32:13 but there was another, article. This is just the sort of fresh release from the PSF,

32:17 but they came on and they're now sponsoring, the PSF as a visionary sponsor, which I think is

32:23 over 300,000 in terms of how much there's, and they're also sponsoring a core developer,

32:30 particularly for things around like security and PyPI and whatnot. So a lot of interesting stuff.

32:35 I'll come back to that later, in another show, but yeah, it seems worth giving a little

32:39 shout out about that. Yep. And then a quick comment, Greg, from Magnus. I read an

32:44 article about the re, the reinsurance industry also moving in from Excel to Python. Yeah. I can

32:49 imagine. Awesome. Thank you, Magnus. Yeah. All right. I guess that's it for our items. Now,

32:53 Brian, how about some extras? Well, I know that you've been using, you've been using Firefox

32:59 for a while, right? I did notice over on, your stream that looks a little Firefoxy over there.

33:04 What happened, man? Yeah. So the thing that convinced me, is this announcement thing.

33:09 They just released Firefox 86 and it's got this, enhanced cookie protection. And I don't

33:15 understand the gist of it, but mostly it's, it seems like, they just said, you know,

33:21 whatever site you're on, they can, you, cause you know, sometimes I've heard people say I turn off

33:26 cookies. Well, like sites don't work without, cause some of them just don't. Yeah. You want to log in?

33:30 Well, you're going to need a cookie. Yeah. So, or just saving stuff. I don't want,

33:35 there's times where, there's nothing private there, I just don't want to log in

33:38 every time, but I don't want you to share it with other people either. So this

33:42 enhancement is just, keep the site's cookies to themselves. So they have like a cookie jar or a

33:47 storage area for cookies that's individual to each site. And you can save as many as you want for your

33:54 site. And then another site gets another one. And there's the obvious, like you

33:59 were saying, login stuff, if you use, you know, different login providers. There is an exception for that.

34:05 So you can use login providers and it allows that, but these are non-tracking

34:11 cookie uses. So yeah, I'm super excited about this as well. Basically, if you were to go to CNN.com

34:19 and then you were to go, I'm not for sure about this, right. But likely then you were to go to

34:24 The Verge and then you're going to go to chewy.com and buy something for your pet. Like very likely they're

34:30 using some ad network that's put a cookie that knows you did that sequence of events. And Oh,

34:35 by the way, you're logged in as so-and-so over on that one. So, and all the other sites, we now know

34:38 that so-and-so is really interested in chew toys for a medium-sized dog, but a puppy, not a, not a

34:44 full-grown. Right. You know, and it gets to the point where people think, Oh, well, all these

34:48 things are listening to us on our phone, but they just like track us so insanely deeply. And so the idea

34:54 is, yeah, let that third party thing set a cookie. But when they get to chewy.com from CNN.com

35:00 and they ask for the cookies there, like, yeah, sure. You can have your third party cookie, but it's a

35:04 completely unrelated brand new one. As if you like deleted your history and started over, which

35:09 is beautiful. I'm super excited about this as well. Yeah. And, Robert Robinson says that CNN better

35:14 not try to sell him. I'm with you, man. I'm with you. Doggy toys from the doggy toy site news from the

35:22 news site. Sometimes they're hard to tell apart, but you never know. Stay in your lane.

35:26 Stay in your lane. All right. yeah. So that was the one thing you wanted to cover, right?

35:30 Yeah. Yeah. I did my extra, extra, extra, extra. So I've already covered that. So I feel like,

35:35 Greg, anything you want to throw out there before we, move on to a joke?

35:38 No, it can't get in the way of a joke. No, I know this is good. So sometimes we, we find an interesting

35:45 joke or a funny thing out there. And sometimes we strike gold, right? Like Brian, pyjokes. I mean,

35:51 pipx install pyjokes. Come on. Like the CLI is now full of dad developer jokes. Well,

35:56 I kind of feel like I got one of those here as well. So there's this place called,

36:01 article called 56 funny code comments that people actually wrote. Nice. I don't want to go through

36:06 56, but I feel like we may revisit this. So I want to go through four here. Okay. I linked to the real

36:11 article in there, but I pulled them out separately. So I'm showing on the screen here. Like I'll,

36:16 I'll read the first one and we can take turns reading. There's only four or five here.

36:20 So the first one is a big, like, header at the top of a function, in a comment. It says,

36:24 dear maintainer, once you're done trying to optimize this routine and you've realized what a terrible

36:29 mistake that was, please increment the following counter as a warning to the next guy. Total hours

36:35 wasted here equals 73. Is that awesome or what? Yeah. Oh man. That's beautiful. Isn't it? Yeah. I've had,

36:48 I've had code where, like, one out of every five developers that gets to it says,

36:53 Oh, I think we can make this cleaner. And they don't. Nope. They just make it stop working. Then

36:58 they have to fix it. And then it goes back like it was. All right, Brian, you want to do the next one?

37:01 Sure. Sometimes I believe compiler ignores all my comments. Huh?

37:06 That's a comment. Sometimes I believe the compiler ignores all your comments. Like probably all the

37:15 time. Hopefully. Oh, this next one's my favorite. Yeah. All right. Greg, that was you. Great. Drunk,

37:22 drunk. Fix later.

37:27 I can totally see that one. Honesty. Honesty. also this one is nice. Probably this came from

37:35 Stack Overflow and a partial level of understanding. The comment is magic. Do not touch. Yeah,

37:40 definitely. Yeah. Brian, you want to round us out with this last one? Because sometimes the best part

37:45 about comments is if they're accurate or not. If they're wrong? Yeah. I've heard people refer to

37:49 comments as future lies. And this one, there's a routine, it returns

37:56 a Boolean. It's called is available. And it returns false. It's just a single statement, return false,

38:02 with a comment that says always returns true. I love it. I'm telling you, there's going to be a lot

38:10 of good jokes coming from this, this article here. So yeah, pretty good. All right. Well,

38:14 thank you, Brian, as always, Greg, thank you for being here. Thank you for having me. Yeah,

38:18 It was definitely great.

38:19 And thanks everyone for listening.