#235: Flask 2.0 Articles and Reactions


About the show

Sponsored by Sentry:

- Sign up at pythonbytes.fm/sentry

- And please, when signing up, click "Got a promo code? Redeem" and enter PYTHONBYTES

Special guest: Vincent D. Warmerdam (koaning.io), Research Advocate at Rasa and maintainer of a whole bunch of projects.

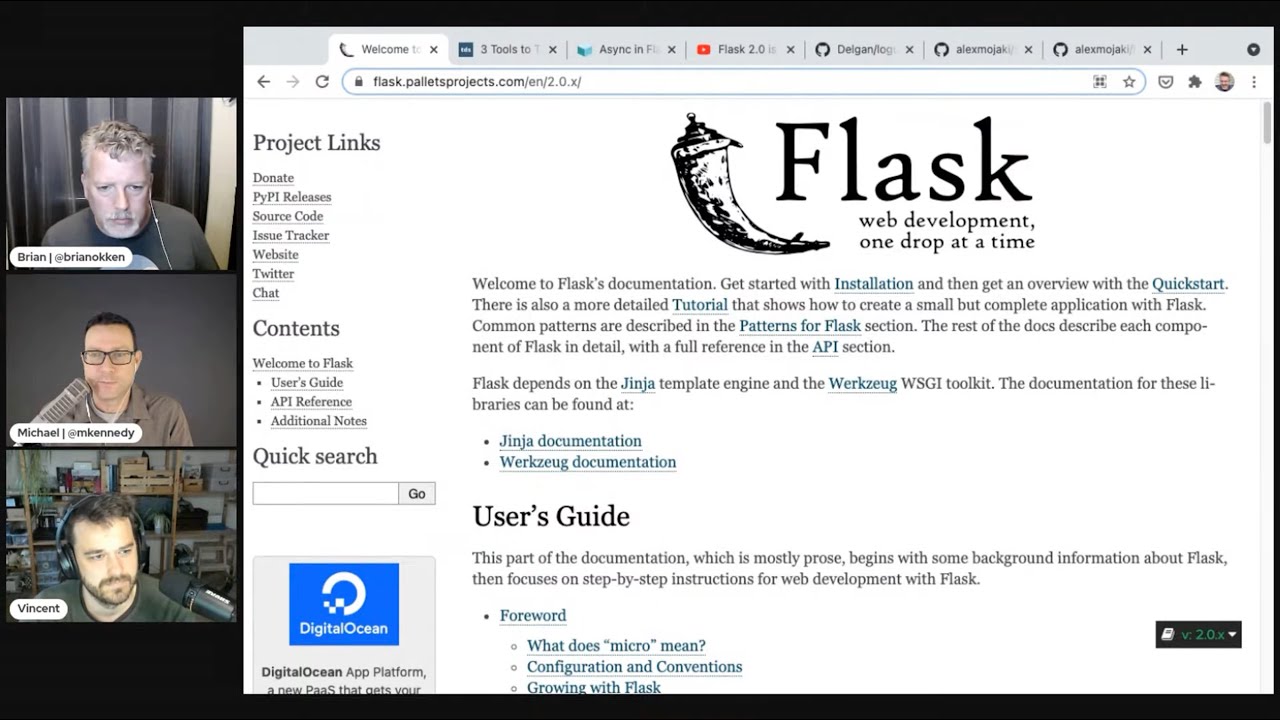

Brian #1: Flask 2.0 articles and reactions

- Change list

- Async in Flask 2.0

- Patrick Kennedy on testdriven.io blog

- Great description

- discussion of how the async works in Flask 2.0

- examples

- how to test async routes

- An opinionated review of the most interesting aspects of Flask 2.0

- Miguel Grinberg video

- covers

- route decorators for common methods,

- e.g., `@app.post("/")` instead of `@app.route("/", methods=["POST"])`

- web socket support

- async support

- Also includes some extensions Miguel has written to make things easier

- Great discussion, worth the 25 min play time.

- See also: Talk Python Episode 316

Michael #2: Python 3.11 will be 2x faster?

- via Mike Driscoll

- From the Python Language summit

- Guido asks "Can we make CPython faster?"

- We covered the Shannon Plan for speedups.

- Small team funded by Microsoft: Eric Snow, Mark Shannon, and Guido himself (the team might grow)

- Constraints: Mostly don’t break things.

- How to reach 2x speedup in 3.11

- Adaptive, specializing bytecode interpreter

- “Zero overhead” exception handling

- Faster integer internals

- Put `__dict__` at a fixed offset (-1?)

- There’s machine code generation in our future

- Who will benefit

- Users running CPU-intensive pure Python code

- Users of websites built in Python

- Users of tools that happen to use Python
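One way to check whether your own code falls in the "CPU-intensive pure Python" group is to time a hot loop under two interpreter versions and compare. A minimal sketch: `sum_of_squares` is a hypothetical stand-in for your own workload, and whatever speedup you see depends entirely on the versions you compare, not on the 2x target above.

```python
import sys
import timeit


def sum_of_squares(n: int) -> int:
    """Pure-Python, CPU-bound loop: the kind of code the interpreter work targets."""
    total = 0
    for i in range(n):
        total += i * i
    return total


if __name__ == "__main__":
    # Take the best of 5 repeats (10 calls each) to reduce noise from other processes.
    best = min(timeit.repeat(lambda: sum_of_squares(100_000), number=10, repeat=5))
    print(f"Python {sys.version.split()[0]}: {best:.3f} s per 10 calls")
```

Run the same file with each interpreter you have installed and compare the printed timings. Code that spends most of its time inside C extensions or waiting on I/O will show much smaller differences.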

Vincent #3:

- DEON, a project with meaningful checklists for data science projects!

- It’s a command line app that can generate checklists.

- You can customize the checklists.

- There’s a set of examples (one for each check) that explain why the checks matter.

- Made a little course on calmcode to cover it.

Brian #4: 3 Tools to Track and Visualize the Execution of your Python Code

- Khuyen Tran

- Loguru — print better exceptions

- we covered in episode 111, Jan 2019, but still super cool

- snoop — print the lines of code being executed in a function

- covered in episode 141, July 2019, also still super cool

- heartrate — visualize the execution of a Python program in real-time

- this is new to us, woohoo

- Nice to have one person’s take on a group of useful tools

- Plus great images of them in action.
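To get a feel for what a line tracer like snoop is doing, here is a rough, stdlib-only sketch built on `sys.settrace`. It is not snoop's implementation or API, and its output is far plainer; `trace_lines` and `countdown` are hypothetical names used only for illustration.

```python
import sys
from typing import Any, Callable, List, Tuple


def trace_lines(func: Callable[..., Any], *args: Any) -> Tuple[Any, List[str]]:
    """Call func(*args) and record every line of func that runs, with its local variables."""
    log: List[str] = []
    target = func.__code__

    def tracer(frame, event, arg):
        # Returning None for any other function's frame turns tracing off for it.
        if frame.f_code is not target:
            return None
        if event == "line":
            # Locals as they are just before this line executes.
            log.append(f"line {frame.f_lineno}: {dict(frame.f_locals)}")
        return tracer  # keep tracing this frame

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active before
    return result, log


def countdown(n: int) -> str:
    while n > 0:
        n -= 1
    return "done"


if __name__ == "__main__":
    result, log = trace_lines(countdown, 3)
    for entry in log:
        print(entry)
```

Each pass through the loop produces new log entries with the updated value of `n`, which is the "see every state change without memorizing it" benefit discussed in the episode.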

Michael #5: DuckDB + Pandas

- via Alex Monahan

- What’s DuckDB? An in-process SQL OLAP database management system

- SQL on Pandas: After your data has been converted into a Pandas DataFrame often additional data wrangling and analysis still need to be performed. Using DuckDB, it is possible to run SQL efficiently right on top of Pandas DataFrames.

Example:

```python
import pandas as pd
import duckdb

mydf = pd.DataFrame({'a': [1, 2, 3]})
# DuckDB resolves the table name "mydf" to the Python variable above
print(duckdb.query("SELECT SUM(a) FROM mydf").to_df())
```

When you run a query in SQL, DuckDB will look for Python variables whose name matches the table names in your query and automatically start reading your Pandas DataFrames.

- For many queries, you can use DuckDB to process data faster than Pandas, and with a much lower total memory usage, without ever leaving the Pandas DataFrame binary format (“Pandas-in, Pandas-out”).

- The automatic query optimizer in DuckDB does lots of the hard, expert work you’d need in Pandas.

Vincent #6:

- I work for a company called Rasa. We make a Python library for building virtual assistants, and there are a few community projects. There are a bunch of cool showcases, but one stood out when I was checking our community showcase last week: a project that warns folks about forest fire updates over text. The project is open source on GitHub, and there’s also a GIF demo.

- Amit Tallapragada and Arvind Sankar observed that in the early days of the fires, news outlets and local governments provided a confusing mix of updates about fire containment and evacuation zones, leading some residents to evacuate unnecessarily. They teamed up to build a chatbot that would return accurate information about conditions in individual cities, including nearby fires, air quality, and weather data.

- What’s cool here isn’t just that Vincent is biased (again, he works for Rasa); it’s also a nice example of grass-roots impact. You can make a lot of impact if there are open APIs around.

- They host a scraper that scrapes fire/weather info every 10 seconds. It also fetches evacuation information.

- You can text a number and it will send you up-to-date info based on your city. It will also notify you if there’s an evacuation order/plan.

- They even do some fuzzy matching to make sure that your city is matched even when you make a typo.
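The notes don't say which fuzzy-matching technique the project uses. As a hedged illustration of the idea, the standard library's `difflib.get_close_matches` can map a misspelled city to the closest known name. The city list, the `match_city` helper, and the 0.8 cutoff are all hypothetical choices for this sketch.

```python
from difflib import get_close_matches
from typing import Optional

# Hypothetical list of supported cities; a real bot would load this from its data source.
KNOWN_CITIES = ["Sacramento", "San Francisco", "San Jose", "Santa Rosa", "Paradise"]


def match_city(user_text: str, cutoff: float = 0.8) -> Optional[str]:
    """Return the closest known city for user_text, or None if nothing is similar enough."""
    # Compare case-insensitively, then map back to the canonical spelling.
    lowered = {city.lower(): city for city in KNOWN_CITIES}
    matches = get_close_matches(user_text.strip().lower(), list(lowered), n=1, cutoff=cutoff)
    return lowered[matches[0]] if matches else None


if __name__ == "__main__":
    print(match_city("Sacremento"))
    print(match_city("Tokyo"))
```

Raising `cutoff` makes the bot stricter (fewer wrong guesses, more "I don't know that city" replies); lowering it tolerates worse typos at the risk of matching the wrong place.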

Extras

Michael

Vincent: Human-Learn: a suite of tools to have humans define models before resorting to machines.

- It’s scikit-learn compatible.

- One of the main features is that you’re able to draw a model!

- There’s a small guide that shows how to outperform a deep learning implementation by doing exploratory data analysis. It turns out, you can outperform Keras sometimes.

- There’s a suite of tools to turn python functions into scikit-learn compatible models. Keyword arguments become grid-search-able.

- There’s a tutorial on calmcode.io for anybody interested.

- Can also be used for Bulk Labelling.
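Human-Learn ships its own wrappers for turning functions into models; the sketch below is not its API. It is a plain scikit-learn illustration, under the assumption that scikit-learn and NumPy are installed, of why exposing a function's keyword arguments as estimator parameters makes them grid-search-able. `rule`, `RuleClassifier`, and the toy data are hypothetical.

```python
import numpy as np
from sklearn.base import BaseEstimator, ClassifierMixin
from sklearn.model_selection import GridSearchCV


def rule(X, threshold=0.5):
    """Hand-written model: predict class 1 when the first feature exceeds threshold."""
    return (X[:, 0] > threshold).astype(int)


class RuleClassifier(ClassifierMixin, BaseEstimator):
    """Wraps `rule` so its keyword argument becomes a scikit-learn parameter."""

    def __init__(self, threshold=0.5):
        self.threshold = threshold

    def fit(self, X, y):
        # Nothing to learn: a human wrote the rule. Record the classes as sklearn expects.
        self.classes_ = np.unique(y)
        return self

    def predict(self, X):
        return rule(np.asarray(X), threshold=self.threshold)


# Toy data: the label flips between 2.0 and 3.0 on the first feature.
X = np.array([[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# Because `threshold` is an __init__ parameter, GridSearchCV can tune it like any hyperparameter.
search = GridSearchCV(RuleClassifier(), {"threshold": [0.5, 2.5, 4.5]}, cv=3)
search.fit(X, y)
print(search.best_params_)
```

The design choice that matters is storing the keyword argument unchanged in `__init__`: scikit-learn's `get_params`/`set_params` read it from there, which is what lets cross-validation and grid search treat a hand-written rule like a trained model.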

Joke

Episode Transcript


00:00 Hello and welcome to Python Bytes, where we deliver Python news and headlines directly to

00:04 your earbuds. This is episode 235, recorded May 26, 2021. And I'm Brian Okken.

00:11 I'm Michael Kennedy.

00:12 And I'm Vincent Warmerdam.

00:13 We talked about Vincent a while ago and got his name wrong. And he told us a story that was good,

00:19 that we accidentally pronounced his name, what, Wonderman.

00:25 Yes.

00:26 So sorry about that.

00:28 That's fine. It's fine. I was bragging to my wife that I was on a podcast and then

00:32 I was announced as Vincent Wonderman and she's still kind of philosophical about the whole thing.

00:36 But it was a fun introduction. It's the best mispronunciation of my life. Let me put it that

00:42 way.

00:42 It's your alter ego. It's like your spy name.

00:45 I'll take it.

00:47 Well, thanks for joining us today.

00:49 My pleasure.

00:50 Should we jump into the first topic?

00:52 Sure.

00:53 Okay. Well, I think we covered, we mentioned last time that Flask 2.0 was out. And then Michael had, you talked with somebody, didn't you?

01:04 I did. I had David Lord and also Philip Jones on Talk Python to basically announce Flask 2.0 and talk about all their features.

01:17 Yeah. And that was a great episode. I listened to both those. I listened to that. It was great.

01:22 What I wanted to cover was a couple articles or an article and a video. So first off, we've got a link to the change list. So if I actually lost the change list. Yeah, there it is. So you can read through that. And maybe that's exciting to you. But I like a couple other ways. So there's an article by Patrick Kennedy.

01:45 I think that's a good thing. I like that. And then there's a description of ASGI and why we don't need it yet.

02:10 And I'm not sure that may, I'm not sure what the framework, the timeline is for Flask, if they're going to do it more. But there is a discussion of that. It's not completely async yet.

02:22 There was a lot of discussion with David and Philip that they may be leaving Quart to take the place of full-on ASGI Flask. And the idea being that there's a lot of stuff that kind of has to change, especially around the extensions. And you get nearly that, but not exactly that, by using the gevent async stuff that's in regular Flask.

02:45 And that integrates in, if you just do an async def method in your regular Flask. But if you want true asyncio integration, then they basically were saying for the foreseeable future, instead of importing Flask and going with that, just import Quart. And wherever you see Flask, replace it with the word Quart.

03:03 Okay. But there's other cool stuff other than the async that's coming into Flask 2.0. So I appreciate it. There's also a video from, we don't want it to play, from Miguel Grinberg.

03:17 And talking about some of the new stuff in Flask. And I really like this. One of the things that he covers right away is the new route decorators.

03:29 Yeah, those are nice.

03:31 Might be just a syntax thing, but it's really nice. So you used to have to say app route, and then methods equals post, or list the method. And now you can just say app post. That's nice.

03:43 And then a really clean discussion of the WebSocket support with Flask. And then he goes in to talk about the async. And with that also does a little demo timing it. And I was actually surprised at how easy it was to set up this demo of timing.

04:03 And showing that he showed that you could increase the users and then still get, it doesn't really increase your response time or how many users per request per second doesn't increase because of the way that Flask 2.0 was done.

04:21 But it was nice. And then he also talked about some of the extensions that he wrote that work with Flask 2.0 and stuff. So it was definitely worth the listen.

04:31 Oh, that's always cool. That's always the thing when you get like, Flask is like a pretty big project. So when there's like a new upgrade of that, one of the things that people sometimes forget is like, oh, like all the plugins, do they kind of still work?

04:41 So it's nice if someone does a little bit of the homework there and says, well, here's a list of stuff that I've checked and that's at least compatible.

04:47 Well, he's mostly doing some, so for instance, one of the things is around which, I don't know, which, just some of the WebSocket stuff has changed and some of the other things have changed.

04:59 And he has like some more, some different shims that he was recommending some things before, but now you don't have to do, you don't have to swap out some things.

05:07 So like, for instance, some of the extensions that were allowing for WebSockets required you to swap out the server for a different server, and you don't have to anymore.

05:17 So like that.

05:18 Okay.

05:19 All right, cool.

05:20 Yeah.

05:20 A couple of big other things that come to mind.

05:23 One, they've dropped Python 2 support and even 3.5 and below.

05:27 I mean, we're at this point where 3.5 is like old school legacy, which surprises me.

05:32 That still feels new.

05:32 Yeah.

05:33 I remember when it came out.

05:34 Yeah.

05:36 Yeah.

05:36 Well, that was when async and await arrived.

05:38 Right.

05:38 So that was a big, the big deal there, but it doesn't have f-strings.

05:41 So it's.

05:42 Yeah.

05:42 That's the killer feature.

05:43 Yeah.

05:44 Yeah.

05:46 So there's that.

05:47 And they also said that you are not going to need to change your deployment infrastructure.

05:52 If you want to run async flask, you can just push a new version and it's good to go.

05:57 So yeah, a lot, a lot of neat things there.

05:59 Very good.

06:00 Nice.

06:01 What do we got next, Michael?

06:02 Well, what if Python were faster?

06:06 That would be nice.

06:07 That's always good.

06:08 We actually talked about Cinder.

06:10 Remember Cinder?

06:11 Yeah.

06:11 From the Facebook world.

06:14 So that's one really interesting thing that is happening around Python.

06:19 And there's a lot of cool stuff here.

06:21 But remember, this is not supported.

06:22 It's not meant to be a new runtime.

06:25 Just there to give ideas and motivation and examples and basically to run Instagram.

06:31 On the other hand, Mike Driscoll tweeted out, hey, Python might get a 2x speedup in the next version of Python.

06:38 And you might want to check out Guido's slides from the Python language summit at the virtual PyCon.

06:43 That's exciting, right?

06:44 Yes.

06:46 If Guido is saying it, then odds of it happening increase, right?

06:52 Exactly.

06:52 Exactly.

06:53 So a while ago, we actually covered what has now become known as the Shannon plan for making Python faster a little bit each time over five years, over the next four, at least, I guess, four years at that point.

07:07 And how to make that happen.

07:08 So some of these ideas come from there.

07:10 And so here I'm pulling up the slides.

07:12 And Guido says, can we make CPython faster?

07:16 If so, by how much?

07:17 Could it be a factor of two?

07:18 Could it be a factor of 10?

07:20 And do we break people if we do things like this?

07:22 So the Shannon plan, which was posted last October and we covered, talks about how do we make it 1.5 times faster each year, but do that four times.

07:32 And because of compounding performance, I guess, that's five times faster.

07:36 All right.

07:37 So there's that.

07:38 Guido said, thank you to the pandemic.

07:40 Thank you to boredom.

07:42 I decided to apply at Microsoft.

07:43 And shocker, they hired him.

07:45 So as part of that, it's kind of just like, hey, we think you're awesome.

07:51 Why don't you just pick something to work on that will contribute back?

07:54 That'd be really cool.

07:55 So his project at Microsoft is around making Python faster, which I think is great.

08:00 Cool.

08:00 So, yeah.

08:01 So there's a team of folks, Mark Shannon, Eric Snow, and Guido, and possibly others, who are working with the core devs at Microsoft to make it faster.

08:11 It's really cool.

08:12 Everything will be done on the public GitHub repo.

08:16 There's not like a secret branch that will be then dropped on it.

08:19 So it's all just going to be PRs to GitHub.com slash Python slash CPython, whatever the URL is, the public spot.

08:27 And one of the main things they want to do is not break compatibility.

08:31 So that's important.

08:33 Also said, what things could we change?

08:36 Well, you can't change the base object like PyObject.

08:41 Basically, the base class, right?

08:44 PyObject pointer.

08:44 That's it.

08:45 The PyObject class.

08:46 So that thing has to stay the same.

08:48 And it really needs to keep reference counting semantics because so much is built on that.

08:52 But they could change the bytecode that exists, the stack frame layout, the compiler, the interpreter, maybe make it a JIT compiler to JIT compile the bytecode, all of those types of things.

09:03 So that's pretty cool.

09:05 And they said, how are we going to reach two times speed up in 3.11?

09:07 An adaptive, specializing bytecode interpreter that will be more performant around certain operations, optimized frame stacks, faster calls, zero overhead exception handling, and things like integer internals.

09:23 So maybe treating numbers differently, changing how PYC files.

09:26 So there's a lot of stuff going on.

09:28 Also, putting the dunder dict for a class always at a certain known location because anytime you access a field, you have to go to the dunder dict, get the value out, and then read it.

09:40 And I suspect the first thing that happens is, well, go find the dunder dict pointer and then go get the element out of it.

09:47 So if every access could just go, nope, it's always one certain byte off in memory from where the class starts, that would save that sort of traversal there.

09:57 So some pretty neat things.

09:58 Yeah, I'm glad you explained that because I read it before and I'm like, why would that help at all?

10:02 I think you can traverse one fewer pointers.

10:06 Yeah.

10:07 In general, it doesn't matter, but literally everything you ever touch, ever, if you could cut in half the number of pointers, you got to follow, that'd be good.

10:14 Yeah.

10:15 Yeah.

10:15 This is always one of those things that always struck me with, when you're using Python, you don't think about these sorts of things.

10:21 It's when you're doing something in Rust or something, then you are confronted with the fact that you really have to keep track of where the pointer is pointing in memory and all that.

10:27 And you take a lot of this stuff for granted.

10:29 So it's great that people are still sort of going at it and looking for things to improve there.

10:33 Yeah, absolutely.

10:34 You know, in C, you do the arrow, you know, dash greater than sort of thing.

10:39 Every pointer.

10:39 So you're like, I'm following a pointer.

10:40 I'm following a pointer.

10:41 You know it, right?

10:42 Here you just, you write nice, clean code and magic happens.

10:45 So let me round this out with who will benefit.

10:49 So who will benefit?

10:50 If you're running CPU-intensive pure Python code, that will get faster because the Python execution should be faster.

10:58 Websites built in Python should be faster because a lot of that code is running in the Python space.

11:02 And tools that happen to use Python.

11:04 Who will not benefit so much?

11:05 NumPy, TensorFlow, Pandas, all the code that's written in C, things that are IO bound.

11:11 So if you're waiting on something else, speeding up the part that goes to wait doesn't really matter.

11:16 Multithreaded code because of the GIL at this point.

11:19 But Eric Snow is also working on the subinterpreters, which may fix that and so on.

11:23 So I like the last bullet.

11:25 Pretty neat stuff.

11:26 There's some peps out there.

11:27 I'll link to, I link to the tweet by Mike Driscoll, but that'll take you straight to the GitHub repo, which has the PDF of the slides.

11:37 And people can check that out if they're interested.

11:38 I like the last bullet for the previous slide of things, people that will not benefit.

11:43 Code that's algorithmically inefficient.

11:45 Otherwise, if your code already sucks, it's not going to be better.

11:49 It may be better, but it could be better.

11:52 I was going to say, like, theoretically, it actually would go faster and just...

11:57 Just not as much better as it could, right?

11:59 Yeah, it would still be like n to the power of three or something like that, but it would be faster n to the power of three.

12:05 Yeah, yeah.

12:06 It won't change the big O notation, but it might make it run quicker on wall time.

12:10 That's right.

12:11 Yeah.

12:11 Yeah.

12:12 And Christopher Tyler out there in the live stream says, I know I still need to improve my code, but this would be great, right?

12:18 I mean, it used to be that we could just wait six months.

12:21 A new CPU would come out that's like twice as fast as what we ran on before.

12:25 Like, oh, now it's fast enough.

12:26 We're good.

12:26 That doesn't happen as much these days.

12:28 So it's cool that the runtimes are getting faster.

12:30 Yeah.

12:31 And I mean, let's be honest.

12:32 Python is also still used for like just lots of script tasks.

12:35 Like, hey, I just need this thing on the command line that does the thing.

12:38 And I put that in cron, and like a lot of that will be nice if that just gets a little bit faster.

12:42 And it sounds like this will just be right up that alley.

12:45 Yeah.

12:45 And one of the things that I know has been holding certain types of changes back has been concern about slowing down the startup time.

12:54 Because if all you want to do is run Python to make a very small thing happen, but like there's a big JIT overhead and all sorts of stuff, and it takes two seconds to start and a nanosecond and microsecond to run, right?

13:05 They don't want to put those kinds of limitations on and kill that use case either.

13:09 So yeah, it's good to point that out.

13:11 All right, Vincent, you're up next.

13:12 Cool.

13:13 Yeah.

13:13 So I dabble a little bit in fairness algorithms.

13:17 It's a big, important thing.

13:18 So I get a lot of questions from people like, hey, if I want to do like machine learning and fairness, where should I start?

13:24 And I don't think you should start with algorithms.

13:26 Instead, what you should do is you should go check out this Python project called Deon.

13:30 And the project's really minimal.

13:32 The main thing that it really just does is it gives you a checklist of just stuff to check before you do like a big data science project at a big company or an enterprise or something like that.

13:41 And they're really sensible things.

13:44 They're sort of grouped together.

13:46 So like, hey, can I check off that I have informed consent and collection bias?

13:50 Can I check all these things off?

13:52 The main themes are...

13:53 And it's literally a checkbox.

13:54 You can check them off in the page to sort of get the feel of it.

13:57 Like, oh yeah, these are good.

13:58 It goes further.

13:59 So the thing is, this is an actual Python project.

14:01 You can generate this as YAML for your GitHub profile.

14:03 So like for your GitHub project, you actually have this checklist that has to be checked in Git.

14:07 So you know that people signed off on it.

14:09 Like you can actually see the checklist.

14:10 You can even maybe in your Git log see who checked it off.

14:13 But what's really cool is two things.

14:16 Like one, you can generate this checklist.

14:17 Two, you can also customize the checklist.

14:20 So if you are at a specific company with certain legal requirements, this tool actually kind of makes it easy to customize a very specific checklist for data projects.

14:27 But the real killer feature, if you ask me, like again, all of these comments are good.

14:31 Like, is the data security well done?

14:34 Is the analysis reproducible?

14:36 How do we do deployment?

14:38 Like all of these things that are usually like things that go wrong and were obvious in hindsight.

14:42 But the real killer feature is usually you have to convince people to take this serious.

14:46 So what the website offers is like an example list.

14:49 So for every single item that is on this checklist, they have one or two examples.

14:53 Typically, these are like newspaper articles of places where this has actually gone wrong in the past.

14:59 So if you need like a really good argument for your boss, like, hey, we got to take this serious.

15:03 There's a newspaper article you can just send along as well.

15:06 Oh, that's interesting.

15:08 Yeah.

15:08 I like it.

15:09 And the fact you can also generate Jupyter notebooks with this.

15:13 You can customize it a little bit.

15:14 The people that made this, the company I think is called DrivenData.

15:18 They host Kaggle competitions for like good causes.

15:20 That's sort of a thing that they do there.

15:21 But Deon is just a really cool project.

15:23 Like I think if more people would just start with a sensible checklist and work from there, a lot of projects would immediately be better for it.

15:31 Yeah, this is really cool.

15:33 So things are, can you go to the very bottom of that page that you're on?

15:37 Yeah.

15:37 Sorry, just the checklist.

15:39 Oh, right.

15:39 Yeah, yeah.

15:40 So there's some examples like make sure that you've accounted for unintended use.

15:45 Have you taken steps to identify and prevent unintended uses and abuse?

15:49 So like if you created a find my friends in pictures.

15:53 So like I want to find pictures my friends have taken of me.

15:55 You could put it up and it would show you all the pictures your friends took.

15:57 But maybe someone else is going to use that to, I don't know, try to phish you.

16:02 Like here's the picture of us together or I don't know, some weird thing.

16:06 Use it for like facial recognition and tracking when it had no such intent.

16:10 Right.

16:10 Things like that.

16:11 I think for and I might be.

16:13 So it doesn't have this example.

16:15 The best example of unintended use.

16:17 There used to be this geo lookup company where you could give an IP address and give you like

16:21 an actual address.

16:22 However, sometimes you don't know where the IP address actually is.

16:25 So just give like the center point of like a US state or the country.

16:28 So there used to be this house in the middle of Kansas, I think.

16:31 It was like the center point.

16:34 But the thing is, they would get like FBI trucks driving by and like doing raids and stuff because

16:39 they thought there were criminals there because the geo lookup servers would always say like,

16:43 ah, the crooks at that IP address, that's this latitude longitude place.

16:46 Right, right.

16:46 We had a cyber attack.

16:48 It was from this IP address.

16:49 Raid them, boys.

16:51 Of course, it was just some poor farmer in the Midwest going, you know, just the geographic

16:58 center.

16:59 Please stop raiding my farm.

17:00 Yeah, but the story was actually quite serious.

17:03 Like, I think the person who lived there got like death threats at some point as well because

17:07 of the same mistake.

17:07 So like this is stuff to take seriously.

17:09 The one thing that I did like is the solution.

17:12 I think now the, the, the, the, instead of it pointing to the house in Kansas, I think

17:17 it points to like the center of the three big lakes in Michigan.

17:21 I think it's just the middle of a puddle of water, basically, just to make it like obvious

17:25 to the FBI squads that like, nah, it's not a person living there.

17:28 Yeah, that's good.

17:29 They're like, darn, these submarines are, they've moved underwater.

17:32 Or, or whatever.

17:33 But I mean, but that's why you want to have a checklist like this.

17:36 Like you're not going to, the thing with unintended use is you, it's unintended.

17:39 So you cannot really imagine it, but you at least should do the exercise.

17:43 And that's what this list does in a very sensible way.

17:46 And more people should just do it.

17:47 And there's interesting examples too.

17:49 You should just have a look.

17:50 And there's also a little community.

17:51 There's a little community around it as well of like collecting these examples.

17:54 And they have like a wiki page with examples that didn't make the front page cut.

17:58 So definitely recommend anyone interested in fairness.

18:00 Start here.

18:01 I was curious.

18:03 You brushed by fairly quickly of fairness analysis, fairness analysis.

18:08 Is that what you do?

18:09 I just don't know what that means.

18:12 So could you?

18:13 Yeah.

18:14 So, oh man, this is a longer, like this topic deserves more time than I'll give it.

18:18 But the idea is that you might be able, we know that models aren't always fair, right?

18:23 It can be that you have models that, for example, the Amazon was a nice example.

18:30 So they had like a resume parsing algorithm that basically favored men because they hired

18:36 more men historically.

18:37 So the algorithm would prefer men.

18:38 Oh, okay.

18:39 Stuff like that.

18:39 That kind of fairness.

18:40 Okay.

18:40 Got it.

18:41 Yeah.

18:41 Historical.

18:41 These have been our good employees.

18:43 Let's find more like them.

18:44 Exactly.

18:45 Something like that.

18:46 And the thing is, you don't want an algorithm that's unfair.

18:48 So there are these machine learning techniques and there's this community of researchers that

18:52 try to look for ways like, can we improve the fairness of these systems?

18:55 So we don't just optimize for accuracy.

18:57 We also say, well, we want to make sure that subgroups are treated fairly and equally and

19:01 stuff like that.

19:02 So I dabble a little bit in this.

19:04 There's this project I like to collaborate with.

19:06 I've open sourced a couple of things with these people.

19:08 It's called Fairlearn.

19:10 The main thing that I really like about the package is that it starts by saying fairness

19:14 of AI systems is more than just running a few lines of code.

19:17 Like it starts by acknowledging that.

19:19 But they have mitigation techniques and algorithms and like tools to help you measure the unfairness.

19:24 It's scikit-learn compatible as well.

19:25 Stuff to like.

19:26 Having said all that, start here.

19:29 Start with a checklist.

19:30 Don't worry about the machine learning stuff just yet.

19:32 Start here.

19:33 But yeah.

19:34 Very cool.

19:34 Before we move on, Connor Furster in the live chat says, I'm glad the conversation of ethics

19:40 and data science is enlarging.

19:41 I think it's important about what we make.

19:43 Yeah.

19:44 I agree.

19:44 Totally.

19:45 Now, before we do move on though, let me tell you all about our sponsor for this episode,

19:50 Sentry.

19:51 So this episode is brought to you by Sentry.

19:53 Thank you, Sentry.

19:54 How would you like to remove a little bit of stress from your life?

19:57 Do you worry that users may be having difficulties or encountering errors with your app right now?

20:02 And would you even know it until they sent you that support email?

20:05 How much better would it be to have the errors and performance details immediately sent to you,

20:09 including the call stack and values of local variables and the active user recorded right

20:14 in the report?

20:14 With Sentry, it's not only possible, it's simple.

20:17 We actually use Sentry on our websites.

20:19 It's on pythonbytes.fm.

20:21 It's on Talk Python Training, all those things.

20:23 And we've actually fixed a bug triggered by a user and had the upgrade ready to roll out as we got the support email.

20:30 They said, hey, I'm having a problem with the site.

20:32 I can't do this or that.

20:33 I said, actually, I already saw the error.

20:35 I just pushed the fix to production.

20:36 So just try it again.

20:37 Imagine their surprise.

20:39 So surprise and delight your users.

20:41 Get your Sentry account at pythonbytes.fm/sentry.

20:44 And when you sign up, there's a got a promo code.

20:47 Redeem it.

20:47 Make sure you put Python Bytes in that section or you won't get two months of free Sentry team

20:52 plans and other features.

20:53 And they won't know it came from us.

20:54 So use the promo code at pythonbytes.fm/sentry.

20:57 Yeah.

20:58 Thanks.

20:58 Thanks for supporting the show, Brian.

21:00 Yeah.

21:01 I like this one that you picked here.

21:02 You like this?

21:03 I like it a lot.

21:04 It's very good.

21:04 It has pictures, little animated things, and great looking tools.

21:09 Yeah.

21:09 So there's an article.

21:11 It was sent to us.

21:11 I can't remember who sent it.

21:12 So apologies.

21:13 But it's an article called Three Tools to Track and Visualize the Execution of Your Python Code.

21:19 And I don't know why.

21:23 Executing your code just seems funny to me.

21:25 I know it just means run it, but, you know, chop its head off or something.

21:29 Anyway, so the three tools, the three tools it covers are, we don't cover this very much

21:38 because I don't know how to pronounce it.

21:39 L-O-G-U-R-U.

21:41 It's LogGuru or LogGuru?

21:43 Not sure.

21:44 And then, so LogGuru is a pretty printer with better exceptions.

21:49 So let's go and look at that.

21:52 So it does exceptions like this.

21:54 It breaks out your exceptions into colors.

21:56 And it's just kind of a really great way to visualize it.

22:00 And I would totally use this for if I was teaching, like if I was teaching a class or something,

22:05 this might be a good way to teach people how to look at trace logs and error logs.

22:12 That is fantastic.

22:13 And if you're out there listening and not seeing it, you should definitely pull up this site because the pictures really are what you need to tell quickly.

22:19 Yeah.

22:19 Yeah.

22:20 That's one of the things I like about this article is that lots of great pictures.

22:25 So one thing out of curiosity.

22:26 So what I'm seeing here is that, for example, it says return number one divided by number two.

22:30 And then you actually see the numbers that were in those variables.

22:33 Do you have to add like a decorator or something to get this output?

22:36 Or how does that work?

22:38 That's explained later, maybe.

22:40 I don't remember where.

22:43 Yeah, it's explained later, I think.

22:44 Yeah.

22:44 Yeah.

22:45 I think you just pull it in and it just does it, but I'm not sure.

22:48 Okay.

22:48 Interesting.

22:49 Anyway.

22:49 So that's Loguru.

22:53 Then there's Snoop, which is kind of fun.

22:57 Let me scroll down to Snoop.

23:01 I should have had this up already.

23:02 Anyway, with Snoop, you can see it prints lines of code being executed in a function.

23:08 So it just runs your code and then prints out each line in real time as it's going through it.

23:14 You would hardly ever want this, I think.

23:17 But when you do want it, I think it might be kind of cool to watch it go along.

23:22 And you could also do this in a debugger.

23:25 But if you didn't want to use a debugger,

23:27 You can do this on the command line.

23:28 Well, one thing that's a little challenging with most debuggers is that you'll see the state, and you'll see the state change.

23:36 And you'll see it change again.

23:38 But in your mind, you've got to remember, okay, that was a seven.

23:40 And then it was a five.

23:42 And then it was a three.

23:43 Oh, right.

23:44 Yeah.

23:44 Right.

23:44 And here it'll actually reproduce each line, each block of code, with the values alongside.

23:50 If you're in a loop three times, it'll show like going through the loop three times with all the values set.

23:55 And that's pretty neat.

23:57 Yeah.

23:57 I would also argue that for teaching recursion this visualization is kind of nice, because you actually see the indentation and the depth appear.

24:04 So you can actually see like this function is called inside of this other function and there's a timestamp.

24:09 So I would also argue this one's pretty good for teaching.
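Snoop's line-by-line output is built on Python's tracing hook. The sketch below is not snoop's code: it is a minimal, stdlib-only illustration of the same idea using `sys.settrace`, recording each executed line of one function together with a snapshot of its local variables. `run_traced` and `total` are hypothetical names; with the real package (`pip install snoop`) you would decorate the function with `@snoop` instead.

```python
import sys


def run_traced(func, *args, **kwargs):
    """Run func(*args, **kwargs), recording every line executed inside func
    together with a copy of its local variables at that moment."""
    events = []
    target = func.__code__

    def local_tracer(frame, event, arg):
        if event == "line":
            # f_locals shows the state just *before* this line runs
            events.append((frame.f_lineno, dict(frame.f_locals)))
        return local_tracer

    def global_tracer(frame, event, arg):
        # Called for each new frame; only trace frames running func itself
        return local_tracer if frame.f_code is target else None

    previous = sys.gettrace()
    sys.settrace(global_tracer)
    try:
        result = func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active
    return result, events


def total(numbers):
    acc = 0
    for n in numbers:
        acc += n
    return acc


result, events = run_traced(total, [7, 5, 3])
first_line = total.__code__.co_firstlineno
for lineno, local_vars in events:
    # Print line offsets relative to the `def` line, plus the variables seen
    print(f"line +{lineno - first_line}: {local_vars}")
```

Unlike a debugger session, every intermediate value of `acc` stays visible in the printed history, which is the point made above about not having to remember "that was a seven, then a five".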

24:12 I like it.

24:13 And in fact, Connor on the live stream says, I'm teaching my first Python course tomorrow.

24:17 So, yeah.

24:18 Thanks for the timely article.

24:19 And a real-time follow-up for Loguru: you import logger, put a decorator on the function, and then it captures that super detailed output.

24:30 And that's probably exactly what you want, because you don't really want to do that for everything.

24:36 So there'll be something you're working on that you want to trace.

24:39 So heartrate is the last tool that we want to talk about.

24:43 And it's a way to visualize the execution of a Python program in real time.

24:48 This is something we haven't covered before, but I thought there was a little video.

24:53 Yeah.

24:53 It kind of goes through and does a little heat-map sort of thing on the side of your code.

25:03 So when it's running, you can kind of see that different things get hit more than others.

25:08 So that's, that's fast.

25:10 Almost like a profiler, sort of. Not speed, though.

25:13 It's just number of hits.
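heartrate visualizes how often each line gets hit. As a rough stdlib-only illustration of the counting part (not heartrate's implementation, and with no live display), this sketch tallies line events with `sys.settrace`; `count_line_hits` and `every_third` are hypothetical names.

```python
import sys
from collections import Counter


def count_line_hits(func, *args, **kwargs):
    """Count how many times each line of func executes (offsets from `def`)."""
    hits = Counter()
    target = func.__code__

    def local_tracer(frame, event, arg):
        if event == "line":
            hits[frame.f_lineno - target.co_firstlineno] += 1
        return local_tracer

    def global_tracer(frame, event, arg):
        # Only trace frames that run func's own code object
        return local_tracer if frame.f_code is target else None

    previous = sys.gettrace()
    sys.settrace(global_tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)
    return hits


def every_third(limit):
    count = 0
    for i in range(limit):
        if i % 3 == 0:
            count += 1
    return count


hits = count_line_hits(every_third, 9)
for offset, n in sorted(hits.items()):
    # A crude text "heat map": one # per hit
    print(f"line +{offset}: {'#' * n} ({n})")
```

As noted above, this measures hits, not time: the `if` line shows up far more often than the `count += 1` line even though neither is slow.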

25:14 Yeah.

25:15 Yeah.

25:16 I'm, I'm, I'm kind of on the fence about this, but it's pretty.

25:19 So yeah, same.

25:21 But the Loguru one looks amazing.

25:23 I thought Loguru was also a general logging tool.

25:26 Like, it does more, I think, than just things for debugging.

25:32 Yeah.

25:32 I think it's a general logging tool as well.

25:34 Okay.

25:34 Okay.

25:35 But I guess it logs errors really well.

25:37 So there's the logger.catch decorator.

25:42 Okay.

25:42 Well, you could probably do other things with the logger then as well, but having a good

25:45 logging debugger catcher is always welcome.

25:48 Yeah, absolutely.

25:49 All right.

25:50 Let's talk about ducks.

25:51 I mean, Brian, you and I are in Oregon.

25:53 Go ducks.

25:54 Is that a... well, I know your daughter goes there.

25:56 My daughter goes to OSU.

25:59 So go Beavs, I guess.

26:00 Whatever ducks.

26:01 We're gonna talk duck databases anyway.

26:03 And data science.

26:04 So Alex Monahan sent this over to us, saying, hey, you should check out this article about

26:10 DuckDB, which is a thing I'm now learning about.

26:13 And its integration.

26:14 Its direct integration with pandas.

26:16 So instead of taking data from a database, loading it into a pandas data frame, doing stuff on it,

26:23 and then getting the answer out, you basically put it into this embedded database, DuckDB,

26:27 which is SQLite-like, and then, you know, sorry, you put it into a pandas data frame,

26:32 but then the query engine of DuckDB can query it directly, without any data exchange, without transferring it back and forth between the two systems or formats.

26:41 That's pretty cool.

26:42 Right?

26:42 So let me pull this.

26:43 Oh, that's Hannes.

26:45 I know him.

26:45 Nice.

26:46 Yeah.

26:47 He's from Amsterdam.

26:47 Yeah.

26:48 Very cool.

26:49 So here's the idea.

26:50 We've got SQL on pandas,

26:53 basically.

26:54 So if we had a data frame here, they have a really simple data frame, but just, you know, a single array, but it could be a very complex data frame.

27:02 And then what you can do is import duckdb and say duckdb.query.

27:07 And then you write something like, so one of the columns is called a in the data frame.

27:12 And you could say, select sum of a from the data frame.

27:17 How cool is that?

27:18 I don't know.

27:19 Is it cool?

27:19 It's very cool.

27:21 So then there's also a to_df on the result.

27:24 So what happens here is this is parsed by DuckDB, which has an advanced query optimizer for things like joins and filtering and indexes and all that kind of stuff.

27:37 And then it says, oh, okay.

27:38 So you said there's a thing called my_df; I'll just go look in the locals of my current call stack and see if I can find that.

27:47 Oh, yeah, that is neat.

27:49 So you can write arbitrary SQL.

27:51 And this one looks pretty straightforward.

27:52 You're like, yeah, yeah.

27:53 Okay.

27:53 Interesting.

27:53 Interesting.

27:54 But you can come down here and do more interesting things.

27:58 Let's see.

27:59 I'll pull up some examples.

28:00 So they do a select aggregation group by thing.

28:04 So select these two things and then also do a sum, min, max, and average on some part of the data frame.

28:11 And then you pull it out of the data frame and you group by two of the elements.

28:15 Right.

28:16 And they show also what that would look like if you did that in true pandas format.

28:20 That's cool.

28:21 And they say, well, it's about two to three times faster in the DuckDB version.

28:26 That is interesting.

28:27 That's interesting.

28:28 Right.

28:28 But then they say, well, what if we didn't just want a group by, but also a filter?

28:33 Seems real simple.

28:34 Like where the ship date is less than 1998.

28:36 No big deal.

28:38 But because of the way this can be really efficiently figured out by the query optimizer, it turns out to be much faster.

28:46 So 0.6 seconds on single threaded or it actually supports parallel execution as well.

28:52 So multi-threaded.

28:53 They tested on a system that only had two cores, but it can be many, many cores.

28:57 So it's faster: 0.4 seconds when threaded versus 2.2 seconds.

29:03 Sorry, 3.5 seconds on regular pandas.

29:06 But there's this more complicated, non-obvious thing you can do called a manual pushdown in pandas, which will help drive some of the efficiency before other work happens.

29:16 And then they finally show one at the very end where there's more going on that the query optimizer handles.

29:22 So the threaded one's 0.5 seconds.

29:24 Regular pandas is 15 seconds.

29:26 So all that's cool.

29:27 And what's really neat is it all just happens like on the data frame.

29:30 Yeah, there's two things about that that are pretty interesting.

29:32 Like, one is we shouldn't underestimate how many people are still new to pandas but do understand SQL.

29:36 So just for that use case, I can imagine, you know, you're going to get a lot of people on board.

29:41 But the fact that there's a query optimizer in there that's able to work on top of pandas, that's also pretty neat.

29:47 Because I'm assuming it's doing clever things like, oh, I need to filter data.

29:50 I should do that as early on as possible in my query plan.

29:53 It's doing some of that logic internally.

29:55 And the fact that you can parallelize it, because pandas doesn't parallelize easily,

29:59 that's also something.

30:00 Yeah, I don't know that it parallelizes at all.

30:02 You've got to go to something like Dask.

30:04 Yeah.

30:04 I mean, there are some tricks that you could do, but they're tricks.

30:08 They're not really natively supported.

30:10 Right.

30:11 Right.

30:11 But just having a SQL interface is neat.

30:13 Yeah.

30:14 Yeah.

30:15 This is pretty neat.

30:15 And also now I learned about DuckDB.

30:17 So apparently that's a thing, which is pretty awesome.

30:21 So it's in-process, just like SQLite, and it's written in C++11 with no dependencies.

30:27 Ooh.

30:28 Supposed to be super fast.

30:29 So this is also a cool thing that, you know, maybe I'll check out even unrelated to querying pandas,

30:33 but the fact that you can do that is pretty cool.

30:35 It's got a great name.

30:36 Yeah.

30:37 You know, another database out there I hear a lot about, but have never used enough to really have

30:42 an opinion about, is CockroachDB.

30:44 I'm not a huge fan of the name.

30:47 Although it has some interesting ideas.

30:49 I think it's meant to communicate resiliency: it can't be killed because it's geo-distributed

30:53 and it's just going to survive.

30:55 But yeah.

30:55 Ducks.

30:56 I'll go with ducks.

30:57 Yeah.

30:58 I would agree.

30:59 Yeah.

30:59 And then out in the live stream chat, Christopher says, so is DuckDB

31:04 working on pandas data frames, or can you load the data, method chain with DuckDB, and reduce

31:09 memory?

31:09 I believe you could do either.

31:11 Like, you could load data into it, and then there's a to_df option to get a data frame

31:16 back out of it.

31:17 But I think, I think just very briefly.

31:19 It's right on it.

31:19 Yeah.

31:20 I might've just seen it briefly while you were scrolling in the blog post,

31:24 but I believe it also said that it supports the Parquet file format.

31:27 It does.

31:28 So the nice thing about parquet is you can kind of index your data cleverly.

31:32 Like you can index it by date on the file system.

31:34 And then presumably if you were to write the SQL query in DuckDB, it would only read the

31:39 files of the appropriate date if you put a filter in there.

31:41 So I can imagine that, just for that reason, DuckDB on its own might be more memory efficient

31:46 than pandas, I guess.

31:47 Yeah.

31:48 Perhaps.

31:48 That's very cool.

31:49 Stuff like that you could do.

31:50 Yeah.

31:51 And then Nick Harvey also says, I wonder if it's read only, if you can insert or update.

31:55 I don't know for sure, but you can see in some of the places they are doing like projections.

32:02 So for example, they're doing a select sum min max average.

32:06 Like that's generating data that goes into it.

32:07 And then the result is a data frame.

32:10 So you can just add into the data frame afterwards if you want to be more manual about it.

32:14 Yeah.

32:14 All right.

32:15 Vincent, you got the last one.

32:16 Yeah.

32:17 So the thing is, I work for a company called Rasa.

32:20 We make Python software that makes virtual assistants easier to build.

32:25 And I was looking in our community showcase and I just found this project that just made me

32:30 kind of feel hopeful.

32:31 So this is a personal project, I think.

32:33 So we have a name here, Amit and I'm hoping I'm pronouncing it correctly, Arvind.

32:38 But what they did is use Rasa kind of like a Lego brick to make this assistant,

32:44 if you will, that you can send a text message to.

32:46 Now what it does, and I'll zoom in a little bit so people on YouTube can

32:51 see the GIF: every 10 minutes, it scrapes the weather information, the fire hazard

32:56 information, and I think evacuation information from local government in California, meant to help

33:01 people during wildfire season.

33:02 And they completely open sourced this project as well.

33:05 So there's a linked GitHub project where you can just see how they implemented it.

33:09 And it's a fairly simple implementation.

33:11 They use Rasa with the Twilio API.

33:14 They're doing some neat little clever things here with like, if you misspelled your city,

33:19 they're using like a fuzzy string matching library to make sure that even if you misspell your

33:23 city, they can still try to give you like accurate information.

33:27 But what they do is they just have this endpoint where you can send the text message to like,

33:30 give me the update of San Francisco.

33:32 And then it will tell you all the weather information, air quality information, and that sort of thing.

33:36 And if you need to evacuate, they will also be able to tell you that.

33:38 And what I just loved about this, if you look at the way that they described it,

33:44 this was just two people who knew Python who were a little bit disappointed with the communication

33:49 that was happening.

33:50 But because the APIs were open, they just built their own solution.

33:52 And like thousands of people use this.

33:55 And what's even greater is that, you know, if your mobile coverage isn't great, watching a YouTube

34:01 video or like trying to get audio in can be tricky.

34:03 But a text message is really low bandwidth.

34:05 So for a lot of people, this is like a great way to communicate.

34:08 And of course, I'm a little bit biased because I work for Rasa.

34:11 And I think it's awesome that they used Rasa to build this.

34:13 But again, the whole thing is just open source.

34:15 You can go to their GitHub and you can just, if I'm not mistaken, there's like the scraping job

34:22 of the endpoints actually in here as well.

34:25 But this is like exactly what you want.

34:27 Just a couple of open APIs and sort of citizen science, building something that's useful for the

34:30 community.

34:31 It's great.

34:32 Yeah, I like it.

34:33 And text message is probably a really good way to communicate for disasters, right?

34:36 Yes.

34:37 Possibly in a place where, you know, LTE has crashed, Wi-Fi is out, right?

34:43 Even if you're on EDGE, you know, text should still get there.

34:47 Exactly.

34:47 Unless you're on iMessage, then you're out of luck.

34:49 No, I don't know.

34:50 Sort of.

34:51 Well, yeah.

34:52 I live in Europe, so I cannot comment on that, of course.

34:55 But it's a little bit different here.

34:57 But no, but like the data service, you can just look in here.

35:00 And this is like, again, I like these little projects that don't need anyone's permission

35:04 to help people.

35:05 Like that stuff, like, this is good stuff.

35:07 Yeah.

35:08 And the thing that I also really like about it is it's really just sending you a text message

35:12 with like air quality information and like enough information.

35:14 And that's good.

35:15 It's not like they're trying to make like a giant predictive model on top of this or anything

35:19 like that.

35:20 But just really doing enough and enough is plenty.

35:22 Like that's the thing I really love about this little demo.

35:24 And of course, using Rasa, which is great.

35:26 But projects like this are why I get up in the morning.

35:32 That's fantastic.

35:34 Yeah, I love it.

35:36 That's a really good one.

35:36 Brian, is that it?

35:37 Yeah, that's it.

35:39 That's our six items.

35:41 Any extras that you want to talk about?

35:43 I might have one.

35:44 Okay.

35:45 Yeah.

35:46 Okay.

35:46 So I'm totally tooting my own horn here, but this is a project I made a little while

35:51 ago.

35:52 But I think people might like it.

35:54 So at some point, it kind of struck me that people are making these machine learning algorithms

35:58 and they're trying to like on a two dimensional plane, trying to separate the green dots from

36:03 the red ones from the blue ones.

36:04 And I just started wondering, well, why do you need an algorithm if you can just maybe draw

36:10 one?

36:10 So very typically you got these like clusters of red points and clusters of blue points.

36:14 And I just started wondering, maybe all we need is like this little user interface element

36:19 that you can load from a Jupyter notebook.

36:20 And maybe once you've made a drawing, it'd be nice if we can just turn it into a scikit-learn

36:25 model.

36:25 So there's this project called Human Learn that does exactly this.

36:29 It's a set of little buttons and widgets that I've made to just make it easier for you

36:35 to like do your domain knowledge thing and turn it into a model.

36:38 So one of the things that it currently features is like the ability to draw a model, which

36:42 is great because domain experts can just sort of put their knowledge in here.

36:45 It can do outlier detection as well, because if a point falls outside of one of your drawn

36:50 circles, that also means that it's probably an outlier.

36:52 But it also has a tool in there that allows you to turn any Python function, any

36:57 custom function you've written, into a scikit-learn compatible tool as well.

37:01 So if you can just declare your logic in a Python function, that can also be a machine learning

37:05 model from now on.

37:07 There's an extra fancy thing if people are interested.

37:09 I just made a little blog post about that, where I'm using a very advanced coloring technique

37:16 using parallel coordinates.

37:17 Very fancy technique.

37:20 I won't go into too much depth there.

37:22 But what's really cool is that you can basically show that a drawn model can outperform the model

37:27 that's on the Keras deep learning blog, which I just thought was a very cool little feature

37:32 as well.

37:33 The project's called Human Learn.

37:35 It's just components for inside of Jupyter Notebook to make sort of domain knowledge and

37:40 human learning and all that good stuff better.

37:42 And also with the fairness thing in mind, I really like the idea that people sort of can

37:47 do the exploratory data analysis bit and at the same time also work on their first machine

37:51 learning model as a benchmark.

37:52 That's what Human Learn does.

37:54 So if people are sort of curious to play around with that, please do.

37:57 It's open source.

37:58 pip install.

37:59 Please use it.

37:59 I'm impressed.

38:00 This is cool.

38:01 It is, right?

38:02 It is, right?

38:02 Yeah.

38:03 Matty out in the live stream asks, how does it handle n-dimensional data?

38:07 So-

38:08 I guess three dimensions or more.

38:09 Yeah.

38:09 So you can make like, so if you have four columns, you can make two charts with two dimensions.

38:14 That's one way of dealing with it.

38:15 And there's like a little trick where you can combine all of your drawings into one thing.

38:19 If you go to the examples though, the parallel coordinates chart that you see here, that has

38:23 30 columns and it works just fine.

38:25 I do think like 30 is probably like the limit, but the parallel coordinates chart, I mean,

38:30 you can make a subselection across multiple dimensions.

38:32 That just works.

38:33 It's really hard to explain a parallel coordinates chart on a podcast though.

38:37 I'm sorry.

38:39 Yeah.

38:40 So this is like a super interactive visualization thing with lots of colors and stuff happening.

38:44 I'm sorry.

38:45 You have to go to the docs to fully experience that, I guess.

38:47 But again, also, like if you, let's say you work for a fraud office and someone asks you like,

38:54 Hey, without looking at any data, can you come up with rules

38:56 for what's probably fraud?

38:57 And you can kind of go, yeah, if you're 12 and you earn over a million dollars, that's probably

39:01 weird.

39:01 Someone should just look at that.

39:03 And the thing is, you can just write down rules that way.

39:05 And that can already be turned into a machine learning model.

39:08 You don't always need data.

39:09 And that's the thing I'm trying to cover here.

39:11 Like just make it easier for you to declare stuff like that.

39:14 It's a more human approach.
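human-learn's `FunctionClassifier` is the project's tool for turning a plain Python function into a scikit-learn compatible classifier. The sketch below does not use human-learn; it is a hypothetical, dependency-free `RuleClassifier` that only shows the fraud rule above expressed as a model with `fit`/`predict` methods, with the thresholds exposed as parameters. The exact thresholds are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RuleClassifier:
    """Hypothetical rule-based model with a scikit-learn-like fit/predict shape."""
    max_age: int = 12
    min_income: float = 1_000_000

    def fit(self, X, y=None):
        # Nothing to learn: the "model" is the domain expert's rule
        return self

    def predict(self, X):
        # 1 = flag for a human to look at, 0 = looks normal
        return [
            int(row["age"] <= self.max_age and row["income"] > self.min_income)
            for row in X
        ]


rows = [
    {"age": 12, "income": 2_000_000},
    {"age": 35, "income": 2_000_000},
    {"age": 10, "income": 50},
]
predictions = RuleClassifier().fit(rows).predict(rows)
print(predictions)  # [1, 0, 0]
```

Keeping the thresholds as constructor parameters is what would let a grid search tune a hand-written rule, without any training data being required to write it in the first place.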

39:15 Nice.

39:16 Brian, I cut you off.

39:17 Were you going to say something?

39:18 Oh, one of the things, I don't know if we've covered this already, but we've talked about

39:23 calmcode.io a lot on this podcast.

39:26 And you're the person behind it, right?

39:29 Yeah, I am.

39:30 Yeah.

39:30 It's been a fun little side project that I've been doing for a year now.

39:33 Yeah.

39:34 Yeah.

39:34 So nice videos.

39:35 I like how short they are.

39:37 Thanks.

39:37 No, I like to hear that; people tell me that, and that's also the thing that I was

39:41 kind of going for.

39:42 Like I love the, you know, when you watch a video, it was like a lightning talk and you

39:46 learn something in five minutes.

39:47 Yeah.

39:48 Oh, that's an amazing feeling.

39:50 Like that's the thing I'm trying to capture there a little bit.

39:52 Like if, if it takes more than five minutes to get a point across, then I should go on

39:57 to a different topic, but I'm happy to hear you like it.

39:59 Cool.

39:59 Yes.

39:59 Very cool.

40:00 How about you, Michael?

40:01 Any extras?

40:02 Well, I had two.

40:04 Now I have three, because I was reading the source code of the project Vincent covered.

40:10 And as we were talking, I learned about fuzzywuzzy.

40:13 So fuzzywuzzy was being used in that emergency disaster recovery awareness thing.

40:21 And it's fuzzy string matching in Python.

40:24 And it says fuzzy string matching like a boss, which you got to love.

40:28 So it handles slight misspellings, plural versus not plural, and whatnot.

40:32 And Brian, it even uses Hypothesis, which is kind of interesting.

40:36 Yeah.

40:37 And pytest.

40:37 Yeah.

40:38 And pytest, of course.

40:38 Yeah.

40:39 Anyway, that, that's pretty cool.

40:40 I just, I just discovered that.

40:41 So fuzzywuzzy is a pretty cool tool.

40:44 The only thing I don't like about it.

40:46 And it's the one thing I do have to mention.

40:47 It is my understanding that "fuzzy wuzzy" is a slur in certain regions of the world.

40:52 So in terms of naming a package, they could have done better there, but I think

40:55 they only realized that in hindsight.

40:56 Other than that, there's some cool stuff in there.

40:58 Definitely.

40:59 Just when I learned about this, I did make a note to myself like, okay, I should always

41:03 acknowledge it whenever I talk about the package.

41:04 But yeah, it's a, it's definitely useful stuff in there.

41:07 Fuzzy string matching is a useful.

41:09 It's a useful problem to have a tool for.

41:10 Yeah.

41:11 Very cool.

41:12 And the PyCon announcement for way out in the future, 2024 and 2025, is out.

41:18 So the next two PyCons are already theoretically in Salt Lake City.

41:23 So hopefully we actually go to Salt Lake City and don't just virtually imagine

41:28 we were there, right?

41:29 Like this year.

41:29 But the last two years, because of the pandemic, Pittsburgh lost its opportunity to have PyCon.

41:36 So not just once, but twice.

41:37 So they are rescheduling the next one back into Pittsburgh.

41:41 So folks there will be able to go and be part of PyCon.

41:43 That's pretty cool.

41:45 Because of Corona, they've now been able to plan four years ahead of time.

41:49 Exactly.

41:50 Everything's upside down now.

41:53 And then also, I just want to give a quick shout out to an episode that I think is coming

41:57 out this week on Talk Python.

41:58 I'm pretty sure that's the schedule. It's called CodeCarbon.io.

42:02 And it is a, let me pull it up here.

42:04 It is both a dashboard that lets you look at the carbon generation, the CO2 footprint of your

42:11 machine learning models, specifically around the training of the models.

42:16 So what you do is, it's somewhere here, you pip install this emissions tracker,

42:21 and then you just say, start tracking, train, stop tracking.

42:24 And it uses your location, your data center, the local energy grid, the sources of energy

42:30 from all that.

42:31 And it'll say like, oh, if you actually switch to say the Oregon AWS data center from Virginia,

42:37 you'd be using more, you would be using more hydroelectric rather than, I don't know,

42:42 gas or whatever, right?

42:44 So just, we were talking about some of the ethics and cool things that we should be paying

42:48 attention to.

42:48 And I feel like the sort of energy impact of model training might be worth looking at as

42:53 well.

42:53 So I totally agree with model training.

42:56 I've been wondering about this other thing though, and that's testing on GitHub.

42:59 Like if you think about some of these CI pipelines, they can be big too.

43:02 Like, I've heard of projects that take an hour on every commit.

43:05 I'd be curious to run this on that stuff as well.

43:08 Yeah.

43:08 Well, you could employ this as part of your CI/CD.

43:13 It doesn't really have to do with model training per se, but it does things like when you train

43:19 models that use a GPU, it'll actually ask the GPU for the electrical current.

43:23 Ah, right.

43:25 Right.

43:25 So it goes down into the hardware.

43:26 That's a fancy feature.

43:28 That's a fancy feature.

43:29 And it goes down to the CPU level, the CPU-level voltage and all sorts of low-level stuff.

43:34 It's not just, well, it ran for this long.

43:35 So it's this, right?

43:36 That's like really detailed.

43:38 That said, I suspect you could actually answer the same question on a CI, right?

43:44 It would just say, well, it looks like you're training on a CPU.

43:46 Yeah.

43:47 Yeah.

43:48 True.

43:49 But so it's a nice way to be conscious about compute times and stuff.

43:53 So that's.

43:53 Yeah.

43:54 And what's cool is it has the dashboard that actually lets you explore, well,

43:58 if I were to shift it to Europe rather than train in the US, and who really cares where

44:02 it trains, what difference would that make?

44:04 Look at how green Paraguay is where you're hosting.

44:07 Yeah.

44:08 That's incredible.

44:09 I suspect a lot of waterfalls.

44:11 Yeah.

44:12 Hydro.

44:13 Countries down there have insane amounts of hydro.

44:15 Yeah.

44:16 Like Chile.

44:17 Maybe I can't remember exactly, but yeah, it's a lot of hydro.

44:19 And you see, and you see Iceland as well.

44:20 And it's probably because of the volcanoes and warmth and heat.

44:22 And yeah.

44:23 Yeah.

44:23 The geothermal.

44:24 Yeah.

44:24 Okay.

44:24 Interesting.

44:25 All right.

44:25 Nice.

44:26 Brian, you got anything?

44:27 Not this week.

44:28 How about we do a joke?

44:29 Sounds good.

44:30 So.

44:32 It's been a while since I've been to a strongest man competition, world's strongest

44:36 man.

44:37 You know, maybe one of those things where you pick up a telephone pole and you have

44:40 to throw it as far as you can, or you lift the heaviest barbells, or

44:44 you carry huge rocks some distance.

44:46 So here's one of those things.

44:48 There's like three judges, a bunch of people who look way over pumped.

44:53 They're all flexing, getting ready.

44:55 The first one is this person carrying a huge rock, sweating clearly.

45:00 And the judges are, they're not super impressed.

45:02 They give a five, a two and a six.

45:03 Then there's another one lifting this, you know, 500 pound barbell over his head.

45:07 Eight, seven, and six is their score.

45:09 And then there's this particularly not overly strong looking person here.

45:14 It says, I don't code.

45:15 I don't use Google when coding.

45:17 Wow.

45:18 So strong.

45:19 The judges give him straight tens.

45:21 And he's, he's also being like really sincere.

45:24 Like his hand over his heart.

45:25 Oh yeah.

45:26 Like it's very humble.

45:28 Yeah, exactly.

45:29 All right.

45:31 Well, that's what I got for you.

45:32 Take it, take it for what you will.

45:34 That's pretty good.

45:35 Just Stack Overflow.

45:36 Yeah.

45:37 Yeah.

45:37 Well, I feel like the Stack Overflow version would take it to 11.

45:41 Honestly, I don't use Stack Overflow now.

45:45 Yeah.

45:45 You're the winner.

45:46 Definitely.

45:48 That's funny.

45:48 Well, thanks for that.

45:50 You're usually pretty good about finding our jokes.

45:53 I appreciate it.

45:54 And thanks for coming on the show.

45:57 Thanks for having me.

45:59 It's fun.

46:00 I think that's a wrap.

46:00 Yeah, that is.

46:01 Thanks, Brian.

46:02 Thank you.

46:03 Bye Vincent.