#255: Closember eve, the cure for Hacktoberfest?


About the show

Sponsored by us:

- Check out the courses over at Talk Python

- And Brian’s book too!

Special guest: Will McGugan

Michael #1: Wrapping C++ with Cython

- By Anton Zhdan-Pushkin

- A small series showcasing the implementation of a Cython wrapper over a C++ library.

- C++ library: yaacrl (Yet Another Audio Recognition Library) is a small Shazam-like library that can recognize songs from a small recorded fragment.

- For Cython to consume yaacrl correctly, we need to “teach” it about the API using `cdef extern`.

- It is convenient to put such declarations in `*.pxd` files.

- One of the first features of Cython that I find extremely useful: aliasing. With aliasing, we can use names like `Storage` or `Fingerprint` for Python classes without shadowing the original C++ classes.

- Implementing a wrapper: pyaacrl

- The most common way to wrap a C++ class is to use extension types. As an extension type is just a C struct, it can have an underlying C++ class as a field and act as a proxy to it.

- Cython documentation has a whole page dedicated to the pitfalls of “Using C++ in Cython.”

- Distribution is hard, but there is a tool that is designed specifically for such needs: scikit-build.

- PyBind11 too
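Cython code needs a build step, so here is a pure-Python analogy of the two ideas above: aliasing and an extension type that owns and proxies an underlying object. The `yaacrl` namespace and its `Storage` methods below are hypothetical stand-ins, not the real library's API. In actual Cython, the alias would be a C name specification along the lines of `cdef cppclass CppStorage "yaacrl::Storage"`, and the proxy would be a `cdef class` holding a pointer.

```python
class yaacrl:
    """Hypothetical stand-in for the C++ `yaacrl` namespace."""

    class Storage:
        """Stand-in for the C++ storage class (method names are made up)."""

        def __init__(self):
            self._fingerprints = {}

        def store(self, name, fingerprint):
            self._fingerprints[name] = fingerprint

        def size(self):
            return len(self._fingerprints)


# Aliasing: refer to the wrapped class by an internal name so the
# public name `Storage` stays free for the Python-facing class.
CppStorage = yaacrl.Storage


class Storage:
    """Python-facing wrapper that owns the wrapped object and forwards calls."""

    def __init__(self):
        # In Cython this would be a `cdef` pointer field, allocated with
        # `new` in __cinit__ and released with `del` in __dealloc__.
        self._this = CppStorage()

    def store(self, name, fingerprint):
        self._this.store(name, fingerprint)

    def __len__(self):
        return self._this.size()


storage = Storage()
storage.store("song.wav", [17, 42, 99])
print(len(storage))  # 1
```

The design point is the same as in the article: Python callers only ever see `Storage`, while every call is forwarded to the wrapped object held as a field.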

Brian #2: tbump: bump software releases

- suggested by Sephi Berry

- limits the manual process of updating a project version

- `tbump init 1.2.2` initializes a `tbump.toml` file with customizable settings; `--pyproject` will append to `pyproject.toml` instead

- `tbump 1.2.3` will:

- patch files wherever the version is listed

- (optional) run configured commands before commit

- failing commands stop the bump.

- commit the changes with a configurable message

- add a version tag

- push code

- push tag

- (optional) run post publish command

- tell you what it’s going to do before it does it (you can opt out of this check)

- pretty much everything is customizable and configurable.
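The ordered steps above can be sketched as a dry-run planner that only builds the list of actions, in the spirit of tbump showing what it will do before doing it. This is a hypothetical illustration: the function name, the default message and tag templates, and the exact git commands are assumptions, not tbump's implementation.

```python
def plan_bump(new_version, before_commit=(), after_push=(),
              message_template="Bump to {new_version}",
              tag_template="v{new_version}"):
    """Return the actions a version bump would take, without running any."""
    fmt = {"new_version": new_version}
    message = message_template.format(**fmt)
    tag = tag_template.format(**fmt)

    steps = ["patch version strings in configured files"]
    # Optional checks; in a real tool a failing command stops the bump.
    steps += [cmd.format(**fmt) for cmd in before_commit]
    steps += [
        f'git commit --all --message "{message}"',
        f'git tag --annotate {tag} --message "{message}"',
        "git push",
        f"git push origin {tag}",
    ]
    # Optional post-publish hooks, e.g. announcing the release.
    steps += [cmd.format(**fmt) for cmd in after_push]
    return steps


for step in plan_bump("1.2.3",
                      before_commit=["grep -q {new_version} Changelog.rst"]):
    print(step)
```

Building the plan as data first makes the "confirm before acting" behavior trivial: print it, ask, then execute.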

I tried this on a flit-based project. It only required one change:

```toml
# For each file to patch, add a [[file]] config
# section containing the path of the file, relative to the
# tbump.toml location.
[[file]]
src = "pytest_srcpaths.py"
search = '__version__ = "{current_version}"'
```

A cool example of a pre-commit check:

```toml
# [[before_commit]]
# name = "check changelog"
# cmd = "grep -q {new_version} Changelog.rst"
```
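Conceptually, the `[[file]]` entry tells tbump which line to rewrite: the `search` template filled in with the current version finds the line, and filled in with the new version produces its replacement. A minimal sketch of that substitution follows; `patch_version` and `changelog_mentions` are hypothetical helpers, not tbump's code, and the second one only mimics the `grep -q` check.

```python
def patch_version(text, search, current_version, new_version):
    """Replace the line matching `search` for current_version with new_version."""
    old = search.format(current_version=current_version)
    new = search.format(current_version=new_version)
    if old not in text:
        # Refuse to bump if the expected version string isn't there.
        raise ValueError(f"{old!r} not found")
    return text.replace(old, new)


def changelog_mentions(changelog_text, new_version):
    """Rough equivalent of `grep -q {new_version} Changelog.rst`."""
    return new_version in changelog_text


source = '__version__ = "1.2.2"\n'
search = '__version__ = "{current_version}"'
print(patch_version(source, search, "1.2.2", "1.2.3"), end="")
# __version__ = "1.2.3"
```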

Will #3: Closember by Matthias Bussonnier

Michael #4: scikit-learn goes 1.0

- via Brian Skinn

- The library has been stable for quite some time, releasing version 1.0 is recognizing that and signalling it to our users.

- Features:

- Keyword and positional arguments - To improve the readability of code written based on scikit-learn, now users have to provide most parameters with their names, as keyword arguments, instead of positional arguments.

- Spline Transformers - One way to add nonlinear terms to a dataset’s feature set is to generate spline basis functions for continuous/numerical features with the new SplineTransformer.

- Quantile Regressor - Quantile regression estimates the median or other quantiles of Y conditional on X

- Feature Names Support - When an estimator is passed a pandas DataFrame during `fit`, the estimator will set a `feature_names_in_` attribute containing the feature names.

- A more flexible plotting API

- Online One-Class SVM

- Histogram-based Gradient Boosting Models are now stable

- Better docs
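In Python, the keyword-argument change is expressed with a bare `*` in a signature: every parameter after it must be passed by name. A minimal sketch using a hypothetical function whose parameter names and defaults borrow from `HistGradientBoostingRegressor` (this is not scikit-learn's code):

```python
def make_booster(loss="squared_error", *, min_samples_leaf=20,
                 l2_regularization=0.0, max_bins=255):
    """Hypothetical factory: only `loss` may be passed positionally."""
    return {
        "loss": loss,
        "min_samples_leaf": min_samples_leaf,
        "l2_regularization": l2_regularization,
        "max_bins": max_bins,
    }


# Readable: each non-default setting is named at the call site.
config = make_booster(max_bins=128)

# Ambiguous positional calls like "20, 0.0, 128" are rejected outright.
try:
    make_booster("squared_error", 20, 0.0, 128)
except TypeError as exc:
    print(exc)
```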

Brian #5: Using devpi as an offline PyPI cache

- Jason R. Coombs

- This is the devpi tutorial I’ve been waiting for.

A single-machine local server mirror of PyPI (the mirror needs to be primed), usable in offline mode.

```
$ pipx install devpi-server
$ devpi-init
$ devpi-server
```

Now, in another window, prime the cache by grabbing whatever you need, with the index redirected:

```
(venv) $ export PIP_INDEX_URL=http://localhost:3141/root/pypi/
(venv) $ pip install pytest, ...
```

Then you can restart the server anytime, even offline:

```
$ devpi-server --offline
```

The tutorial includes examples, proving how simple this is.
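If you drive installs from a Python script rather than an interactive shell, the same redirection can be passed through the environment. A small sketch, assuming the devpi-server address used in the tutorial; `pip_install_command` is a hypothetical helper:

```python
import os
import sys

DEVPI_INDEX = "http://localhost:3141/root/pypi/"


def pip_install_command(packages, index_url=DEVPI_INDEX):
    """Build a pip command plus an environment that points pip at devpi."""
    env = dict(os.environ)
    env["PIP_INDEX_URL"] = index_url  # same variable as the shell export
    cmd = [sys.executable, "-m", "pip", "install", *packages]
    return cmd, env
```

With devpi-server running (online, or started with `--offline` once the cache is primed), `subprocess.run(cmd, env=env, check=True)` performs the install against the local mirror.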

Will #6: PyPI command line

Extras

Brian:

- I’ve started using pyenv on my Mac just for downloading Python versions. The verdict is still out on whether I like it better than just downloading from python.org.

- Also started using Starship with no customizations so far. I’d like to hear from people if they have nice Starship customizations I should try.

- vscode.dev is a thing, announcement just today

Michael:

- PyCascades Call for Proposals is currently open

- Got your M1 Max?

- Prediction: Tools like Crossover for Windows apps will become more of a thing.

Will:

- GIL removal

- vscode.dev

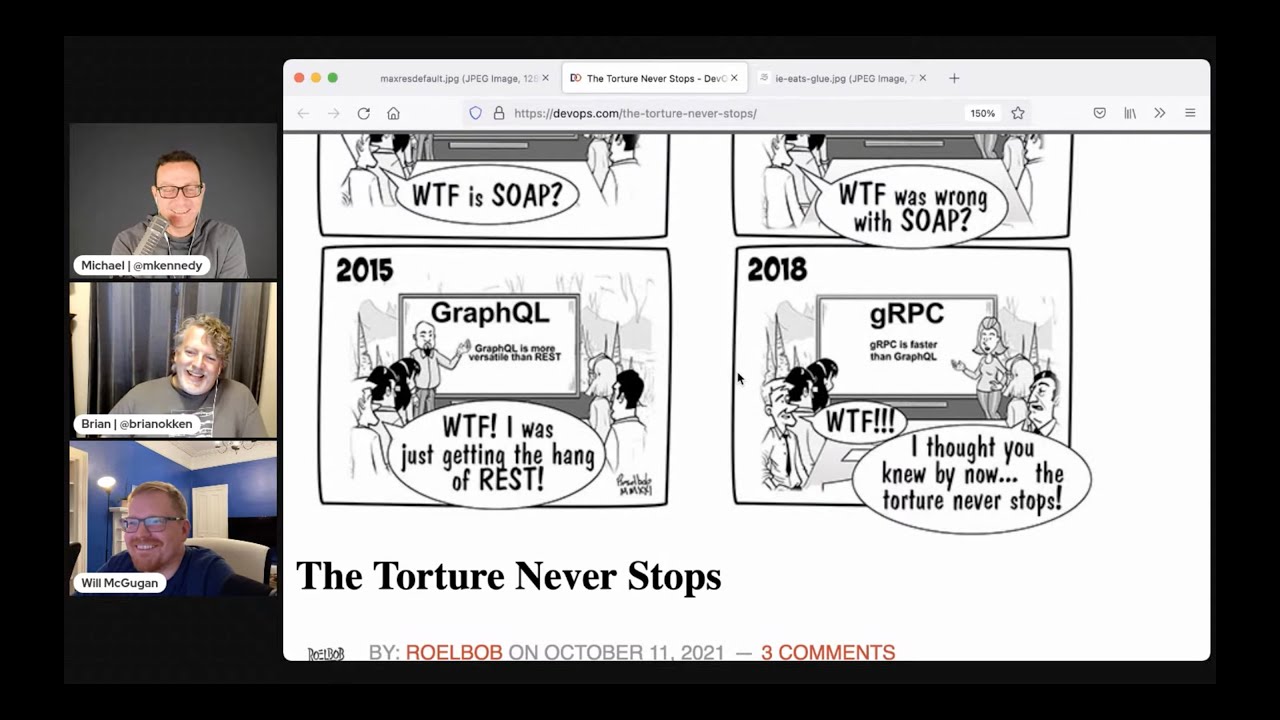

Joke:

Episode Transcript


00:00 Hey there, thanks for listening. Before we jump into this episode, I just want to remind you

00:03 that this episode is brought to you by us over at Talk Python Training and Brian through his pytest

00:09 book. So if you want to get hands-on and learn something with Python, be sure to consider our

00:15 courses over at Talk Python Training. Visit them via pythonbytes.fm/courses. And if you're

00:22 looking to do testing and get better with pytest, check out Brian's book at pythonbytes.fm slash

00:27 pytest. Enjoy the episode. Hello and welcome to Python Bytes, where we deliver Python news and

00:33 headlines directly to your earbuds. It's episode 255, recorded October 20th, 2021. I'm Brian Okken.

00:41 I'm Michael Kennedy. And I'm Will McGugan. Welcome, Will. Thank you. Good to be here. I'm sure people

00:46 know who you are through all you do with Textual and Rich. Could you do a quick intro? Sure, yeah. I'm

00:55 a software developer from Edinburgh, Scotland. For the last couple of years, I've been working quite heavily in

01:00 open source. I built Rich and started work on Textual, which is an application framework using

01:08 Rich. And I'm currently working exclusively on that. So I've taken a year off, probably more than that,

01:16 to work on open source projects. I'm very excited about that. We're excited about it too.

01:22 Yeah, that's fantastic, Will. I think we've talked about this offline as well, the success you're

01:29 having with Rich and Textual and this opportunity you have to really just double down on this project

01:35 you created. And I know there must be thousands of maintainers of projects out there thinking, if I could just

01:39 put all my energy into this, and you're currently lucky enough to be in that situation, right? That's

01:44 fantastic. Yeah, I'm very fortunate, actually. I mean, I put some money aside. I planned for this

01:51 year. But things are really looking up. And I've been blown away by the level of interest in it.

01:58 I mean, it gradually ramped up with Rich. People like that. I think there was a missing niche or

02:05 something which did that. But then with the Textual, people were excited about it. I mean, I put a disclaimer

02:12 on the ReadMe that said it's not quite ready for prime time yet. It might break and it's in active

02:18 development, but it doesn't seem to discourage anyone. They're very busy building things with it. So I'm excited.

02:26 I want to take it to the next level. And to be honest, if I was doing it part-time like I was doing Rich,

02:33 it would just take too long. If it was evening and weekends, it would be two years before it was like

02:41 1.0. Yeah, and we're ready to use it now. So yeah, most people want to use it yesterday.

02:47 Congrats again on that. That's cool. It's great stuff. You know, we've talked about it over on Talk Python

02:54 and people want to dive in. We've certainly covered it many times over here as well. So we're happy to

02:59 spread the word on it. Yeah, Michael, let's kick off the topics.

03:02 I do want to kick it off. All right. How about we start with some awesome Python topic like C++?

03:07 I like both of them.

03:09 This is right in your wheelhouse, Brian. A lot of C++. So I want to talk about this tutorial article

03:16 series, however you want to think about it, of wrapping C++ code with Cython. So the interoperability

03:23 story with C and Python being CPython as the runtime is pretty straightforward, right? But C++ is a

03:30 little more interesting with classes and this pointers and all those kinds of things. So the

03:35 basic idea is Cython is this thing that allows us to write very nearly Python code and sometimes

03:44 actually just Python code, sometimes like in a little extended language of Python that then compiles down

03:50 to C. And if that's the case, well, it's probably pretty easy to get that Cython code to work with C code.

03:57 And then Cython naturally is exposed as Python objects and variables and whatnot. So that should

04:04 be a good bridge between C++ and Python, right? And it turns out it is. So this person, Anton Zhdan-Pushkin,

04:11 wrote an article, or is working on a series of articles, called Wrapping C++ with Cython.

04:17 And so there's this library called yaacrl, Yet Another Audio Recognition Library. And it's kind

04:23 of like Shazam. It'll, you give it a small fragment of audio and it'll say, oh, that's Pearl Jam black,

04:29 you know, black by Pearl Jam or something like that. Right. Pretty cool. And if you look at it,

04:34 it's got some neat C++ features, you know, Brian, feel free to jump in on this, but see that right there?

04:40 Namespace. So cool. I love how they're writing like well-structured C++ code here. But basically,

04:48 there's a couple of structures like a WAV file and an MP3 file and then classes, which have like a

04:53 fingerprint and public methods and storage and so on. And so the idea is how could we take this and

04:58 potentially make this a Python library, right? Basically create a Python wrapper with Cython for it.

05:04 So you're going to come down here and says, all right, well, what we're going to do is we're going

05:07 to write some Cython code and Cython doesn't immediately know how to take a C++ header file,

05:16 which is where stuff is defined in C++ and turn that into things that Python understands. So you've

05:21 got to write basically a little file, a .pxd file, that declares what the interface looks like. So you write

05:29 code like this. Have you done this stuff before, Brian?

05:31 No, but this looks pretty straightforward.

05:34 Yeah, it's pretty straightforward. How about you, Will?

05:36 I've never wrapped a library, but I've used Cython quite successfully. So it's a really good system.

05:41 Yeah, yeah, I agree. I've done it, but not to wrap C++ code.

05:44 No.

05:44 So basically you do things like cdef extern from this header, create a namespace,

05:49 and then you have cdef with the keyword cppclass. And then you get, what's interesting about this is

05:56 you get to give it two names. And you get to say, here's the name, I want to talk about it in Python.

06:02 So CPP wave file. And then here's its name in C++, which is yaacrl colon colon wave file.

06:09 And the value of this is they want to have a thing called wave file in Python, not the C++ one but a friendly Python one, yet it needs to use the wave file from the C++ library.

06:19 So if you directly import it, then there's like this name clash, which I suppose you could fix with namespaces and all.

06:24 But I think it's cool that you can give it this name, this kind of this internal name and off it goes.

06:29 Right. So then you def out its methods, basically like just here are the functions of the class.

06:36 Same thing for the fingerprint and the storage and off it goes.

06:39 And so all of this stuff is pretty neat.

06:41 And yeah, this thing I'm talking about is called aliasing, which is pretty awesome.

06:47 Like it lets you reserve the name wave file and storage and fingerprint and stuff like that for your Python library without clashing,

06:56 even though those are the C++ names as well.

06:58 So yeah, pretty straightforward.

07:00 What was the next thing I really want to highlight?

07:02 There's kind of this long article here.

07:03 So the next thing they talk about is using this thing called extension types.

07:08 So an extension type is just a C struct, and you create a class that acts as a kind of proxy to the C++ class.

07:17 So here we say cdef class Storage, and then internally (in Cython you have to say cdef for this)

07:26 it has a pointer to the C++ class, called this.

07:28 And then from then on, you just go and write standard Python code.

07:32 And anytime you need to talk to the C library, you just work with this like inner pointer thing that you've created, which is pretty awesome.

07:40 You just new one up in the constructor, the C++ thing.

07:43 And then like it goes off to Python's memory management.

07:47 So you don't have to worry about deleting it, stuff like that.

07:50 I guess you do have to sort of deallocate here, but that's, you know, once you write that code, then Python will just take it from there.

07:56 Right.

07:56 So pretty neat, a way to do this.

07:58 And the library goes on to talk about how you use it and so on.

08:01 So there's a couple of interesting things about like dereferencing the pointer, like basically modeling reference types in Python.

08:08 But if you've got a C++ library that you want to integrate here, I think this is a pretty cool hands-on way to use Cython.

08:15 Yeah, I think this looks fun.

08:17 I'd like to give it a try.

08:18 Yeah, definitely.

08:19 Another one is pybind11.

08:21 That might also be another option to look at.

08:24 So I saw Henry out in the live stream there.

08:27 So here's another way to operate seamlessly between C++11 and Python.

08:32 So another option in this realm.

08:36 Maybe I'll throw that link into the show notes as well.

08:39 But yeah, a lot of cool stuff for taking these libraries written in C++ and turning them into Python-friendly, feeling Python-native libraries.

08:47 Well, you know, that's really how a lot of Python's taken off, right, is because we've been able to take these super powerful C++ libraries and wrap a Python interface around them and have them stay up to date.

08:59 When you make updates to the C++ code, you can get updates to the Python.

09:04 So you sometimes hear Python described as a glue language.

09:08 I think many years ago, that's probably what it was.

09:12 I think Python's growing.

09:14 It's more than just a glue language, but it's very good at connecting other languages together.

09:19 It's still good as a glue language, though.

09:21 Yeah, it's not just a glue language.

09:23 It's a language of its own, I guess.

09:26 Yeah.

09:26 I was talking to somebody over on Talk Python, and I'm super sorry.

09:30 I forgot which conversation this was, but they described Python as a glue language for web development.

09:37 I thought, okay, that's kind of a weird way to think of it.

09:39 But they

09:40 said, well, no, no, look, here's what you do with your web framework.

09:42 You glue things together.

09:43 You glue your database over to your network response.

09:47 You glue an API call into that.

09:50 I'm like, actually, that kind of is what a website is.

09:53 It talks to databases.

09:54 It talks to external APIs.

09:56 It talks to the network in terms of HTML responses.

09:59 And that's the entire web framework.

10:01 But yeah, you can kind of even think of those things in those terms there.

10:04 It's like a party where no one's talking to each other.

10:07 And you need someone to start conversations.

10:10 It's what Python does.

10:12 Yeah, yeah.

10:13 And I think also that that's why Python is so fast for web frameworks.

10:17 Even though computationally, it's not super fast.

10:20 Like it's mostly spending a little time in its own code.

10:23 But a lot of time, it's like, oh, I'm waiting on the database.

10:25 I'm waiting on the network.

10:26 I'm waiting on an API.

10:27 And that's where web apps spend their time anyway.

10:30 So it doesn't matter.

10:31 All right.

10:32 Brian, you want to grab the next one?

10:33 Yeah, sure.

10:34 Bump it on to topic two.

10:36 Bump it on.

10:37 So I've got, I just have a few packages that I support on PyPI.

10:43 And then a whole bunch of internal packages that I work on.

10:46 And one of the things that is a checklist that I've got is what do I do when I bump the version?

10:51 And I know that there have been some automated tools before.

10:55 But they've kind of, I don't know, they make too many assumptions, I think, about how you structure your code.

11:02 So I was really happy to see tbump come by.

11:05 This was suggested by Sephi Berry.

11:09 But so tbump is an open source package that was developed.

11:13 Looks like it was developed in-house by somebody.

11:16 But then their employer said, hey, go for it.

11:18 Open source it.

11:18 So that's cool.

11:19 And the idea really is you just, it's just to bump versions.

11:26 And that's it.

11:26 But it does a whole bunch of cool stuff.

11:28 It does.

11:29 So let's say I've got to initialize it.

11:31 So you initialize it, and it creates a little TOML file that stores the configuration.

11:36 But if you don't want yet another TOML file or another configuration, it can also append that to the pyproject.toml.

11:44 That was a nice addition.

11:45 You can combine them or keep it separate up to you.

11:48 And so, for instance, I tried it on one of my projects.

11:51 And I kept it separate because I didn't want to muck up my pyproject.toml file.

11:56 But once you initialize it, all you have to do when you want to bump to a new version is just say tbump and then give it the new version.

12:05 It doesn't automatically count up.

12:08 I mean, you could probably write a wrapper that counts up.

12:10 But looking at your own version and deciding what the new one should be,

12:14 that's a reasonable way to do it.

12:16 And then it goes out and it patches any versions you've got.

12:21 And then in your code, in your code base or your files or config files or wherever.

12:26 And then it commits those changes.

12:31 It adds a version tag, pushes your code, pushes the version tag.

12:34 And then also you could have these optional run things, places where, like, before you commit, you can run some stuff.

12:40 Like, for instance, check to make sure that you've added that version to your changelog or your, if you want to check your documentation.

12:48 So that's pretty cool.

12:48 And then also you can have post actions.

12:50 If you wanted to, I was thinking a post action would be cool.

12:54 You could just automatically tweet out, hey, a new version is here.

12:57 Somehow hook that up.

12:58 That'd be fun.

12:59 Yeah.

12:59 Grab the first line out of the release notes and just tweet that.

13:02 Yeah.

13:03 And then the hard part, really, is how does it know where to change the version?

13:09 And that's where part of the configuration, I think, is really pretty cool.

13:14 It just has this file configuration setting, if I can find it on here, that you list the source.

13:22 And then you can also list, like, the configuration of it.

13:27 Let me grab one.

13:28 So, like, the source and then how to look for it.

13:34 So, like, it's a search string or something of what line to look for and then where to replace the version.

13:39 And that's pretty straightforward.

13:41 I mean, you kind of have to do some hand tweaking to get this to work.

13:44 But, for instance, it's just a couple lines.

13:47 It makes it pretty nice.

13:48 At first, I thought, well, it's not that much work anyway.

13:51 But it's way less work now.

13:53 And then, frankly, I usually forget.

13:55 I'll remember to push the version.

13:56 But I'll forget to make sure that the version's in the changelog.

14:00 I'll forget to push the tags to GitHub because I don't really use the tags, the version tags in GitHub.

14:08 But I know other people do.

14:09 Yeah, that's nice.

14:10 You know, Will, what do you think as someone who ships libraries frequently that matter?

14:14 I think it's useful.

14:16 I think for my libraries, I've got the version in two places, two files.

14:21 So, for me, it's like edit two files and I'm done.

14:25 Probably wouldn't be like massive time saver.

14:29 But I like the other things you can do with it, the actions you can attach to it.

14:34 Like creating a tag in GitHub.

14:37 So, I do often, quite often forget that.

14:40 Especially for like minor releases.

14:42 I sometimes forget that.

14:44 So, that's quite useful.

14:45 Yeah, it's the extra stuff.

14:46 It's not just changing the files.

14:47 But like Brian described, like creating a branch, creating a tag, pushing all that stuff over,

14:52 making sure they're in sync.

14:53 That's pretty cool.

14:53 Yeah.

14:54 Yeah, good find.

14:55 This does more than I expected when I saw the title.

14:57 What do we go next?

14:58 Will.

14:59 Yeah, okay.

15:00 It goes off on your first one.

15:00 This is Closember, which is a, what's the word?

15:06 A portmanteau is when you put two words together.

15:08 November and close.

15:11 The idea is to help open source maintainers close issues and close PRs.

15:18 So, is this like to recover from the hangover of Hacktober?

15:21 Hacktober.

15:22 I think so.

15:23 I didn't do Hacktober this year.

15:26 I didn't either, no.

15:27 No.

15:28 Last year, I mean, I got a lot of PRs coming in.

15:32 Some of them are of dubious quality.

15:35 Some of them just, some of them are very good, actually.

15:40 I did actually benefit a lot, but it does actually generate extra work.

15:45 If you manage it, it's really great.

15:48 But this is, it generates more work for you, even though it's in your benefit.

15:52 But Closember is purely to take work away from you, work away from maintainers.

15:59 You know, there's lots of issues.

16:00 I mean, I've been very busy lately and not kept an eye on the Rich issues, and they've just piled up.

16:07 Some of them can be closed with a little bit of effort.

16:10 So, I think that's what this project, which is more of a movement than a project, is designed to do.

16:15 It's designed to take away some of that burden from maintainers.

16:21 And it's a very nice website here.

16:24 There's a leaderboard on different issues.

16:28 And it describes what you should do to close issues and PRs.

16:34 The author, his name is Matthias Bussonnier.

16:38 I've probably mispronounced that.

16:40 He started this, and I think it's going to turn into a movement.

16:45 Possibly it's too soon to really get big this year.

16:52 But I'm hoping that next year, it'll be a big thing.

16:54 After Hacktober, you can relax a bit because you'll get lots of people coming in to, like, fix your issues and clear some PRs and things like that.

17:06 I mean, sometimes it's maintenance.

17:08 It's just tidying up, closing PRs, which have been merged, and closing issues, which have been fixed, that kind of thing.

17:17 So, I think it's a great thing.

17:18 I guess I don't quite get what it is.

17:20 Is it a call out to people to help maintainers?

17:24 Yeah.

17:24 Yeah.

17:25 It's like a month-long thing, and it's almost like a competition that they've given.

17:29 Yeah, they've got a leaderboard, right?

17:31 Yeah.

17:32 Yeah.

17:32 Yeah.

17:33 Matthias is a core developer of Jupyter and IPython, so he's definitely working on some of the main projects there.

17:40 Yeah.

17:40 He probably understands the burden of being an open source maintainer.

17:45 Even if you love something, it can be hard work.

17:51 Too much of a good thing, right?

17:53 But no T-shirt for this, at least not this year.

17:56 I don't think they offer T-shirts.

17:57 No, maybe next year.

17:59 I wonder if you can add your project to this.

18:01 I think you can tag your project with Closember.

18:06 I think that's how it works.

18:07 And then other people can search for it and decide which one they want to help with.

18:13 All right.

18:13 That's pretty cool.

18:14 So another Brian, Brian Skinn, sent this over.

18:17 Thank you, Brian.

18:18 He's been sending in a ton of stuff our way lately, and we really appreciate it.

18:20 Yeah.

18:21 Keep it coming.

18:21 So this one is, the announcement is that scikit-learn goes 1.0.

18:27 And if you look at the version history, it's been zero for, zero-ver for a long time with being, you know, 0.20, 0.21, 0.22.

18:36 So this release is really a realization that the library has been super stable for a long time.

18:44 But here's a signal to everyone consuming scikit-learn that, in fact, we intended, they intended to be stable, right?

18:52 So there's certain groups and organizations that just perceive zero-ver stuff as not finished,

18:59 especially in the enterprise space, in the places that are not typically working in open source as much,

19:05 but are bringing these libraries in.

19:07 You can see managers, like, we can't use scikit-learn.

19:09 It's not even done.

19:10 0.24.

19:11 Come on.

19:12 All right.

19:12 So this sort of closes that gap as well, signals that the API is pretty stable.

19:17 Will, Textual is not quite ready for this, is it yet?

19:22 No, it's still on zero because I'm kind of advertising that I might change a signature next version and break your code.

19:30 Never do that lightly, but it's always a possibility.

19:34 So if you use a zero-point version bit of anything, you should probably pin that and just make sure that if there's an update that you check your code.

19:43 Right.

19:44 As a consumer of Rich or a consumer of Blask or a consumer of whatever, if you're using a zero-ver, you're recommending you pin that in your application or library that uses it, right?

19:53 Yeah, exactly.

19:55 I mean, you might want to pin anyway just to, you know, lots of bits of software working together.

20:00 There could be problems with one update here that breaks this bit of software here.

20:06 But when you've got 1.0, the library developer is telling you, I'm not going to break backwards compatibility without bumping that major version number.

20:16 That's if they're using SemVer, because there are lots of other versioning schemes with their own pros and cons.

20:23 Yeah, like calendar-based versioning and stuff like that, right?

20:26 Yeah.

20:27 Yeah.

20:27 I think that makes more sense in an application than it does in a library.

20:31 Calendar versioning.

20:33 I think it might do, actually.

20:35 How much calendar versioning makes sense for libraries?

20:38 Maybe it does.

20:38 I don't know.

20:39 I think some projects that have shifted to CalVer have recognized that they almost never break backwards compatibility.

20:47 So they're never going to go to a higher major number.

20:53 Yeah.

20:53 It's strange that there's no one perfect system.

20:56 I quite like SemVer; by and large, it does what I need of it.

21:01 But there is no perfect system, really.

21:03 Yeah, I like it as well.

21:05 Just the whole ZeroVer thing, where something is on version zero dot something for 15 years.

21:10 Like that doesn't make sense.

21:11 Yeah.
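The pinning advice in this exchange boils down to a compatibility rule. Here's a sketch, assuming plain `MAJOR.MINOR.PATCH` version strings; `is_compatible` is a hypothetical helper, and real projects would normally express this with a version specifier in their requirements instead.

```python
def parse(version):
    """Turn 'MAJOR.MINOR.PATCH' into a tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def is_compatible(tested, candidate):
    """Is `candidate` expected to be safe if you tested against `tested`?

    From 1.0 on, SemVer promises no breaking changes without a major
    bump. SemVer makes no promises for 0.x ("ZeroVer"); a common
    convention treats any minor bump there as potentially breaking,
    so this sketch only accepts patch releases of the same 0.MINOR.
    """
    t, c = parse(tested), parse(candidate)
    if c < t:
        return False
    if t[0] == 0:
        return c[:2] == t[:2]
    return c[0] == t[0]


print(is_compatible("0.24.1", "0.25.0"))  # False
```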

21:12 All right.

21:12 So as we're talking about the 1.0 release of scikit-learn, let me give a quick shout out to some of the new features or some of the features they're highlighting.

21:20 So it exposes many functions and methods which take lots of parameters, like HistGradientBoostingRegressor.

21:27 Use that all the time.

21:28 No, not really.

21:29 But it takes, I don't know, was that 15 parameters?

21:32 Like 20, zero, 255, none, none, false.

21:36 What?

21:36 Like what are these, right?

21:38 And so a lot of these are moving to require you to explicitly say min_samples_leaf is 20.

21:45 l2_regularization is zero.

21:48 max_bins is 255.

21:48 Like keyword arguments to make it more readable and clear.

21:51 I like to make virtually all my arguments keyword only.

21:56 I might have one or two positional arguments, but the rest, keyword only.

22:00 I think it makes code more descriptive.

22:03 You can look at that code and then you know at a glance what this argument does.

22:08 Yeah, absolutely.

22:09 Yeah, it drives me nuts when there's like, I want all the defaults except for like something special at the last one.

22:16 And so I've got to like fill in all of them just to hit that.

22:19 And also I would love to throw out that this is way better than **kwargs.

22:24 Way better, right?

22:26 If you've got 10 optional parameters that have maybe defaults or don't need to have a specified value, make them keyword arguments.

22:32 It means that the tooling like PyCharm and VS Code will show you autocomplete for these.

22:38 I mean, if it's truly open-ended and you don't know what could be passed, **kwargs.

22:43 But if you do know what could be passed, something like this is way better as well.

22:47 Much more explicit.

22:49 Yeah, you have to type more.

22:50 If you've got like a signature which takes the same parameter or something else, you just have to type it all over again.

22:56 It can be a bit tedious.

22:57 But it's very beneficial, I think, for the tooling, like you said.

23:01 Indeed.

23:02 Also for typing, right?

23:04 You can say that this keyword argument thing is an integer and that one's a string, right?

23:08 And if it's **kwargs, you're just any, any.

23:10 Great.

23:11 Okay.

23:11 Or string any.

23:12 Okay.
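The tooling and typing point can be checked with the standard library: explicit keyword-only parameters show up by name in the signature and the type hints, while `**kwargs` collapses into one opaque entry. Both functions are hypothetical examples.

```python
import inspect
from typing import Any, get_type_hints


def render_loose(text: str, **kwargs: Any) -> str:
    # Editors and type checkers can't tell which keys are valid here.
    width = kwargs.get("width", 80)
    return text.center(width)


def render_explicit(text: str, *, width: int = 80, bold: bool = False) -> str:
    # Every option is named and typed, so autocomplete can offer it.
    styled = f"[b]{text}[/b]" if bold else text
    return styled.center(width)


print(list(inspect.signature(render_loose).parameters))
# ['text', 'kwargs']
print(list(inspect.signature(render_explicit).parameters))
# ['text', 'width', 'bold']
print(get_type_hints(render_explicit))
```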

23:13 So we also have new spline transformers.

23:15 So you can generate spline basis functions for your features, which is cool.

23:20 There's a new quantile regressor.

23:23 Feature name support.

23:25 So when an estimator is passed a pandas DataFrame during fit, the estimator will set a feature_names_in_ attribute containing the feature names.

23:33 Right?

23:34 So that's pretty cool.

23:34 Different examples of that.

23:36 A more flexible plotting API.

23:38 Online one class SVM for all sorts of cool graphs.

23:42 Histogram based gradient boosting models are stable and new documentation.

23:46 And of course you can launch it in a binder and play with it, which is pretty sweet.

23:50 Congrats to the scikit-learn folks.

23:52 That's very nice.

23:53 And also kind of interesting to get your take on API changes and versioning and stuff, Will.
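As a rough illustration of what the new quantile regressor optimizes: quantile regression minimizes the pinball (quantile) loss, and for a model that predicts a single constant, the minimizer is a sample quantile. The stdlib-only sketch below brute-forces over the observed values; it's a teaching aid, not how scikit-learn's `QuantileRegressor` solves the problem.

```python
def pinball_loss(ys, prediction, tau):
    """Average quantile (pinball) loss of a constant prediction."""
    total = 0.0
    for y in ys:
        residual = y - prediction
        # Under-predictions cost tau per unit, over-predictions (1 - tau).
        total += max(tau * residual, (tau - 1) * residual)
    return total / len(ys)


def best_constant(ys, tau):
    """Pick the observed value with the lowest pinball loss."""
    return min(ys, key=lambda c: pinball_loss(ys, c, tau))


data = [1, 2, 3, 4, 100]
print(best_constant(data, 0.5))  # 3, the median
print(best_constant(data, 0.9))  # 100, the upper tail
```

Note how the median ignores the outlier at 100, which is one reason quantile regression is attractive for skewed data.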

23:59 Oh, before we move on, Brian, I saw a quick question that maybe makes sense to throw over to Will from Anu.

24:04 Don't go.

24:04 Everybody keeps asking this.

24:07 So I've ordered a Windows laptop.

24:09 For everyone listening, the question is when will there be Windows support for Textual?

24:12 Yeah.

24:13 I've ordered a Windows laptop.

24:15 I've been working on a VM, but it's a pain to work on a VM.

24:19 I've ordered a Windows laptop and that's going to arrive at the end of this month.

24:22 And I don't know exactly when, but I'll definitely need that to get started.

24:29 And in theory, it should only be a week or two of work.

24:33 So how about I say this year?

24:36 This year.

24:36 After the month of configuring your laptop.

24:39 That's true.

24:40 That's true.

24:41 I haven't used Windows in I don't know how long apart from a VM.

24:44 I need to test it with a new Windows terminal, which is actually really, really good.

24:49 Yeah.

24:50 The Windows terminal is good.

24:51 Yeah.

24:52 I think it can be like a first class, like textual platform.

24:57 The Mac works great.

25:00 Linux works great.

25:01 Windows has always been like a bit of a black sheep.

25:03 But the new Windows terminal is a godsend because the old terminal was frankly terrible.

25:09 It hadn't been updated in decades.

25:10 Yeah.

25:11 The old school one is no good.

25:13 But the new Windows terminal is really good.

25:14 Also, just a quick shout out for some support here.

25:18 Nice comment from Shar.

25:19 Windows support will be provided when you click the pink button on Will's GitHub profile,

25:24 aka the sponsor button.

25:25 It's not a ransom, I promise.

25:29 I do intend to do it.

25:31 All right.

25:33 How about some server stuff?

25:35 We've talked, I can't remember, I think several times, about how to

25:40 develop packages while you're offline.

25:42 Let's say you're on an airplane or at the beach or something with no Wi-Fi.

25:47 I mean, maybe there's Wi-Fi at the beach, but not at the beaches I go to.

25:51 That's because you live in Oregon and some of the most rural parts are the beach.

25:57 If this was California, you'd have 5G.

25:58 Yeah.

25:59 Well, I mean, I could tether my phone to it or something.

26:01 But anyway, Jason Coombs sent over an article on using devpi as an offline PyPI cache.

26:10 And I've got to tell you, to be honest, I don't know if it's just the documentation for devpi

26:15 or the other tutorials.

26:17 It just threw out a few commands and they're like, you're good.

26:22 That'll work.

26:23 And I just never got it.

26:25 I've tried and it just didn't work for me.

26:27 But this did.

26:28 So this tutorial is just straightforward: okay, we're going to walk you through

26:32 exactly everything you do.

26:34 It's really not that much.

26:36 For instance, he suggests using pipx to install devpi-server, which is nice.

26:42 The tbump package also suggested installing itself with pipx.

26:47 pipx is gaining a lot of momentum.

26:49 Especially for things like tbump or devpi.

26:55 I don't know if I'd do it with tbump, because I want other package maintainers to be able to

26:59 use it too.

26:59 But anyway, this is definitely something you're just using on your own machine.

27:02 So why not let it sit there?

27:05 And then you install it, you init it, and it creates some stuff.

27:10 I don't know what it does when you init it.

27:12 But then, hidden in here, you also run devpi-server.

27:18 So it really is just a few commands and you get a server running.

27:21 But there's nothing in it.

27:23 There's no cache in it yet.

27:25 So then you have to go somewhere else and prime it.

27:31 So you've got a localhost URL that it reports.

27:35 So you can export that as your pip index and then just create a virtual environment and start

27:40 installing stuff.

27:41 That's all you got to do.

27:42 And now it's all primed.

27:44 Then, next time you don't have any Wi-Fi,

27:48 you can run devpi-server in, where is it?

27:56 Offline mode.

27:58 And then there you have it.

28:00 You've got a cache of everything you need.
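For reference, the offline-cache workflow described above can be sketched in Python. This is a minimal sketch, not the tutorial's own code, and it rests on assumptions about devpi-server's defaults: the server listens on `http://localhost:3141`, the PyPI mirror index is `root/pypi`, the one-time setup command is `devpi-init`, and offline serving uses `devpi-server --offline-mode`. pip reading its index from the `PIP_INDEX_URL` environment variable is standard pip behavior. Check `devpi-server --help` for your version before relying on the rest.

```python
import os

# Assumption: devpi-server's default bind address and port.
DEVPI_HOST = "http://localhost:3141"
# Assumption: "root/pypi" is the default PyPI mirror index devpi creates.
DEVPI_INDEX = "root/pypi"


def devpi_index_url(host: str = DEVPI_HOST, index: str = DEVPI_INDEX) -> str:
    """Return the pip-compatible "simple" URL for a devpi index."""
    return f"{host.rstrip('/')}/{index.strip('/')}/+simple/"


def pip_env(index_url: str) -> dict:
    """Copy the current environment and point pip at the given index."""
    env = dict(os.environ)
    env["PIP_INDEX_URL"] = index_url  # pip reads this as --index-url
    return env


# The workflow from the episode as shell commands (printed, not executed).
# Assumption: command names and flags as in recent devpi-server releases.
WORKFLOW = [
    "pipx install devpi-server",    # install the server in its own venv
    "devpi-init",                   # one-time setup of the server state
    "devpi-server",                 # run while online to prime the cache
    f"export PIP_INDEX_URL={devpi_index_url()}",
    "pip install pytest",           # anything installed now gets cached
    "devpi-server --offline-mode",  # later: serve only what is cached
]

if __name__ == "__main__":
    for cmd in WORKFLOW:
        print(cmd)
```

For example, `subprocess.run([sys.executable, "-m", "pip", "install", "pytest"], env=pip_env(devpi_index_url()))` would install through the local cache. A team sharing one server on the network would pass that machine's hostname instead of `localhost`.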

28:03 So I tried this out, just installing pytest for my plugins,

28:08 and then I set it to offline mode, and installing all the normal

28:14 stuff I'd just installed worked fine into a new virtual environment.

28:18 But then when I tried to install something like requests that I didn't have yet,

28:22 or something else, it just said, oh, I can't find it, or something.

28:27 It's a happy failure.

28:29 So anyway, this tutorial worked great.

28:31 I know devpi can do a whole bunch of other stuff, but I don't need it to do a whole bunch

28:36 of stuff myself.

28:36 I just need it to be a PyPI cache.

28:39 Yeah, this is really neat.

28:40 The init looks like it creates the database schema as well as letting you set

28:48 up a user.

28:48 Okay.

28:49 I guess you could set it up with some authentication so that no one can mess with it and stuff like

28:53 that.

28:54 Apparently this works just fine for teams.

28:57 So you can set up a server on just a computer in your

29:02 network that just runs as a cache.

29:05 And then everybody can point to the same one.

29:08 So, I mean, that would work as a really quick and not too dirty,

29:13 fairly quick way for a local team to have a caching server.

29:17 I'd probably even think about doing this for testing, even on one machine, so that

29:23 you can completely clean out your environments and still

29:27 run a test machine and not hit the network so much,

29:29 if you're pulling a lot of different stuff.

29:32 Henry Schreiner out in the live stream says, can we also mention that Jason, the author of the article

29:36 we were just talking about, also maintains 148 libraries on PyPI, including setuptools.

29:41 Oh, that's awesome.

29:42 So he may know something about interacting with PyPI.

29:47 That's phenomenal.

29:49 I don't know how he finds the time to be honest.

29:51 148 packages.

29:52 He needs Closember.

29:54 Yeah.

29:55 He needs a lot of Closember.

29:56 Awesome.

29:58 All right, well, what's this last one you've got for us here?

30:00 Sure.

30:01 So I found this project on Reddit.

30:05 It's called PyPI Command Line.

30:07 And I noticed it in particular because it uses Rich, but it is a pretty cool project.

30:12 It's notable because the author is 14 years old.

30:17 That blew me away.

30:19 To be that young, and he's done a very good job of it.

30:22 So it's an interface to PyPI from the command line.

30:25 You can do things like get the top 10 packages.

30:30 You can search for packages.

30:33 Here's, I think that's a search: pypi search rich.

30:41 And that's given all the packages that have got rich in the name, with a description,

30:45 everything, and the date.

30:46 And here you can do pypi info django.

30:50 That gives you some nice information about the Django package, which it pulls from PyPI.

30:55 Like the GitHub stars, the download traffic, what it depends upon, meta information like

31:01 its license and who owns it.
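The `pypi info django` output described above is built from package metadata. As a rough sketch of where such data can come from (an assumption for illustration, not how PyPI Command Line itself is implemented), PyPI's public JSON API at `https://pypi.org/pypi/<project>/json` returns an `info` object with fields such as `version`, `summary`, `license`, `author`, and `requires_dist`. GitHub stars and download statistics are not reliably part of that payload, so a tool that shows them has to query other services. The helper names `fetch_package` and `summarize` are hypothetical.

```python
import json
import urllib.request

# PyPI's JSON API endpoint for a single project.
PYPI_JSON = "https://pypi.org/pypi/{name}/json"


def fetch_package(name: str) -> dict:
    """Download the PyPI JSON metadata for a project (needs network)."""
    with urllib.request.urlopen(PYPI_JSON.format(name=name), timeout=10) as resp:
        return json.load(resp)


def summarize(data: dict) -> dict:
    """Pull a few "info"-style fields out of the JSON payload."""
    info = data.get("info", {})
    return {
        "name": info.get("name"),
        "version": info.get("version"),
        "summary": info.get("summary"),
        "license": info.get("license"),
        "author": info.get("author"),
        # requires_dist can be null in the API, so fall back to an empty list
        "dependencies": info.get("requires_dist") or [],
    }
```

With network access, `summarize(fetch_package("django"))` returns a small dict that could then be printed as a table; rendering the long `description` field as Markdown in the terminal is the kind of job Rich handles.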

31:03 This is really cool.

31:04 Yeah.

31:04 It's really nice.

31:05 Here we have the description, and it renders the Markdown right

31:10 in the terminal.

31:11 I wonder how it does that.

31:13 I couldn't hazard a guess.

31:16 It's got to use Rich, right?

31:18 I think it might.

31:19 Yeah.

31:20 And, yeah.

31:21 So it makes good use of Rich.

31:23 That's how I noticed it, but it is a very cool project in its own right.

31:28 It also uses questionary.

31:30 So that's a terminal thing for selecting stuff from a menu.

31:36 So it does a bit dynamically and also has a command line

31:41 to do it more from the terminal.

31:45 Yeah.

31:46 I think it's well worth checking out.

31:48 I think I want to check it out just as an example of using this sort of a workflow,

31:53 not necessarily with PyPI, but just sort of copying the code.

31:57 Yeah.

31:58 Yeah.

31:58 It's a really nice looking terminal user interface type thing.

32:01 I think it could be really interesting for you and me, Brian, to just do, like, info on the

32:06 various things we're talking about.

32:07 Right.

32:07 That might be fun to pull up as well.

32:09 Yeah.

32:10 And there are actually tons of times where I don't really want to pull up

32:13 a web browser, but I do want more information.

32:17 I love the web, but sometimes you have to do a context switch: if you're in the terminal,

32:21 you're writing commands, and then you've got to switch windows and find the

32:26 title bar and type everything in.

32:28 It's just a little bit of effort, but it can kind of interrupt your flow when

32:34 you are working.

32:35 Yeah.

32:35 I mean, especially when I've got a big monitor and I've got

32:38 everything in place exactly where I want it.

32:41 And there's no web browser.

32:42 So if I want to look something up, I've got to, you know, interrupt that.

32:45 Yeah.

32:46 Or the browser is there, but it's behind a dozen other windows, a dozen other web browsers,

32:52 typically.

32:52 Exactly.

32:54 Yeah.

32:55 That's a good find.

32:55 And well done to this guy who wrote it at such a young age.

33:00 Very cool.

33:00 I was just going to ask you if you have any extras.

33:03 So, yep.

33:04 Do I have any extras?

33:05 Ta-da!

33:06 Here's my little banner extras.

33:08 I do have some actually, Brian, a quick shout out.

33:10 Madison sent over a notice to let us know that the PyCascades 2022 call for

33:17 proposals is out.

33:19 So if people want to sign up for that, it closes October 24th.

33:24 So, you know, make haste. It closes in four days.

33:29 Yeah.

33:30 So if you're thinking of preparing something, you've got three days.

33:32 Talks are 25 minutes long.

33:34 It was a lot of fun.

33:34 You know, we both attended this conference a few times. In the before times, it was in Portland,

33:40 Seattle, and Vancouver.

33:41 I'm not sure what the story is with this one,

33:44 if it's going to be in person.

33:45 I think it's remote, right?

33:47 Yeah.

33:47 I think so.

33:48 At least I hope I'm not wrong.

33:49 Yeah.

33:50 I think you're right.

33:51 Then, have you got your MacBook, your M1 Max?

33:54 Have you ordered that yet?

33:55 I want one, but no.

33:57 The $3,000.

34:00 I would love one, but I have no idea what I'd do with it.

34:02 You know, I just work in the terminal most of the time.

34:04 Hey, you know, it has that new Pro, was it ProMotion?

34:09 Something display, where it has a 120 hertz adaptive display.

34:14 So, you know, maybe.

34:15 I think my monitor only does 60.

34:18 So I don't know if I could use it, but I have actually got Textual running at 120 frames

34:23 per second,

34:24 which is pretty crazy.

34:26 Yeah.

34:26 That's pretty crazy.

34:27 I did end up ordering one, and on my Apple account, I have this really cool message.

34:32 It says your order will be available soon.

34:33 MacBook Pro, available to ship: null.

34:37 So, we'll see where that goes.

34:38 See where that goes.

34:41 But think how many people's orders I would be getting.

34:47 I would get just like a stack of boxes outside.

34:49 Did that Amazon or something?

34:50 Yeah.

34:50 And then I also want to give a quick shout out to this thing.

34:54 CodeWeavers CrossOver, which allows you to run Windows apps natively on macOS

35:01 without a virtual machine.

35:02 It's like an intermediate layer.

35:04 So I think that kind of stuff is going to get really popular, especially since the new

35:08 M1s have a super crappy story for Windows as a virtual machine.

35:12 'Cause Windows has a crappy ARM story, and you can only do ARM VMs over there.

35:17 So I think things like this are going to become really popular.

35:20 There's a bunch of cool stuff.

35:21 People may want to check out this CrossOver stuff. I haven't really done much

35:24 in it, but it looks super promising.

35:25 I've been on the verge of, like, I almost need this, but I just run a VM.

35:29 That's that.

35:29 Anyway, those are my extras.

35:30 Okay.

35:31 Well, I've got a couple.

35:34 We've brought up Starship.

35:36 I just broke down and I'm using Starship.

35:39 Now it's working nicely.

35:41 And when I brew installed Starship, it also installed

35:46 pyenv.

35:47 I'm not sure why.

35:48 So I started using pyenv, and it works great.

35:53 I like it on my Mac, but I still don't think it belongs in Python tutorials.

35:58 Anyway, the jury's still out for me on whether or not it's any better than just downloading

36:03 off of python.org.

36:03 You're going to get tweets, Brian.

36:05 You're going to get tweets.

36:05 But I agree with you.

36:08 I support you on this.

36:09 And so, one of the things that was announced today is vscode.dev.

36:15 So I thought it was already there, but apparently this is new.

36:18 If you go to vscode.dev, it is just VS Code in the browser.

36:24 Oh, interesting.

36:25 I think it was already there.

36:27 Where does it execute?

36:27 And where, where, where's your file system and stuff like that?

36:30 Well, I think it's the same as GitHub Codespaces.

36:34 You press dot.

36:35 Yeah.

36:35 Okay.

36:36 Got it.

36:36 so.

36:37 It can use the local file system though, which I think is a difference.

36:40 GitHub had this thing where you hit dot and it brought up a VS Code, which worked with the files in your repo.

36:47 But I think with this, it can actually use your local file system.

36:52 Wow.

36:52 Yeah.

36:53 Which makes it more interesting.

36:55 I mean, it's great if you work on another computer and you just pop it open,

36:57 you've got all your settings there.

36:58 Yeah, exactly.

36:59 And boom, you're ready to go.

37:00 Yeah.

37:00 Oh, that actually is quite a bit different then.

37:03 That's pretty cool.

37:03 Yeah.

37:04 Two use cases where I think I would use this, that seem really nice.

37:08 One is I'm working like, say on my daughter's computer.

37:12 She's like, dad, help me with this file.

37:14 You know, help me with something, and I've got to open some file that has some form of structure.

37:19 And I, you know, she doesn't have VS Code set up on her computer.

37:22 She's in middle school.

37:23 She doesn't care.

37:23 But I could just fire this up and, you know, look at some file in a non-terrible way.

37:29 Right.

37:29 That would be great.

37:30 The other is on my iPad.

37:31 Oh yeah.

37:32 Right.

37:32 There's not a super good story for that.

37:35 But this kind of like VS Code in the browser, other things in the browser, they seem really nice.

37:40 Or if I was on a Chromebook or something like that, right?

37:42 If I was trying to help somebody with code on a Chromebook, that'd be good.

37:45 How about you, Will?

37:45 Do you have any extras for us?

37:46 Here we go.

37:48 Python multithreading without the GIL.

37:50 GIL stands for global interpreter lock.

37:54 And it's something which prevents Python threads from truly running in parallel.

37:59 People have been talking about this for years, and I've got a bit, kind of, you know,

38:03 dismissive, because every time it comes up, it never seems to happen, because there are

38:08 quite a lot of trade-offs generally.

38:09 If you get rid of the GIL, you hurt single-threaded performance, and most things

38:15 are single-threaded.

38:16 But this looks like the author, Sam Gross, has come up with a way of removing the GIL without hurting single-threaded performance.

38:28 I think it's to do with reference counting.

38:31 They've got two reference counts, one for the thread which owns the object

38:37 and one for all the other threads.

38:39 And apparently it works quite well.

38:42 And the great thing about this is...

38:43 Yeah, that's super creative, to basically think, well, let's treat the ref count

38:48 as thread-local storage.

38:49 And probably when that hits zero, you're like, okay, well, let's go look at the other threads

38:52 and see if they're also zero.

38:54 Right?
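The two-counter idea can be illustrated with a toy model of biased reference counting. This is a simplified Python illustration only, not the code in Sam Gross's nogil work: the owning thread bumps its own counter without synchronization, other threads go through a lock that stands in for an atomic operation, and, as a simplification, the object counts as dead when the two counters sum to zero. The class name `BiasedRefCount` is hypothetical.

```python
import threading


class BiasedRefCount:
    """Toy model of biased reference counting (not CPython/nogil code).

    The owning thread updates `local` without taking a lock; every other
    thread updates `shared` under a lock, standing in for an atomic op.
    """

    def __init__(self) -> None:
        self.owner = threading.get_ident()  # thread that created the object
        self.local = 1                       # creator holds the first reference
        self.shared = 0                      # may go negative: other threads can
                                             # drop references the owner created
        self._lock = threading.Lock()

    def incref(self) -> None:
        if threading.get_ident() == self.owner:
            self.local += 1                  # fast path: no synchronization
        else:
            with self._lock:                 # slow path: "atomic" update
                self.shared += 1

    def decref(self) -> bool:
        """Drop one reference; return True once no references remain."""
        if threading.get_ident() == self.owner:
            self.local -= 1
        else:
            with self._lock:
                self.shared -= 1
        # Simplification: only the combined count decides liveness here.
        with self._lock:
            return self.local + self.shared == 0
```

The design point the model tries to show is that the common case (an object touched only by the thread that created it) never pays for synchronization; only cross-thread sharing takes the slower path.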

38:55 Yeah.

38:56 Yeah.

38:57 And if this goes ahead, and it's got quite a lot of support, I think, in the core

39:00 dev community. I don't keep a really strong eye on that, but

39:04 from what I hear, it's got a lot of support.

39:07 And if that lands, then we can get fantastic performance out of multi-threaded code.

39:13 You know, if you've got 20 threads, you get almost 20 times the performance.

39:18 So that could be huge.

39:20 I've no doubt there'll be a lot of technical hurdles from C libraries and things.

39:27 But I'm really excited about that.

39:29 I think, you know, single-threaded performance improvements come in fits

39:33 and starts, you know: we get 5% here, 10% there, and it's all very welcome.

39:37 But if this lands, then we can get like 20 times for certain types of computing tasks.

39:42 So I'm really excited.

39:45 I hope this one lands.

39:47 I mean, you're talking about this.

39:48 Oh, here, let's get this multi-threading stuff.

39:51 You know, you were just saying, what are we going to do with these new M1 Pros and M1 Maxes?

39:55 I mean, 10-core machines, 32-core GPUs.

39:59 There's a lot of stuff that's significantly difficult to take advantage of with Python, unless

40:04 something like this comes into existence, right?

40:07 Exactly.

40:07 If you have 10 cores, chances are you'd just use one of them.

40:11 I'm wondering, if this goes in, whether it'll change things; we'll need some other ways of

40:16 taking advantage of that.

40:17 Because I think at the moment, for most tasks, you'd have to explicitly create

40:21 and launch threads.
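Explicitly creating and launching threads today typically looks something like the sketch below, which uses the standard library's `concurrent.futures.ThreadPoolExecutor`; `count_primes` and `parallel_count` are hypothetical helpers for illustration. On a standard CPython build with the GIL, these threads take turns executing Python bytecode, so CPU-bound work like this does not get faster with more threads. Removing the GIL is what would, in principle, let the same code spread across cores.

```python
from concurrent.futures import ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """CPU-bound work: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def parallel_count(limits: list[int], workers: int = 4) -> list[int]:
    """Explicitly launch a pool of threads and map the work across them.

    Under the GIL the threads interleave rather than run truly in
    parallel, so this shows the API shape, not a speedup.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order in its results
        return list(pool.map(count_primes, limits))
```

For example, `parallel_count([10, 100])` returns `[4, 25]`. Swapping in `ProcessPoolExecutor` gives real parallelism today, at the cost of the inter-process data exchange mentioned later in the discussion.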

40:23 I wonder if there'll be advances where Python could just launch threads for things

40:30 which could be easily parallelized.

40:32 Maybe I'm hoping for too much, but I've no doubt there'll be some kind

40:36 of software solution to help you just launch threads and use all those cores

40:41 in your shiny new Mac.

40:43 There's a lot of interesting stuff that you can do with async and await.

40:47 And there's also some cool thread scheduler type things.

40:50 But I think, you know, much like Python 3, when type annotations came along, there

40:55 was a whole bunch of stuff that blossomed that took advantage of it, like Pydantic and

41:00 FastAPI and stuff.

41:01 I feel like that blossoming hasn't happened here because you're really limited by the GIL

41:06 at the CPython level.

41:06 Then you go multiprocessing and you have data exchange and compatibility issues.

41:11 But if this were to go through, all of a sudden people would be like, all right, now how do we

41:15 create these libraries that we've wanted all along?

41:18 Yeah.

41:18 Yeah.

41:19 I think that's it.

41:20 I think once you've got over that technical hurdle, all the library authors

41:25 will be looking for creative ways of using this, for speeding code up and

41:33 for just doing more with your Python.

41:36 Yeah.

41:36 I mean, with every programming language, the jump from single-threaded to multi-process

41:41 is a huge overhead.

41:43 So you don't do it lightly, but you could do it lightly with multiple threads.

41:48 You don't have such a huge overhead burden.

41:51 It's very exciting.

41:52 I was also super excited about this.

41:53 So I'm glad you gave it a shout out.

41:55 We'll probably come back and spend some more time on it at some point.

41:57 Yeah.

41:57 And, where is it?

41:59 Somebody said, one of the exciting things about it is we didn't say no immediately.

42:05 That's a very good sign.

42:06 Yeah.

42:08 Which has not been the case with some of these other ones, because they were willing to sacrifice

42:11 single-threaded performance to get better multi-core performance.

42:15 Like, you know, this is not a common enough use case that we're willing to do that.

42:18 I think, actually, the solution the author came up with did reduce

42:23 single-threaded performance, but he also added some unrelated optimizations, which sped

42:30 it back up again.

42:31 Exactly.

42:32 I'm sorry.

42:33 I fixed it.

42:34 Yeah.

42:34 Yeah.

42:34 Yeah.

42:35 Interesting.

42:36 One more thought on this really quick.

42:38 David pushing out in the live stream says the Gilectomy is like nuclear fusion.

42:42 It's always 10 years away.

42:44 Yeah.

42:44 Hopefully it's not 10.

42:46 It's possible, but I think this is the biggest possibility since then to do interesting things,

42:52 maybe already taking into account that, you know, they looked at it and didn't say no immediately,

42:55 too. This is a project Sam's working on, but it's supported by Facebook,

43:02 where he works.

43:02 So there's a lot of time and energy.

43:05 It's not just a side project.

43:06 Third, Larry Hastings, the guy who was doing the Gilectomy, commented on this

43:13 thread saying, you've made way more progress than I did.

43:15 Well done, Sam.

43:16 So these are all good signs.

43:17 That's fantastic.

43:18 Yeah.

43:18 Yeah.

43:19 All right.

43:20 Well, Brian, are we ready for our joke?

43:22 Yeah.

43:23 Laugh.

43:23 Definitely.

43:24 See, this is optimistic because they're not always that funny, but I'm going to give it

43:28 a try.

43:29 This one is for the web developers out there for those folks that work on APIs and probably

43:34 have been working for a long time on them.

43:36 So, the first one I got for us, and I just found another one I'm going to throw in,

43:39 inspired by the live stream, but this one is entitled "The torture never stops."

43:44 All right.

43:44 Okay.

43:45 So there are four different pictures in this cartoon.

43:49 In each one, there's a different developer up at the board describing some new way to talk

43:56 to web servers from your app.

43:57 So way back in 2000, it says SOAP, Simple Object Access Protocol.

44:02 SOAP makes programming easier.

44:04 And the developer in the audience is like, WTF is SOAP?

44:08 Oh, come on.

44:09 What is this?

44:09 Crazy namespaces in XML.

44:11 Skip ahead 10 years.

44:13 Now there's a developer up here saying REST, Representational State Transfer.

44:16 REST is better than SOAP.

44:18 The developer now: WTF was wrong with SOAP?

44:21 2015, GraphQL. GraphQL is more versatile than REST.

44:27 WTF.

44:28 I was just getting the hang of REST.

44:29 2018, gRPC.

44:31 gRPC is faster than GraphQL.

44:34 WTF.

44:34 I thought you knew by now that the torture never stops,

44:38 says the guy next to the developer who's been complaining for 20 years.

44:42 I think that that hits a bit too close to home.

44:45 But if you're a JavaScript developer, that gets compressed into like the last six months,

44:49 I think.

44:50 That's right.

44:51 You've lived it really hard, really intensely.

44:53 Nick says, let's just start over with SOAP.

44:56 Yeah, pretty good.

44:57 Pretty good.

44:57 All right.

44:57 And then we were talking about vscode.dev and how you just press dot in your browser

45:04 on GitHub, or how you go to that URL and so on.

45:08 How cool it was.

45:08 And somebody said, oh, it doesn't work in Safari.

45:10 So I want to come back to this joke that used to be applied to IE.

45:14 But now I think it should be applied to Safari.

45:19 Like genuinely, I think it should be.

45:22 It's the browser wars as a cartoon.

45:26 So there's Chrome and Firefox.

45:28 It's a little dated because Firefox is not as popular as it used to be, sadly.

45:30 But it's like Chrome and Firefox are fiercely fighting.

45:33 And like IE is in the corner eating glue.

45:37 I just feel like that needs a little Safari icon and we'd be good.

45:40 Yeah.

45:41 We'd be all up to date in 2021.

45:42 How do you know it's IE?

45:44 It has a little E on it in a window symbol.

45:47 Oh, okay.

45:48 Well, the E, of course, is backwards because the shirt's probably on backwards or something.

45:52 Also, it's eating glue.

45:53 Yeah, true.

45:58 Funny.

45:59 So thanks, Will, for joining us today.

46:01 This was a really fun show.

46:03 Thanks, everybody in the chat for all the great comments.

46:05 Thanks, Brian.

46:06 Thanks, Will.

46:07 See you all later.

46:07 Thanks.

46:08 Thanks, guys.

46:08 Bye-bye.

46:09 Thanks for listening to Python Bytes.

46:12 Follow the show on Twitter via @pythonbytes.

46:15 That's Python Bytes as in B-Y-T-E-S.

46:18 Get the full show notes over at pythonbytes.fm.

46:21 If you have a news item we should cover, just visit pythonbytes.fm and click submit in the

46:26 nav bar.

46:27 We're always on the lookout for sharing something cool.

46:29 If you want to join us for the live recording, just visit the website and click live stream to

46:34 get notified of when our next episode goes live.

46:37 That's usually happening at noon Pacific on Wednesdays over at YouTube.

46:41 On behalf of myself and Brian Okken, this is Michael Kennedy.

46:45 Thank you for listening and sharing this podcast with your friends and colleagues.