#282: Don't Embarrass Me in Front of The Wizards

About the show

Sponsored by us! Support our work through:

Brian #1: pyscript

- Python in the browser, from Anaconda. repo here

- Announced at PyConUS

- “During a keynote speech at PyCon US 2022, Anaconda’s CEO Peter Wang unveiled quite a surprising project — PyScript. It is a JavaScript framework that allows users to create Python applications in the browser using a mix of Python and standard HTML. The project’s ultimate goal is to allow a much wider audience (for example, front-end developers) to benefit from the power of Python and its various libraries (statistical, ML/DL, etc.).” from a nice article on it, PyScript — unleash the power of Python in your browser

- PyScript is built on Pyodide, which is a port of CPython based on WebAssembly.

- Demos are cool.

- Note included in README: “This is an extremely experimental project, so expect things to break!”

Michael #2: Memray from Bloomberg

- Memray is a memory profiler for Python.

- It can track memory allocations in

- Python code

- native extension modules

- the Python interpreter itself

- Works both via CLI and focused app calls

- Memray can help with the following problems:

- Analyze allocations in applications to help discover the cause of high memory usage.

- Find memory leaks.

- Find hotspots in code which cause a lot of allocations.

- Notable features:

- 🕵️♀️ Traces every function call so it can accurately represent the call stack, unlike sampling profilers.

- ℭ Also handles native calls in C/C++ libraries so the entire call stack is present in the results.

- 🏎 Blazing fast! Profiling causes minimal slowdown in the application. Tracking native code is somewhat slower, but this can be enabled or disabled on demand.

- 📈 It can generate various reports about the collected memory usage data, like flame graphs.

- 🧵 Works with Python threads.

- 👽🧵 Works with native-threads (e.g. C++ threads in native extensions)

- Has a live view in the terminal.

- Linux only

Brian #3: pytest-parallel

- I’ve often sped up tests that can be run in parallel by using -n from pytest-xdist.

- I was recommending this to someone on Twitter, and Bruno Oliveira suggested a couple of alternatives. One was pytest-parallel, so I gave it a try.

- pytest-xdist runs using multiprocessing

- pytest-parallel uses both multiprocessing and multithreading.

- This is especially useful for test suites containing threadsafe tests. That is, mostly, pure software tests.

- Lots of unit tests are like this. System tests are often not.

- Use the --workers flag for multiple processors; --workers auto works great.

- Use --tests-per-worker for multi-threading; --tests-per-worker auto lets it pick.

- Very cool alternative to xdist.

Michael #4: Pooch: A friend for data files

- via Matthew Feickert

- Just want to download a file without messing with requests and urllib?

- Who is it for? Scientists/researchers/developers looking to simply download a file.

- Pooch makes it easy to download a file (one function call). On top of that, it also comes with some bonus features:

- Download and cache your data files locally (so it’s only downloaded once).

- Make sure everyone running the code has the same version of the data files by verifying cryptographic hashes.

- Multiple download protocols HTTP/FTP/SFTP and basic authentication.

- Download from Digital Object Identifiers (DOIs) issued by repositories like figshare and Zenodo.

- Built-in utilities to unzip/decompress files upon download

file_path = pooch.retrieve(url, known_hash=None)
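The caching and hash-verification behaviour described above can be sketched with only the standard library. This is not Pooch's implementation or API: `fetch_once`, its parameters, and the choice of SHA-256 are assumptions made for illustration, and the demo reads from a local `file://` URL so it runs without network access.

```python
import hashlib
import tempfile
import urllib.request
from pathlib import Path


def fetch_once(url, sha256, cache_dir):
    """Download url into cache_dir unless a copy is already cached, then verify it.

    Hypothetical helper for illustration only; not part of Pooch.
    """
    cache_dir = Path(cache_dir)
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Name the cached file after its expected hash so each version is distinct.
    target = cache_dir / sha256

    if not target.exists():
        # Only hit the URL when there is no cached copy yet.
        with urllib.request.urlopen(url) as response:
            target.write_bytes(response.read())

    # Verify the file on disk so a changed or corrupted file is caught.
    digest = hashlib.sha256(target.read_bytes()).hexdigest()
    if digest != sha256:
        target.unlink()
        raise ValueError(f"hash mismatch for {url}: got {digest}")
    return target


# Demo against a local file so no network is needed.
with tempfile.TemporaryDirectory() as tmp:
    source = Path(tmp) / "data.csv"
    source.write_bytes(b"a,b\n1,2\n")
    expected = hashlib.sha256(b"a,b\n1,2\n").hexdigest()
    path = fetch_once(source.as_uri(), expected, Path(tmp) / "cache")
    content = path.read_bytes()
```

Calling `fetch_once` a second time with the same arguments returns the cached path without reading the URL again, which is the property that matters when the data file is large.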

Extras

Michael:

Joke:

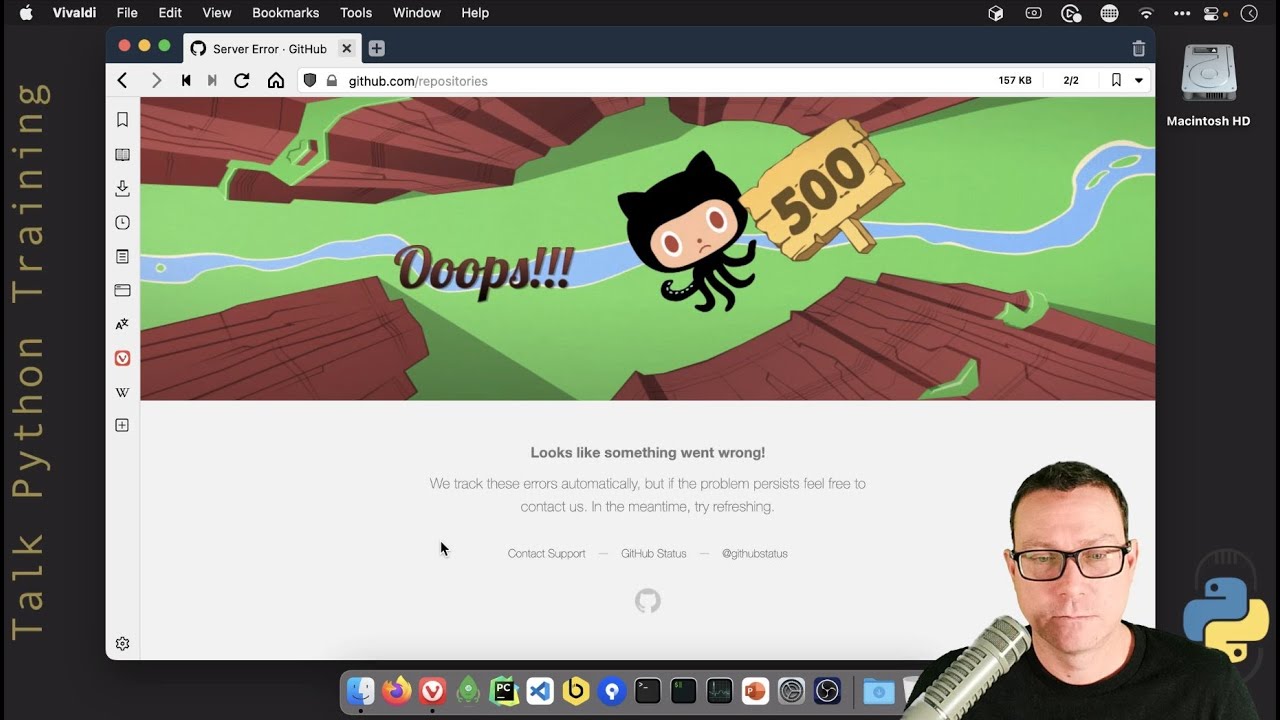

- Don’t embarrass me in front of the wizards

- Michael’s crashing github is embarrassing him in front of the wizards!

Episode Transcript


00:00 Hello and welcome to Python Bytes, where we deliver Python news and headlines directly to your earbuds.

00:05 This is episode 282, recorded May 3rd, 2022.

00:11 I'm Michael Kennedy.

00:12 And I am Brian Okken.

00:13 It's great to have you here, Brian. It's just us, just the two of us.

00:16 Yeah, just like old times.

00:17 I know, but we have our friends out in the audience, so we're not entirely alone.

00:22 It's great.

00:22 So let's kick it off.

00:25 I know you have a particularly exciting announcement.

00:29 It's a very important topic to cover here.

00:31 So let's go do it.

00:33 Okay, so PyScript.

00:35 So this was an announcement at PyCon US by Anaconda's CEO, Peter Wang, during a keynote.

00:43 I wasn't there, but everybody was tweeting about it.

00:46 So it almost felt like I was there.

00:49 But I haven't seen the presentation, so I can't wait till that goes online.

00:55 I know.

00:57 Are the videos, I have not seen the videos for the presentations at PyCon out yet.

01:01 Are they out yet?

01:02 And I just missed it?

01:03 I haven't looked.

01:04 Is my YouTube broken?

01:05 It should be full of this stuff.

01:07 Like, what's up with, is it supposed to be next day or something?

01:11 I don't know.

01:11 I know, I know.

01:12 Anyway.

01:13 I would have loved to live stream it, but I didn't see an option.

01:15 So anyway, I'm looking forward to watching this one in particular when it comes out, because

01:19 this is big news.

01:20 So PyScript is Python in the browser.

01:22 So what does that mean?

01:24 It is built on top of Pyodide, which is a port of CPython based on WebAssembly.

01:29 I'm pretty sure we've covered Pyodide before.

01:32 So this is a pretty neat thing.

01:34 And one of the things that this, so the PyScript.net, you go to it, it's got a little, it's kind

01:40 of actually, it's like hype and it sounds neat.

01:43 And you can do Python in the browser.

01:45 Neat with the PyScript tags.

01:46 But what does that mean?

01:48 So there's a, if you go down to the bottom, there's a GitHub repo that you can go look

01:53 at.

01:53 This is what I suggest.

01:55 And this will talk about, there's a getting started guide.

01:58 But what I did is just followed this.

02:02 I cloned the repo and then I went in and did the, into the JavaScript area and then did NPM

02:08 install and then did this dev run, run dev thing.

02:12 So this only took me like five minutes to get this far.

02:16 And, and what you have is you've got one of the things that it has is it has an examples

02:21 folder and you can just open this up now in your local browser, on localhost.

02:26 And there's all these cool demos.

02:28 Like there's a, a REPL where you can just do, it's kind of like a Jupyter where you can

02:33 say like X equals three.

02:35 Let's do this.

02:36 And then X.

02:37 And then if I do shift enter, it evaluates it.

02:40 How neat is that?

02:41 That's pretty neat.

02:41 That's awesome.

02:42 Yeah.

02:43 There's a to-do app here.

02:45 So make sure you listen to our podcasts, go buy Python Testing with pytest.

02:49 We'll check that.

02:50 Cause we know you already bought that.

02:51 So, and then there's an example with D3 graphics.

02:56 This is neat.

02:57 I don't think I've ever done this.

02:59 There's an Altair example.

03:00 And this is pretty fun.

03:02 Cause you click around and it changes the above.

03:04 It's like an interactive thing.

03:06 This is fun.

03:07 I, we, we use Altair with a project at work.

03:10 So this is neat.

03:11 The Mandelbrot set.

03:13 So there's some code.

03:14 So all of this code is in the repo.

03:15 So you can look at the examples and look exactly how the code is done.

03:19 There's a HTML file and a Python file for all of these.

03:22 So you can check it out.

03:24 Actually, I don't know about the Python thing.

03:25 It's a, it's, it's HTML and Python within the HTML code embedded.

03:30 So there isn't a separate file, but you have, you can do imports and all this sort of stuff

03:34 too.

03:34 Oh, I went too far, but I wanted to bring up, there's also an article that we're going

03:39 to link to in the show notes that is called, PyScript, unleash the power of Python

03:45 in your browser.

03:45 This is by Eryk Lewinson, and it runs through it; it's a pretty interesting,

03:52 a little quick read of what it is.

03:54 If you're not familiar with, WebAssembly and pyodide.

03:57 So it's nice.

03:59 What do you think, Michael?

04:00 So excited.

04:01 I am very excited.

04:02 You know, there's been progress on the WebAssembly plus Python side on several occurrences that

04:10 were, they give you a sense of what's possible, but they didn't give you a thing to build with.

04:15 You know what I mean?

04:16 Yeah.

04:16 So for example, pyodide is awesome, but it's kind of like, well, if I want to sort of host

04:21 a Jupyter kernel in my browser, like I can, I can kind of do that.

04:25 Right.

04:26 the WebAssembly Python itself is great, but it doesn't specify a way to have a UI

04:32 of your webpage interact with Python.

04:34 It's just, oh, you could execute Python over here.

04:36 Well, like, and then what, you know what I mean?

04:38 Which is, which is still good, but there's not something where like, I can have a button

04:43 on there that like wires up to this thing in Python.

04:46 And I can have this list that binds in that way and so on.

04:49 And this looks like we might be there.

04:53 Like one of the things they talk about on the page is not just running Python in the browser

04:58 and the Python ecosystem, as you pointed out, but really importantly, two more things, Python

05:03 with JavaScript, bi-directional communication between Python and JavaScript objects.

05:07 Yeah.

05:08 So you can wire into like events on the page and other, DOM type of things.

05:13 Yes.

05:13 And then a visual application development ties in with that with, use readily available

05:19 curated UI components, such as buttons, containers, text boxes, and more.

05:22 Oh yeah.

05:23 Yeah.

05:24 Yeah.

05:25 I mean, like these are just a little quick examples, but I'd love to see some, some,

05:28 bigger examples of things like that.

05:30 Like, being able to connect, it, you know, yeah.

05:34 JavaScript interaction with, stuff on, on the Python side.

05:37 That'll be neat.

05:38 Yeah.

05:39 It's weird to see Python written just straight in the browser, you know?

05:43 Yeah.

05:43 Like here you have like angle bracket, py-script, and then just import antigravity,

05:48 antigravity.fly.

05:49 Like, wait, what?

05:51 Well, so this, this is a good example.

05:53 I picked this example for one is because it does, it does do an import.

05:57 So this, it, there's like a path thing you see, you set up.

06:00 So you can put code, you can put code, all your code doesn't have to be in HTML.

06:04 It can be in, in a Python file.

06:07 So you can debug it there, which that's where you want to debug it.

06:10 And then you can import it and call it within Python.

06:12 And so this is probably more where I would use it is, putting most of my code somewhere

06:17 else.

06:17 And then.

06:18 Yeah.

06:19 That's what I want to see.

06:20 I would want to see just Python files and just, effectively a script tag for it.

06:25 I mean, you probably, maybe you can't do it directly as a script tag, but you could do,

06:29 you know, bracket, py-script, and then just import and run.

06:32 Right.

06:32 Yeah.

06:33 So the point, basically.

06:34 I haven't looked at this before.

06:35 So the antigravity.py that it's bringing in is bringing in some Pyodide stuff

06:42 to be able to work it.

06:43 So I'm seeing some, this is Python code, from document, or sorry, from js import

06:50 document.

06:51 Yeah.

06:52 And setInterval.

06:52 And so those are the things you do there.

06:55 let's see.

06:56 Are there any, any callbacks?

06:58 I don't see any callbacks there.

07:00 Oh yeah.

07:00 Yeah.

07:00 This setInterval has a callback, self.move, for when the interval, the JavaScript interval

07:04 fires.

07:05 So under, under fly, that is, hooking into a timer there.

07:09 Yeah.

07:10 Timer callback.

07:10 So we should check that out.

07:11 So where's, where's that?

07:12 so the, I should have done this ahead of time.

07:17 The anti-gravity is not linked to, but I'll just like bring it up.

07:20 Anti-gravity based on.

07:23 Wow.

07:25 Oh my gosh.

07:26 This is so amazing.

07:27 People have to do this.

07:28 Oh, this is cool.

07:30 We all know import anti-gravity and we've got to know the XKCD that comes up, but yes,

07:35 this is so good.

07:37 It's great.

07:37 It's alive.

07:38 It's not just, is the person who, who says, how are you flying?

07:41 The person says, I'm playing with Python.

07:42 Like that thing is alive and cruising around.

07:44 I love it.

07:45 Yeah.

07:45 And that's based on the callback, right?

07:47 That's, that's calling Python based on the setInterval timer callback in JavaScript.

07:51 Yep.

07:51 Yeah.

07:52 And, and to me, that has been the missing piece.

07:55 Like how do I wire up?

07:56 It's like great if I can just execute Python and have, you know, like a number of things.

08:00 But what I want is Vue in Python, or React.

08:03 I want to build the UI in Python and just not deal with JavaScript and be able to do so

08:08 many more things on the front end.

08:11 I mean, this opens up stuff like, progressive web apps, which could be really amazing for the Python space.

08:19 Right?

08:19 Like I'm here in Vivaldi.

08:21 If I go to my email client, just in the browser, I can right click and install.

08:25 It gets its own app that works offline.

08:28 It like pull this data down into local DB or whatever.

08:30 Theoretically you could do this, right?

08:32 You could pull down the CPython WASM.

08:35 You can pull down the PyScript file and then just somehow use JavaScript to Python to talk to local

08:43 DBs.

08:43 I mean, what if we get like ORMs in Python going, oh yeah, we have one of our backends is the web

08:49 browser, local DB.

08:50 Yeah.

08:51 Or something that would mean, this is great.

08:53 I would love, I'm very excited for where this might go.

08:56 Sky's the limit, right?

08:57 That's what that little flying character is saying at least.

09:01 Yeah.

09:01 Okay.

09:03 So, well, good job Anaconda folks.

09:05 And I believe this was Fabio and crew.

09:08 So really, really nice.

09:09 That was super psyched.

09:10 How am I going to follow that one up, right?

09:11 I mean, come on.

09:12 It's just, I'll, I'll give it a try.

09:15 No, I've got some good items.

09:17 They're just not flying around.

09:19 Amazing Python in the browser.

09:21 Amazing.

09:21 So Bloomberg has a lot of Python going on and Bloomberg actually has a pretty cool,

09:28 like tech engineering blog where they talk about some of the stuff going on at Bloomberg,

09:32 right?

09:33 Yeah.

09:33 One of the really good articles I read from this, from them was about how to really set

09:39 up and run uWSGI in production.

09:41 And it was like this huge, long, deep list of like, here's a bunch of flags you probably

09:45 never thought about.

09:46 And here's why you should care about them in Python.

09:48 Really good stuff.

09:49 So they're back with another thing that they use that is cool called Memray, like memory,

09:56 but Memray.

09:57 It is a memory profiler for Python.

10:00 So if you want to understand the performance of your application, especially around memory,

10:06 here's a pretty neat tool.

10:09 Now, let me just get that right out of the way before I forget.

10:11 Linux only.

10:13 So if you're not using Linux, just close your ears.

10:15 No, just kidding.

10:16 Like you could all, if you're on Windows, you could just run your Python app under WSL and

10:21 then profile it and then go back to running on Windows.

10:23 Or if you're on Mac, just do a VM or something, right?

10:26 Anyway, it only runs on Linux.

10:28 But because Python is so similar across the platforms, I'm sure you could just test your

10:33 code there, even if that's not the main use case.

10:35 All right.

10:36 So you get all these different visualizations of memory usage.

10:39 It can track allocations for Python code in native extension modules, like NumPy or something

10:45 like that.

10:46 And even within CPython itself.

10:48 So you get sort of a holistic view of the memory, which is pretty awesome.

10:52 Yeah.

10:53 And it'll give you different memory reports.

10:55 We'll talk about them a little bit.

10:57 And you can use it as a CLI tool, just like, kind of like timeit or whatever.

11:01 You can just say memray run my app.

11:03 And then when your app exits, it's like, and here's what happened.

11:07 One of the things that's super challenging about complicated applications and web apps

11:12 and stuff is you want to focus on a particular scenario.

11:15 And there's so much overhead of like startup and other things.

11:19 So for example, if I just want to profile a FastAPI API call, if I just say run it up

11:26 and then I go hit that API, all of the infrastructure starting up Uvicorn and FastAPI and Python, it

11:33 just like, it just dwarfs whatever that little thing is usually.

11:36 So there's also a programmable API that says, you know, you could create like a context manager.

11:41 Like, I don't know if it actually is that way, but you could certainly build it if it doesn't exist.

11:44 Like with memory profile here and just do a little block of code and then get an answer,

11:49 which I think is pretty neat.
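A context manager along those lines can be sketched with the standard library's `tracemalloc`. This is a stand-in, not Memray: it only sees allocations made through Python's allocators, and `track_memory` is a hypothetical name chosen for this example.

```python
import tracemalloc
from contextlib import contextmanager


@contextmanager
def track_memory():
    """Measure Python-level allocations made inside the with-block.

    Hypothetical helper built on tracemalloc; not Memray's API.
    """
    stats = {}
    tracemalloc.start()
    try:
        yield stats
    finally:
        # current: bytes still allocated; peak: high-water mark since start().
        current, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        stats["current_bytes"] = current
        stats["peak_bytes"] = peak


# Profile just the block of interest instead of the whole program.
with track_memory() as stats:
    data = [bytes(1024) for _ in range(1000)]

print(stats)
```

Because tracing starts when the block is entered, interpreter startup and imports that ran earlier do not clutter the numbers.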

11:50 Alvaro asks if it accepts an entry point.

11:53 I suspect you could call an entry point because you just do the run on the command prompt.

12:00 So you could probably pass it over.

12:02 Whatever you run.

12:02 Yeah.

12:02 Yeah, exactly.

12:03 But the problem is there's still like the startup of just CPython itself, right?

12:08 Like I always find just the imports and all that is just way more overhead than, you know,

12:14 it clutters it up.

12:15 Anyway, let's hit some notable features of Memray.

12:18 It traces every function call as opposed to sampling it.

12:22 So instead of just going every millisecond, what are you doing now?

12:25 What are you doing now?

12:26 Let's just record that, right?

12:27 It actually exactly traces so you don't miss any functions being called, even if they're brief.
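The difference between tracing and sampling can be sketched with the standard library. This does not show how Memray works internally; `count_calls` is a hypothetical helper built on `sys.setprofile`, which is notified on every Python function call, so even very short calls are recorded.

```python
import sys
from collections import Counter


def count_calls(func, *args, **kwargs):
    """Run func and count every Python-level function call made while it runs."""
    calls = Counter()

    def profiler(frame, event, arg):
        # "call" fires on entry to every Python function, however brief.
        if event == "call":
            calls[frame.f_code.co_name] += 1

    previous = sys.getprofile()
    sys.setprofile(profiler)
    try:
        result = func(*args, **kwargs)
    finally:
        # Restore whatever profiler (if any) was active before.
        sys.setprofile(previous)
    return result, calls


def brief():
    return 1


def workload():
    total = 0
    for _ in range(1000):
        total += brief()
    return total


result, calls = count_calls(workload)
print(calls["brief"], calls["workload"])
```

A sampler that wakes up every millisecond could easily miss most of the calls to `brief`; the tracing approach counts each one.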

12:33 It handles native calls in C++ libraries.

12:36 So the entire stack is represented in the results, which is pretty cool.

12:40 That's pretty neat.

12:40 Yeah, that's pretty dope.

12:42 Apparently it's blazing fast.

12:44 There's some kind of character.

12:45 I think it's a race car there.

12:46 It causes minimal slowdown in the app if you're doing Python tracing.

12:51 If you do the native code stuff, it's a little bit slower, it says, but that's optional.

12:54 You get a bunch of reports.

12:55 We'll see those in a minute.

12:56 It works on Python threads.

12:58 So you can see, I know all these people watching, but you check out the webpage.

13:02 There's a little thread, like a sewing thread emoji.

13:05 Or a Twitter thread.

13:06 Yeah, dude.

13:09 So it also works on native threads, like C++ threads and native extensions,

13:12 which it represents as an alien plus the thread icon.

13:18 I love it.

13:18 Alien threads, yeah.

13:19 Yeah, yeah, yeah.

13:20 So let's look over here real quick.

13:22 We'll look at just, I guess, the reporting, right?

13:24 I mean, the running is super simple, as I said.

13:26 memray run, Python file, with arguments.

13:29 Or memray run -m, module, with arguments.

13:32 These are the places you could put your entry point and so on.

13:35 And Dean, in the audience, says we've had a rich spotting.

13:39 Okay?

13:39 I haven't pulled that up yet, but very nice.

13:42 So there's different ways in which you can view it.

13:44 And the first one that I ran across, which is pretty interesting, if you're familiar with glances or you want to go old school, like top,

13:50 or one of these things you can run in just the terminal and get, like, not really with rich, not rich, not rich with top,

13:58 but rich output like glances, is you can run it in a live mode where, while it's running,

14:05 it'll show you what's happening with the memory.

14:07 That is so awesome.

14:08 That's pretty cool.

14:09 Yeah.

14:10 Yeah.

14:10 Yeah.

14:11 So instead of just showing you a memory graph, it's like, guess what?

14:13 We're running here right now with this many allocations and so on.

14:17 Yeah.

14:17 Like that looks super neat.

14:19 Yeah.

14:19 And if you've got something interactive, you can interact with it and watch the memory change then.

14:24 Yeah.

14:25 Yeah.

14:26 You can cycle through threads.

14:27 You can sort by total memory or its own memory of the, that's a common thing you do in profiling like this and all of the stuff it's called,

14:36 or just this method itself, sort by allocations versus memory usages, all kinds of stuff.

14:41 So that's really neat.

14:42 It will track the allocations across forks, as in process, sub process.

14:49 Oh, okay.

14:49 Why would you care?

14:50 Because multi-processing.

14:51 If you want to track some kind of multi-processing memory workflow, it'll actually do that.

14:56 You just do --follow-fork, and it'll like aggregate the stats across the different processes.

15:01 Kind of insane.

15:02 Let's see if we can get down here.

15:05 You can do, they have the summary reporter, which is kind of a nice, just,

15:09 you know, this is probably what you would expect.

15:11 Flame graphs.

15:11 If I can get down here somewhere, it'll show like sort of the color and the width of these bars.

15:17 It'll show you how significant it is.

15:19 there's a nice tree version that'll show you the biggest 10 allocations.

15:23 And then a call stack sort of in and out with trees and like how much memory is being allocated in each one of those and so on.

15:30 That's nice.

15:30 Yeah.

15:30 This is a nice app, right?

15:32 Nice utility.

15:33 Definitely.

15:34 Cool.

15:34 Yeah.

15:35 Indeed.

15:36 Indeed.

15:36 Indeed.

15:36 So if you want to track down memory leaks or you're just wondering like,

15:40 why is my program using so much memory?

15:43 Fire it up.

15:44 Let it run for a while.

15:44 See what happens.

15:45 Yeah.

15:46 Cool.

15:46 All right.

15:47 Back to you, Brian.

15:49 Well, I want to bring up a pytest tool.

15:52 So, I've often used pytest-xdist for parallel.

16:01 So xdist is, it's the one that I heard about first for running pytest tests in parallel.

16:08 So you've got, you know, like tons and tons of unit tests, maybe, and you want to just speed them up.

16:13 You can throw a -n or something like that at it,

16:17 and it'll just throw them, and there was, I think it was Bruno Oliveira,

16:34 suggested a couple of alternatives.

16:37 And one of them was pytest-parallel, which, I know I've run across,

16:42 but I haven't played with it for a while.

16:43 So I tried it out and it's actually like really cool.

16:47 So pytest-xdist does a lot.

16:50 One of the things it does is it not just, it's not just multi-processor,

16:55 but it can be on different actual different computers.

16:58 So you can launch them on.

16:59 Oh, nice.

17:00 Like grid computing almost.

17:01 Yeah.

17:02 You can SSH into different systems and have it run in parallel.

17:05 but that, you know, you don't, I don't usually need that kind of power.

17:10 the one thing it doesn't do is thread.

17:12 So it's, it's process based and pytest-parallel does both.

17:16 So you can say, you can give it, you can give it, well, where we have, I'm going to go down to the examples.

17:24 So you can give it number of workers and it'll tell it to, that's how many,

17:29 processes it'll spin up or how many CPUs.

17:32 Now you can also give it tests-per-worker and then it'll run in multi-threading mode.

17:38 and you can give it auto on both of these.

17:41 And it's, this is extremely useful for, you have to, by default, this is turned off by default.

17:48 The, the features, if you just say workers equals five or something,

17:51 it won't do multi-threading.

17:54 And the reason is, you need to make sure your tests are thread safe,

17:59 and many are not.
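Why thread safety matters can be sketched with a tiny thread-based runner. This is a hypothetical illustration, not pytest-parallel's implementation: `run_threaded` and the `check_` functions are made-up names, and `tests_per_worker` only mirrors the name of the flag.

```python
from concurrent.futures import ThreadPoolExecutor


def run_threaded(tests, tests_per_worker):
    """Run test callables concurrently in one process; return {name: passed}."""

    def run_one(test):
        try:
            test()
            return test.__name__, True
        except AssertionError:
            return test.__name__, False

    # All tests share one process, so they also share module-level state.
    with ThreadPoolExecutor(max_workers=tests_per_worker) as pool:
        return dict(pool.map(run_one, tests))


def check_sorting():
    # Thread-safe: touches only local variables.
    values = [3, 1, 2]
    assert sorted(values) == [1, 2, 3]


def check_upper():
    # Thread-safe: no shared mutable state.
    assert "py".upper() == "PY"


results = run_threaded([check_sorting, check_upper], tests_per_worker=2)
print(results)
```

Tests like these two are safe to interleave. A test that mutates a module-level variable, a shared temp file, or an environment variable could see another test's changes halfway through, which is why the threaded mode is opt-in.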

18:01 So I tried it on a couple of my, even if they're isolated, they might not be thread safe,

18:05 right?

18:05 Yes.

18:06 that's, that's another level of consideration.

18:09 However, if there are, there's a lot of small, especially small, not really unit like system tests,

18:16 but a lot of unit tests are just testing a little Python code.

18:18 If you've got a part of that is a lot of projects, that's a big chunk of the test load.

18:23 So being able to do multi-threading is really nice, but you know, even with just multi-processing,

18:29 I tried this on a few different projects, like, I tried it on Flask and

18:35 the parallel version using pytest-parallel was like three times faster than the

18:43 xdist version.

18:44 So, so based on your, I, there's, there was another one that, Bruno mentioned,

18:50 but I think these two are really solid, xdist and parallel.

18:53 So if you want to speed up your test run times, I would try both on your project and just see,

18:58 play with them.

18:59 And see, see which one's faster on, many of the projects I tried parallel was at least as fast or faster than

19:06 xdist.

19:07 So it's kind of nice.

19:08 Yeah, it's cool.

19:10 This looks great.

19:10 I like it.

19:11 And having your test run faster is always good.

19:15 Do you do anything crazy?

19:16 Like, do you set up your editor to auto run tests on file change or anything like

19:20 that?

19:20 sometimes, one of the things that I've always, I've done it a few times,

19:25 but it always makes me nervous.

19:26 I'm like, ah, just like it's unnerving to me that it just keeps running.

19:29 One of the things that I really like around that, that was added to pytest not

19:34 too long ago, is stepwise.

19:37 So that's not really all the running it all the time, but, stepwise will,

19:42 and this would be a handy one to, to run all the time.

19:44 So what stepwise does is it takes, you can run all your tests in stepwise.

19:49 And when you run it again, it'll start at the first failing test because it assumes you're trying to fix

19:55 something.

19:55 It'll start at that and then run until it finds a failure.

19:59 So if you, if you haven't fixed this first failure, it'll just keep running that one until you fixed it and it'll go to the next one.

20:05 and, so I do that a lot while I'm trying to debug something.

20:09 and, and hooking that up with like an auto, like a watch feature.

20:14 There's a bunch of ways you can watch your code to, to do that.

20:17 yeah, it's fun.
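The stepwise behaviour described here can be sketched in a few lines. This is a simplified model of the idea, not pytest's implementation: `run_stepwise`, the `check_` functions, and the `state` dictionary are hypothetical names used only for this example.

```python
def run_stepwise(tests, last_failed=None):
    """Run tests in order, resuming at last_failed and stopping at the first failure.

    tests: list of (name, callable) pairs; a test fails if it raises AssertionError.
    Returns the name of the failing test, or None if everything passed.
    """
    names = [name for name, _ in tests]
    # Resume from the previously failing test, or from the top if there is none.
    start = names.index(last_failed) if last_failed in names else 0
    for name, test in tests[start:]:
        try:
            test()
        except AssertionError:
            return name
    return None


state = {"fixed": False}


def check_a():
    assert True


def check_b():
    # Fails until the "bug" is fixed.
    assert state["fixed"]


def check_c():
    assert True


tests = [("check_a", check_a), ("check_b", check_b), ("check_c", check_c)]

first = run_stepwise(tests)           # runs check_a, stops at check_b
second = run_stepwise(tests, first)   # starts at check_b, still failing
state["fixed"] = True
third = run_stepwise(tests, second)   # check_b passes, continues to check_c
print(first, second, third)
```

Each rerun skips the tests that already passed and goes straight to the one being fixed, which is what makes it pleasant to pair with a file watcher.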

20:19 Nice.

20:19 Very cool.

20:21 So let's do some real time follow up here.

20:23 First, Alvaro is being all mischievous asking, I wonder what would happen if I install both plugins,

20:29 both xdist and parallel.

20:31 I, you can, I don't know if I've, you can run them at the same time.

20:35 I should try.

20:36 I have it installed on like the flask one.

20:38 I ran it.

20:38 I installed both of them.

20:40 And then try it in both, but not at the same time.

20:42 I'll have to try the forks.

20:43 It's going to go so fast.

20:44 And then just going back to PyScript, there's like tons of excitement about PyScript.

20:49 Yeah.

20:49 Dale's excited.

20:50 Brandon's excited.

20:51 and David says, I hope someday I can say back in my day, you couldn't just learn Python.

20:57 You had to learn JavaScript too.

20:59 Yeah.

20:59 Indeed.

21:00 Indeed.

21:00 let's see.

21:02 So I got one more to cover that is going to be fun as well.

21:07 And this one comes to us from former guest co-host, Michael Feickert, sorry,

21:14 Matthew Feickert.

21:14 And Matthew is a great supporter of the show.

21:17 He sends all sorts of interesting things in to help us out and good ideas.

21:22 And this is yet another one coming from the data science side of things saying,

21:27 you know, one of the things you have to do often in say a Jupyter notebook is go download a file off

21:33 of an API or just some link or S3 bucket or whatever.

21:36 And you want to process it.

21:37 And if you use requests while great, you end up making the request, verifying that it worked,

21:44 reading the stream into bytes, writing the bytes to a file, picking a file name and then using that file name to open it.

21:50 And then say, now you can process it.

21:52 Right.

21:52 Yeah.

21:53 So there's this thing called pooch, a friend to fetch your data files.

21:57 All right, pooch, go get, go get my files.

21:59 Like a little, a little, a friendly dog that also seems to hold a snake in its mouth.

22:04 So that's pretty cool.

22:05 Anyway, who wouldn't want a dog that can wrangle snakes to go help you with your notebooks?

22:10 Anyway, the idea is you can do all of what I described with requests.

22:15 You can do that in one line of code.

22:16 Oh, wow.

22:17 Yeah.

22:17 And you get other cool features as well.

22:19 So, it says, look, you can just make this one function call and it'll save it.

22:24 And it'll also cache your files locally.

22:26 So some of these files that data scientists especially work with are massive,

22:30 right?

22:30 You know, it's like a gig.

22:31 And every time you run the notebook, you don't want it to download the gig again.

22:34 You just want it to run more quickly.

22:36 So you can set up a location for it to cache it.

22:38 You can pass in a hash of the file to say, I want to get this file and I expect it to be this MD5 or whatever the heck

22:47 the hash is that they're using so that you can be sure it doesn't change.

22:50 Right.

22:51 So if you're doing like reproducible data science, you say, what you do is you download this file,

22:56 then you apply this algorithm, then you get this picture.

22:58 Well, if the data changes, I bet the picture changes.

23:00 Right.

23:00 And so you can put it like a layer of verification that it's unchanged from the

23:05 last time you decided what it should be.

23:07 That's pretty cool.

23:08 You can do multiple protocols.

23:10 So not just HTTP, HTTPS, but FTP.

23:13 Oh my gosh.

23:14 SFTP.

23:15 Oh yeah.

23:16 It's what else.

23:17 Basic auth.

23:17 It'll also automatically resolve DOIs, digital object identifiers, which are used in places like figshare and Zenodo.

23:28 And this is about the reproducible science.

23:30 Like here's that, here's the file.

23:32 And like, we've been assigned an immutable ID that we can always refer back to it.

23:36 So you can just say, here's the ID and it'll actually get the file and it'll even unzip and

23:39 decompress files upon download.

23:41 Neat.

23:41 Pretty neat.

23:42 Huh?

23:42 Yeah.

23:43 Yeah.

23:43 Pretty straightforward.

23:44 Let's, let me see if I can find an example of.

23:46 I love, I like the section of learning about it.

23:50 It's called training your pooch.

23:51 That's cute.

23:52 Oh, nice.

23:55 I love it.

23:55 Apparently it has progress bars.

23:57 Always download actions, logging, and you get multiple files, but the main use case is just file equals pooch.retrieve URL done.

24:07 That seems pretty nice.

24:08 Yeah.

24:09 That's great.

24:09 It's my data.

24:10 Here it is.

24:11 Oh, cool.

24:12 So Pamphile Roy in the audience says, Hey folks, funny.

24:15 We're adding this to SciPy, optional, to have a SciPy dataset submodule.

24:20 scikit-image is using this as well.

24:22 I had no idea.

24:23 Very cool.

24:24 Thanks for the extra background there.

24:25 Cool.

24:25 Yeah.

24:26 But I think this is great.

24:27 In fact, I know it sells itself.

24:30 It bills itself as being for data science.

24:32 I also like to download files sometimes and not go through five or six lines of code.

24:37 I could use this.

24:37 Yeah.

24:38 Yeah.

24:38 There's, there's a lot of stuff that data science people are doing that we can use in lots of other

24:43 fields.

24:43 So.

24:44 Indeed.

24:44 I do think that's actually one of the really interesting aspects of Python is we have so

24:50 many people from these different areas that it's not just all, you know, CS grads doing

24:54 the same thing.

24:55 Yeah.

24:55 Yeah, for sure.

24:56 All right.

24:58 Well, those are my items for today, Brian.

25:00 Nice.

25:01 I don't have any extras today.

25:05 Do you have any extra information and stuff?

25:07 I do.

25:08 I do have extras.

25:10 So this one I'm very, very excited about.

25:13 I have a new course that I just released called Up and Running with Git: A Pragmatic, UI-based

25:19 Introduction.

25:20 So I'm really excited.

25:22 I just released.

25:23 I haven't really even announced it yet, but I finished getting it all public and online

25:27 and turned all the GitHub repos public and all that stuff right before we jumped on the

25:31 call today.

25:31 And the idea is there are tons of Git courses.

25:34 So why create a Git course?

25:35 Well, I feel like so many of them are just like, okay, we're just going to work in the

25:41 terminal or the command prompt.

25:42 And you're just going to assume that like, that's the world of Git that you live in, like

25:47 kind of a least common denominator approach.

25:48 And while that, that is useful.

25:50 Like, I don't think that's how most people are working, right?

25:52 If you're in Visual Studio Code or PyCharm, like there's great hotkeys just to do the

25:57 Git stuff and see the history and whatnot.

25:58 And there's other tools like SourceTree and Tower and others.

26:01 So it kind of takes this approach of like, well, let's take all the modern tools that give

26:06 you the best visibility and teach you Git with that.

26:08 So super fun.

26:10 Which GUI tools are you using then?

26:12 Which ones are you using?

26:13 Visual Studio Code.

26:14 Okay.

26:14 PyCharm.

26:15 SourceTree.

26:16 Okay.

26:16 Those are the things.

26:17 And so I've done a lot of work.

26:19 I've tried to take some of my experience from doing some work on YouTube where I was

26:23 experimenting with like setup and presentations and stuff.

26:27 And I think I have a really neat, polished experience for this course with like lots of

26:32 cool visuals and graphics and video and stuff.

26:35 So hopefully people really enjoy it.

26:37 Anyway, this is my extra.

26:38 I just sent this out to the world.

26:40 I'm pretty excited about this.

26:41 Nice.

26:42 Congrats.

26:42 Yeah.

26:43 Thanks.

26:43 Thanks so much.

26:44 You have no extras.

26:45 Does that mean you're ready for some humor?

26:47 Yes.

26:48 Always.

26:48 All right.

26:49 All right.

26:49 This one.

26:50 I chose this.

26:51 Honestly, I just chose it just because of the title.

26:53 So there's Robert.

26:57 Is this Robert Downey Jr. looking at somebody in like some kind of wizard situation?

27:02 Right?

27:02 Like.

27:02 Yeah.

27:03 This is like Endgame or something.

27:05 Okay.

27:05 Yeah.

27:06 I don't know the movie.

27:06 Like apparently I stopped watching movies at some point.

27:09 Now I don't.

27:09 I'm out of touch.

27:11 So anyway, the title is when your code stopped working during an interview or it could be

27:16 a demo presentation or whatever.

27:18 Like you want to, you want to tell us what this is about, what that's going on here?

27:21 So he's, he's, he's looking back at Banner,

27:25 who's the Hulk.

27:26 He says, dude, you're embarrassing me in front of the wizards.

27:29 Yeah.

27:30 Because, yeah.

27:31 Because Banner wasn't able to become the Hulk.

27:33 So at the time.

27:34 Right, dude.

27:35 Don't, don't embarrass me in front of the wizards.

27:37 I just, I love to think of programmers as kind of like the modern day wizards.

27:40 Like we can think of things and then poof, they, they kind of come into existence.

27:43 Yeah.

27:44 It's good.

27:45 And also while working on that Git course, I had this pretty fun experience.

27:49 Like right while I was recording it.

27:51 Nice.

27:53 And I'm just sitting there and then.

27:55 GitHub was down.

27:56 How often does GitHub itself go down?

27:58 But no.

27:59 Oh no.

27:59 There's like an, the Octocat is falling, like, with a 500 sign in its hands.

28:04 Whoops.

28:05 Which of course made me.

28:07 I love the.

28:07 Redo that section of the course.

28:09 Yeah.

28:09 I like the expression on your face for that.

28:11 It's like.

28:12 Yes.

28:13 Exactly.

28:13 People seem to really like that tweet.

28:15 I'll, I'll put it in the show notes so people can check it out.

28:17 Anyway, dude, don't embarrass me in front of the wizards.

28:20 That's what I got for you.

28:21 Yeah.

28:22 Good.

28:23 Good, good.

28:24 Well, thanks.

28:25 Thanks a lot again.

28:26 It's a great show.

28:28 Yeah.

28:28 Sure was.

28:29 Thanks.

28:29 Thanks, Brian.

28:30 Thanks to everyone who came.