#306: Some Fun pytesting Tools

About the show

Sponsored by Microsoft for Startups Founders Hub.

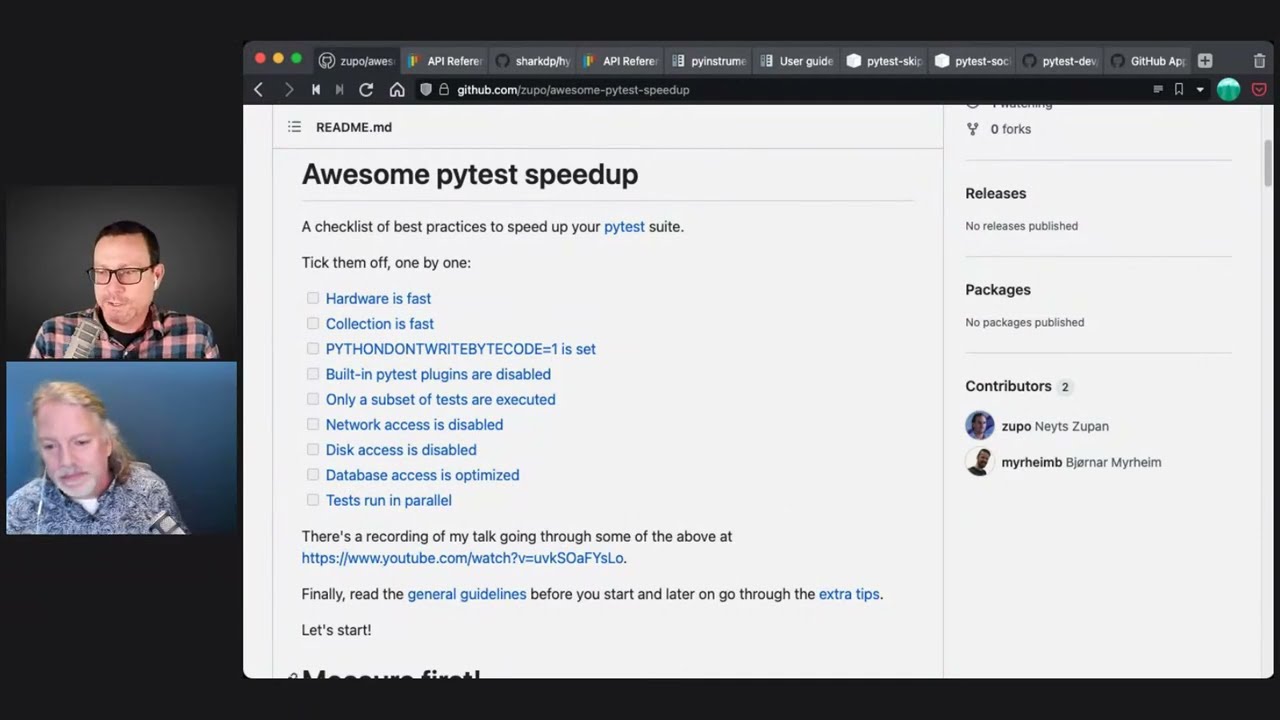

Brian #1: Awesome pytest speedup

- Neyts Zupan

- A checklist of best practices to speed up your pytest suite.

- Also given as a talk at Plone Namur 2022

- Measure first

- Then make sure (all items have explanations)

- Hardware is fast

- use a faster computer

- also try a self-hosted runner

- Seriously, a dedicated computer (or a few) for making test runs faster might be worth it. Cloud CI resources are usually slower than local hardware, and even expensive VM farms are often slower. Try local.

- Collection is fast

- utilize <code>norecursedirs</code> and specify the location of the tests, either on the command line or with <code>testpaths</code>

- PYTHONDONTWRITEBYTECODE=1 is set

- might help

- Built-in pytest plugins are disabled

- try <code>-p no:pastebin -p no:nose -p no:doctest</code>

- Only a subset of tests are executed

- Especially when developing or debugging, run a subset and skip the slow tests.

- Network access is disabled

- <code>pytest-socket</code> can make sure of that

- Disk access is disabled

- interesting idea

- Database access is optimized

- great discussion here, including using truncate and rollback.

- Tests run in parallel

- pytest-xdist or similar

- Then keep them fast

- monitor test speed
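
Several of the checklist items above are plain command-line flags, so they can be gathered in one place. The following is a minimal sketch, not something from the article: the helper names `fast_pytest_args` and `run_fast` and the default `tests` directory are hypothetical, and `-n auto` assumes pytest-xdist is installed.

```python
import sys


def fast_pytest_args(test_dir="tests", parallel=False):
    """Build a pytest argument list that applies several checklist items."""
    args = [
        test_dir,             # point pytest at the tests instead of the repo root
        "-p", "no:pastebin",  # disable built-in plugins that are not needed
        "-p", "no:nose",
        "-p", "no:doctest",
        "--durations=10",     # measure first: report the 10 slowest tests
    ]
    if parallel:
        # Assumption: pytest-xdist is installed; "-n auto" picks the worker count.
        args += ["-n", "auto"]
    return args


def run_fast(test_dir="tests", parallel=False):
    """Run pytest in-process with the arguments above (requires pytest)."""
    # In-process analogue of setting PYTHONDONTWRITEBYTECODE=1.
    sys.dont_write_bytecode = True
    import pytest  # imported lazily so fast_pytest_args has no dependencies

    return pytest.main(fast_pytest_args(test_dir, parallel))
```

Calling `run_fast("tests")` before and after each change, and comparing the reported durations, follows the "measure first" advice; whether any single flag helps depends on the project.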

Michael #2: Strive to travel without a laptop

- Prompt from Panic for SSH on iThings

- github.dev for an editor on iPad

- Push to branch for continuous deployment

- BTW, Apple could just make M1 iPads boot to macOS rather than chase silly multi windowing systems (stage manager, etc, etc)

Brian #3: Some fun tools from the previous testing article

- hyperfine for timing the whole suite

- <code>pytest --durations 10</code> for finding test times of the slowest 10 tests

- use <code>--durations 0</code> to find times of everything, sorted

- pyinstrument for profiling with nice tree structures

- pytest-socket disables network calls with <code>--disable-socket</code>, helping to find tests that use network calls.

- pyfakefs, a fake file system that mocks the Python file system modules. “Using pyfakefs, your tests operate on a fake file system in memory without touching the real disk.”

- BlueRacer.io

Michael #4: Refurb

- A tool for refurbishing and modernizing Python codebases

- Think of it as suggesting the pythonic line of code.

- A little sampling of what I got on Talk Python Training

- file.py:186:25 [FURB106]: Replace <code>x.replace("\t", " ")</code> with <code>x.expandtabs(1)</code>

- file.py:128:17 [FURB131]: Replace <code>del x[y]</code> with <code>x.pop(y)</code>

- file.py:103:17 [FURB131]: Replace <code>del x[y]</code> with <code>x.pop(y)</code>

- file.py:112:39 [FURB109]: Replace <code>not in [x, y, z]</code> with <code>not in (x, y, z)</code>

- file.py:45:5 [FURB131]: Replace <code>del x[y]</code> with <code>x.pop(y)</code>

- file.py:81:21 [FURB131]: Replace <code>del x[y]</code> with <code>x.pop(y)</code>

- file.py:143:9 [FURB131]: Replace <code>del x[y]</code> with <code>x.pop(y)</code>

- file.py:8:50 [FURB123]: Replace <code>list(x)</code> with <code>x.copy()</code>

- You don’t always want the change; you can suppress the recommendation with either a CLI flag or a comment.

Extras

Michael:

- Back on episode 54 in 2017 we discussed Python apps as systemd daemons.

- Multiprocessing allows for a cool way to save on server memory

- Do the scheduled work in a <code>multiprocessing.Process</code>

- Here’s an example from Talk Python Training

- Completely rewrote search UI for Talk Python courses

- Google analytics is now illegal?

- Fleet is finally in public preview

- I’ll be on a JetBrains/PyCharm webcast Thursday.

Joke: Tests pass

Episode Transcript

00:00 Hello and welcome to Python Bytes, where we deliver Python news and headlines directly to your earbuds.

00:05 This is episode 306, recorded October 18th, 2022.

00:11 I'm Michael Kennedy.

00:12 And I'm Brian Okken.

00:13 Very exciting to have a whole bunch of things to share this week.

00:17 Also, I want to say thank you to Microsoft for Startups for sponsoring yet another episode.

00:22 Brian, we've had a very long, dry summer here in Oregon.

00:28 And I was afraid that we would have like terrible fires and it'd be all smoky and all sorts of badness.

00:33 And there've been plenty of fires in the West, but not really around here for us this summer.

00:37 We kind of dodged the bullet until like today.

00:39 It's a little smoky today.

00:41 We smoke, go inside.

00:44 Yeah.

00:45 I thought we dodged it, sadly.

00:46 No.

00:47 So I think it's affecting my voice a little bit.

00:49 So apologies for that.

00:50 We'll put that filter on you and we'll make you sound like someone else and you'll be fine.

00:55 Yeah.

00:56 Yeah.

00:57 It's also affecting me.

00:58 So who knows?

00:59 But anyway, we'll make our way through.

01:03 We will fight through the fire to bring you the Python news.

01:06 Hopefully they get that actually put out soon.

01:09 All right.

01:09 The post office.

01:10 Yeah.

01:10 Let's kick it off.

01:12 What's your first thing?

01:12 So I've got, let's put it up.

01:15 So I've got to stream.

01:18 I've got Awesome pytest speedup.

01:20 So this is awesome.

01:21 Yeah.

01:23 So actually, some people may have noticed that Test & Code is not really going on lately.

01:28 And so one of the things that makes it easier for me is when I see cool testing related articles,

01:34 I don't have a decision anymore.

01:35 I can just say, hey, it's going to go here.

01:37 Now, Test & Code will eventually pick up again, but I'm not sure when.

01:42 So for now, if I find something cool like this article, I'll bring it up here.

01:46 So this is.

01:47 I think I make you show up every week.

01:48 Yeah.

01:49 Talk about fun stuff anyway.

01:50 So this is a GitHub repo.

01:54 And we're kind of seeing more of this of people writing instead of blogging.

01:59 They just write like a readme as a repo.

02:03 I know.

02:04 This is such a weird trend.

02:05 I totally get it.

02:06 And it's good.

02:07 But it's also weird.

02:08 But it's kind of neat that people can update it.

02:10 So if they can just keep it up and you can see people.

02:13 That's right.

02:13 You get a PR to your blog post.

02:15 That's not normally how it goes.

02:16 Yeah.

02:16 So I'm not sure.

02:18 But it's probably harder to throw Google Analytics at it, right?

02:23 Oh, yeah.

02:23 We'll see whether you should do that or not.

02:26 So anyway, this comes to us from Neyts Zupan.

02:31 Cool name, by the way.

02:34 And we'll include a link in the show notes to a talk he gave at Plone Namur 2022.

02:43 So just recently.

02:45 Anyway, so he goes through best practices to speed up your pytest suite.

02:52 And he just kind of lists them all at the top here, which is nice.

02:56 Hardware first.

02:58 Well, first of all, when he goes into the discussion, he talks about measuring first.

03:02 So before you start speeding anything up, you should measure because you want to know if your changes had any effect.

03:10 And if it's making support a little bit weirder, then you don't want to make the change if it's only marginal.

03:15 So I like that he's talking about that of like each step of the way here, measure to make sure it makes a difference.

03:23 Right.

03:23 So first off, and I'm glad he brought this up, is check your hardware.

03:28 Make sure you've got the fast hardware if you have it.

03:33 So one of the, and I've noticed this before as well, is, so here we go, measure first.

03:40 So some CI systems allow you to have self-hosted runners, and it's something to consider.

03:46 Whether your CI is in the cloud or you've got, like, a server with some virtual machines around to run your test runners,

03:57 they're not going to be as fast as physical hardware, if you've got some hardware lying around that you can use.

04:03 So that's something to consider to throw hardware at it.

04:06 And then test collection time. One of the problems with the speed of pytest is that if you run it from the top-level directory of a project and you've got tons of documentation and tons of source code, it's going to look everywhere.

04:21 So don't let it look in those places.

04:23 So there's, there's ways to turn that off.

04:25 So with norecursedirs, and giving it the directory.

04:29 I also wanted to point out, he didn't talk about this in the article, but I want to point out that something to use is, oh, it went away.

04:37 testpaths.

04:38 So use testpaths to say specifically.

04:41 So norecursedirs essentially says avoid these directories, but testpaths pretty much says, this is where the tests are.

04:49 Look here.

04:50 So those are good.

04:51 Nice.

04:52 Yeah.

04:52 I've done that before on some projects, like on the Talk Python Training website, where it's got a ton of text files and things lying around.

05:00 And I've done certain things like that to tell, you know, pytest and PyCharm and other tools not to look in those places where, like, there's no code, but there's a ton of stuff and they're going to go hunting through it.

05:13 Yeah.

05:13 So like, I really sped up the startup time for Pyramid scanning for files that have route definitions in them, right?

05:21 For URL endpoints.

05:22 Cause it would look through everything.

05:24 Apparently doesn't matter.

05:25 At least looking for files through directories with tons of stuff.

05:28 And, and this is like, it makes a big difference if you have a large project for sure.

05:32 Yeah.

05:32 It's significant.

05:33 So it was something to think about.

05:36 And documentation too.

05:38 You don't, unless you're really testing your documentation, you don't need to look there.

05:41 So hardware fast, make collection fast.

05:45 This one is something I haven't used before, but I'll play with it.

05:49 PYTHONDONTWRITEBYTECODE, an environment variable.

05:54 I guess the article comments that it might not make a big difference for you, but it might.

05:58 So, you know, I don't know.

06:02 So Python writes the bytecode normally, and maybe it'd be faster if you didn't do that.

06:08 What during tests, maybe we'll try.

06:10 There's a way to disable pytest plugins. Yeah, let's just go to built-in pytest plugins.

06:18 You can say, like, no nose or no doctest.

06:22 If you're running those.

06:22 I haven't noticed that it speeds it up a lot, but it's, again, it's something to try.

06:28 And, and then a subset of tests.

06:30 So, this is especially important if you're in a TDD style and that's, and one of the things

06:38 that I think some people forget is your tech, if you've got your tests organized, well, you

06:42 should be able to run a subset anyway.

06:44 Cause you're, you've got like the feature you're working on is in a subdirectory of everything

06:48 else.

06:49 And just run those when you're working on that feature and then you don't run the whole

06:53 suite.

06:53 There's a discussion, and this goes along with the unit tests mostly,

06:58 but disable networking, unless you're intending to have your code use network

07:05 connections.

07:05 You can disable that for a set of tests or the whole suite.

07:10 And then also disk access, trying to limit that.

07:14 And he includes a couple of ways to, to ensure those.

07:19 and then, a really good discussion, a fairly chunky discussion on database access

07:25 and optimization to databases, including discussion around, rollback and, and there was

07:33 something else that I hadn't seen before.

07:35 Let me see if I can remember.

07:36 Yeah.

07:37 There's some interesting things.

07:38 I think, I know you've spoken about it in your pytest course, about using fixtures

07:43 for like setup of, of those common, common type things.

07:47 Right.

07:47 So one of the things I am not familiar with is truncate.

07:50 Have you used the database truncate before?

07:52 No.

07:53 So apparently it allows you to set the whole database up, but delete all the stuff

07:59 out of it, like, empty the tables.

08:00 And, I mean, if a big chunk of the work of setting up data

08:08 is getting all the tables correct, then truncate might be a good way to clean them

08:12 out and then refill them if you need to.
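
The two database strategies mentioned here, rolling back after each test and emptying tables while keeping the schema, can be sketched with the standard-library `sqlite3` module. This is an illustration under assumptions rather than the article's code: the `users` table and all helper names are hypothetical, and SQLite has no `TRUNCATE` statement, so `DELETE FROM` stands in for it.

```python
import sqlite3


def make_db():
    """Create the schema once; this is the expensive step to avoid repeating."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.commit()
    return conn


def run_with_rollback(conn, test_body):
    """Run test_body, then roll back whatever it wrote to the database."""
    try:
        return test_body(conn)
    finally:
        conn.rollback()


def truncate_all(conn, tables=("users",)):
    """Empty the tables but keep the schema in place."""
    for table in tables:
        # Table names are trusted constants here, never user input.
        conn.execute(f"DELETE FROM {table}")
    conn.commit()


def count_users(conn):
    """Return the number of rows currently visible in the users table."""
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
```

In a pytest suite, `make_db` would typically sit in a session-scoped fixture and the rollback or truncate step in a function-scoped one, so the schema is built only once per run.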

08:14 But, also, yeah, like you said, paying attention to, to fixtures, that's, it's really good.

08:21 And then the last thing he brings up is just run them in parallel.

08:24 By default, pytest runs each test one at a time.

08:28 And if you've got a code base that you're testing that can allow it, like you're not testing a hardware

08:34 resource or something, then go ahead and turn that on,

08:39 use xdist or something else, and run them in parallel.

08:43 So, really good list.

08:45 and I'm glad he put it together.

08:47 Also very entertaining talk.

08:48 So we'll give it, give his talk a look.

08:50 Yeah, absolutely.

08:52 Brandon out in the audience says people at work have been trying to convince me that

08:56 tests should live next to the file they are testing rather than in a test directory.

09:01 You know, I created a test directory that mirrors my app folder structure, with my test in there.

09:05 Any opinions?

09:06 I don't like that, but, Neither do I, honestly.

09:11 If you like it, I guess.

09:13 Okay.

09:13 I've, I've heard that before, but I know I haven't heard people in Python recommending

09:17 that very often.

09:18 Yeah.

09:19 For me, I feel, I understand why, like, okay, here's the code.

09:23 Here's the test.

09:24 Maybe, maybe the test can be exactly isolated to what is only in that file.

09:30 But sometime, you know, like as soon as you start to blend together, like, okay, well,

09:34 this thing works with that class to achieve its job.

09:39 But it, you know, you kind of, it kind of starts to blur together and like, well, what

09:43 if those are in the wrong places?

09:44 Well, now it's like half here.

09:46 And I don't know.

09:47 It just, it, it leads to like lots of, I don't know.

09:51 It's like trying to go to your IDE and say, I have these seven methods.

09:54 Please write the test for it.

09:55 And it says test function one, test function two, test function three.

09:58 You're like, no, no, no.

09:59 That is not really what you're after.

10:01 But I feel it kind of leads, leads towards that.

10:04 Like, well, here's the file.

10:05 Let's test all the things in this file.

10:07 And it, which is not necessarily the way I would think about testing.

10:10 Well, also, are you really test?

10:12 I mean, it kind of lends itself to starting to test the implementation instead of testing

10:17 the behavior.

10:18 Yes, exactly.

10:19 Because you might have, if you've got a file that has no test associated with it,

10:23 somebody might say, well, where is the test for that?

10:25 And you're like, well, that file is just an implementation detail.

10:28 It's not something we need to test because you, it's, you can't access it directly from the

10:33 API.

10:33 So.

10:33 Right.

10:34 It's completely covered by these two other, other tests.

10:37 And by the way, there are other folders.

10:38 Go find them.

10:39 Yeah.

10:40 Also the stuff you're speaking about here by like making collection fast and such also

10:46 is a little bit tricky.

10:47 Potentially sharing fixtures might be a little more tricky that way.

10:51 I don't know.

10:52 My, my vote is, is to not mix it all together.

10:55 Plus, do you want to ship your test code with your product?

10:58 Maybe you do, but often you don't.

11:00 Is it harder?

11:01 Harder if they're all woven together.

11:02 That's true.

11:03 Yeah.

11:04 Yeah.

11:05 So anyway, that's the same thing.

11:08 Also, Henry Schreiner out there kind of says, I don't like distributing tests in wheels,

11:12 only SDists.

11:13 So I like a test folder as well.

11:14 Yeah.

11:15 I'm with you.

11:16 I think Brandon, the vote here is test folder, but you know, that's just, just us.

11:22 Yeah.

11:23 Awesome.

11:24 All right.

11:25 Well, this is, yeah, that's a good find.

11:26 You want to hear my first one?

11:28 This is a, this is a bit of a journey.

11:30 It's a bit of a journey.

11:31 So let's start here.

11:33 So I have a perfectly fine laptop that I can take places if I need to for work.

11:41 Take it to the coffee shop to work.

11:42 If I'm going on like a two week vacation, it's definitely coming with me.

11:46 Right.

11:47 It's even if my intent is to completely disconnect, I still have to answer super urgent emails.

11:52 If the website goes down, any of the many websites I seem to be babysitting these days,

11:59 like I've got to work on it.

12:00 Like there could be urgent stuff.

12:02 Right.

12:02 So I just, I take it with me, but I'm on this mission to do that less.

12:06 Right.

12:07 Cause I have a 16 inch MacBook pro.

12:09 It's pretty heavy.

12:09 It's pretty expensive.

12:10 I don't necessarily want to like take it camping with me, but what if, what if something goes

12:15 wrong, Brian?

12:16 What if I got to fix it?

12:17 Do I really want to drive the four hours back?

12:19 Because I got a message that like, you know, the website's down and everyone's upset.

12:23 Can't do their courses or they can't get the podcast.

12:25 No, I don't want that.

12:26 So I would probably take the stupid thing and try to not get it wet.

12:29 So I'm on this mission to not do that.

12:32 So I just wanted to share a couple of tools and, you know, people, if they've got thoughts,

12:35 I guess probably the YouTube stream chat for this to be the best or on Twitter, they could

12:40 let me know.

12:41 But I think I found like the right combination of tools that will let me just take my iPad

12:46 and still do all the dev opsy life that I got to lead.

12:51 Okay.

12:51 So that it's not good for answering emails.

12:53 You know, I have like minor RSI issues and I can't type on an iPad, not even a little

12:58 like keyboard that comes with it.

12:59 Like I've got my proper Microsoft ergonomic sculpt and you can plug that into an iPad.

13:06 But once you start taking that, you know, like, well, you might as well just take the computer.

13:09 So, two tools I want to give a shout-out to. Prompt by Panic. Panic is a Portland company.

13:15 So shout out to the local team.

13:17 Is it at the disco or?

13:19 Exactly.

13:21 They don't really freak out that much at the disco.

13:23 Okay.

13:23 I don't even panic there.

13:24 But Prompt is an SSH client for iOS, in particular for iPad, but you could, I mean, if you wanted

13:30 to go extreme, you could do this on your phone, you know, how, how far are you going camping,

13:34 right?

13:34 Or where, where are you going?

13:35 And so this lets you basically import your SSH keys and do full on SSH.

13:42 SSH like you would in your iTerm2 or Terminal or whatever.

13:47 Turns your iPad into a dumb terminal.

13:48 Yeah.

13:49 And it does.

13:49 So like you can easily log into, you know, the Python bytes server and over SSH do all the

13:57 things that you need to do.

13:58 So, you know, you've got to get into the server and you've got to like, okay, well, I really

14:00 have to just go restart the stupid thing or change a connection string.

14:04 Cause who knows what, right?

14:05 You could, you do it.

14:07 It seems to work pretty well.

14:08 The only complaint that I have for it is it doesn't have nerd

14:14 fonts.

14:15 So my Oh My Posh, dude, this is serious business.

14:18 Don't laugh.

14:18 My nerd fonts, my, like, I can't do pls.

14:21 I can't do Oh My Posh and get like the cool, like shell prompt with all the information.

14:28 No, it's all just boxes.

14:29 It's rough.

14:30 No, it's fine.

14:31 It would be nice, but it does have cool things.

14:33 Like if you need to press control shift that, or, you know, it has like a special way to pull

14:39 up the, all those kinds of keys.

14:41 So you press control and then some other type of thing, or, you know, it has up arrow, down

14:45 arrow.

14:45 Like if you want to cycle through your history, it's got a, it's got a lot of cool features

14:48 like that where, you can kind of integrate that.

14:52 So it works.

14:52 I think it's going to work.

14:53 I think this is the one half of the DevOps story.

14:56 Okay.

14:57 The other, the other part is, oh my goodness.

14:59 What if it's a code problem?

15:02 Do I really want to try to edit code over this prompt thing through the iPad on, you know,

15:09 in like Emacs or what am I getting?

15:11 No, I don't want to do that.

15:12 So the other half is GitHub in particular, the VS Code integration into GitHub.

15:20 So if you remember, like here I have pulled up on the screen, just with any public repo

15:25 or your own private ones.

15:27 This is my jinja_partials thing for, like, basically integrating HTMX with Flask, but you can

15:33 press the dot.

15:34 If you press dot, it turns that whole thing into a cloud hosted VS Code session.

15:41 That's awesome.

15:42 Right.

15:42 Even has autocomplete.

15:43 So if I hit like dot there, you can see it's on my autocomplete.

15:46 That's pretty cool.

15:47 That's pretty cool.

15:48 But how do you press dot when you're on a webpage on an iPad? There is no dot because you

15:55 can't pull up the keyboard.

15:56 The only thing you can do to pull up the keyboard is go to an input section.

15:59 And once you're in an input, well, that just types dot.

16:01 It doesn't do that.

16:01 You're like, why?

16:03 So here's the other piece.

16:04 All right.

16:05 Here's the other piece.

16:05 So you go over here and you change github.com/mikeckennedy/jinja_partials to

16:10 github.dev slash whatever.

16:12 Boom.

16:12 Done.

16:13 So if you got to edit your code, you just go change the dot com to dot dev and you have an

16:18 editor.

16:19 You can check it back in.

16:20 Like in my setup, if I commit to the production branch, it kicks off a continuous deployment,

16:25 which will like automatically restart the server and reinstall like the things that might

16:30 need.

16:30 Like if it has a new dependency or something, I could literally just come over here, make

16:35 some changes, do a PR over to the production branch or push somehow over to the production

16:39 branch and it's done.

16:41 It's good to go.

16:41 Isn't that awesome?

16:42 Just edit live.

16:43 Just, you know, edit your server live.

16:45 No.

16:46 Yeah.

16:47 I saw somewhere, somebody was complaining about Prompt, saying it's really hard for

16:52 me to edit my code on the server.

16:53 I'm like, why would you? No, it should be hard.

16:56 You don't do that.

16:57 Don't do that.

16:58 Yeah.

16:59 So I went to try this, but I have to do the two factor authentication to get

17:04 into my account.

17:05 So, yeah.

17:06 Yeah.

17:06 Yeah.

17:07 You got to do that.

17:07 Brandon also says, Hey, I'll buy you a keyboard case.

17:10 I absolutely hear you.

17:11 And I would love, you have no idea how jealous I am of people that can go and type on their

17:16 laptops and type on these small things like RSI.

17:18 I would be, I would be destroyed in like an hour or two.

17:22 If I did it, it's like, it's not a matter of, do I want to get the keyboard?

17:25 Like I just can't.

17:27 So anyway, it's not that bad to be me, but I'm not, I'm not typing on like small square

17:32 keyboards.

17:33 It just doesn't work.

17:33 It's just something I can't do.

17:34 Okay.

17:35 So just, exactly.

17:40 No, I just, I like, because when I was 30, my hands got messed up and they just, they

17:45 almost recovered, but not a hundred percent.

17:47 Right.

17:47 So I know you got more going on than I do though.

17:49 So I just got back from four days off and I took the iPad.

17:53 And I had to answer a few emails, but for me, for these short emails, the little

18:01 keyboard, the cover thing, works fine.

18:04 Even though they're, those are expensive when you add, well, I want an iPad, but I also want

18:09 the keyboard thing.

18:10 And I want the pencil.

18:11 Suddenly it's like almost twice as much.

18:14 It is.

18:15 It is.

18:15 Absolutely.

18:16 And you know, just for people who've been paying attention for the last two hours,

18:21 Apple just released new iPads with M2s.

18:23 So people can go check that out if they want to spend money.

18:25 I'm happy with mine.

18:27 I'm going to keep it.

18:27 All right.

18:28 Before we move on to the next thing, Brian.

18:30 Okay.

18:31 Let me tell you about our sponsor this week.

18:33 So as has been the case as usual, thank you so much.

18:36 Microsoft for Startups Founders Hub is sponsoring this episode.

18:41 We all know that starting a business is hard. By a lot of estimates,

18:44 over 90% of startups go out of business in just the first year.

18:48 And there's a lot of reasons for that.

18:49 Is it that you don't have the money to buy the resources?

18:52 Can you not scale fast enough?

18:54 Often it's like you have the wrong strategy or do you not have the right connections to,

18:59 you know, get the right publicity or you have no experience in marketing?

19:02 Lots, lots of problems, lots of challenges.

19:06 And as software developers, we're often not trained in those necessary areas like marketing,

19:13 for example.

19:13 But even if you know that, like there's others, right?

19:15 So having access to a network of founders, like you get in a lot of accelerators, like Y Combinator,

19:21 would be awesome.

19:22 So that's what Microsoft created with their Founders Hub.

19:25 So they give you free resources to a whole bunch of cloud things, Azure, GitHub, others,

19:32 as well as very importantly, access to a mentor network where you can book one-on-one calls with people

19:40 who have experience in these particular areas.

19:43 Often many of them are founders themselves and they've created startups and sold them

19:47 and they're in this mentorship network.

19:50 So if you want to talk to somebody about idea validation, fundraising, management and coaching,

19:56 sales and marketing, all those things, you can book one-on-one meetings with these people

20:00 to help get you going and make connections.

20:02 So if you need some free GitHub and Microsoft cloud resources, if you need access to mentors

20:08 and you want to get your startup going, make your idea a reality today with the support from Microsoft for Startups Founders Hub.

20:15 It's free to join.

20:16 It doesn't have to be venture backed.

20:18 It doesn't have to be third-party validated.

20:20 You just apply for free at pythonbytes.fm/foundershub2022.

20:25 The link is in your show notes.

20:27 Thanks a bunch to Microsoft for sponsoring our show.

20:31 What's next, Brian?

20:32 Well, that article that I already read about the speeding up pytest, it had a whole bunch of cool tools in it.

20:38 So I wanted to go through some of the tools that were in the article that I thought were neat.

20:42 One of them for profiling and timing was a thing called hyperfine.

20:47 And I don't think it's a Python thing, but like, for Mac,

20:53 you brew install it.

20:55 But one of the things it does is you can give it, you give it like two things and it runs both of them

21:02 and it can run it multiple times and then give you statistics comparing them.

21:08 So it's a really good comparison tool to, you know, like if you're testing your test suite to see how long it runs.

21:15 May as well run it a couple of times and see.

21:17 Yeah.

21:18 And for people who didn't see yet the example from that first article you covered,

21:23 a lot of those were CLI flags, right?

21:26 Like -p no:nose for disabling the plugin and so on.

21:32 So you could have two commands on the command line where you like basically change the command line arguments

21:38 to determine those kinds of things, right?

21:40 Yeah.

21:41 Like, yeah, exactly.

21:42 So run it a couple of times and run it, run the test suite a couple of times each

21:46 and just to see if I add the, if it had these no flags or this other flag or with the environmental variable.

21:53 Actually, I don't know how you could do that in there.

21:55 You can set environmental variables in the command line maybe.

21:58 Yeah, I'm sure that you can somehow.

21:59 Yeah.

22:00 In line an export statement or something, who knows?

22:03 At the very least, you can run the same command twice.

22:06 You can run it, set the environmental variable and then run it again to see if it makes a difference.

22:10 So, yeah.

22:11 So that, that was neat.
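
On the open question of environment variables: hyperfine is a separate command-line tool, so the sketch below is a standard-library stand-in for the same idea, not hyperfine itself. It runs one command several times with and without extra environment variables and compares the mean wall-clock time. The function names are hypothetical.

```python
import os
import statistics
import subprocess
import sys
import time


def time_command(cmd, runs=3, extra_env=None):
    """Run cmd `runs` times and return the wall-clock durations in seconds."""
    env = {**os.environ, **(extra_env or {})}
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # Output is discarded; only the elapsed time matters here.
        subprocess.run(
            cmd,
            env=env,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            check=False,
        )
        durations.append(time.perf_counter() - start)
    return durations


def compare(cmd, runs=3, extra_env=None):
    """Return (baseline_mean, variant_mean) for cmd without and with extra_env."""
    baseline = time_command(cmd, runs)
    variant = time_command(cmd, runs, extra_env)
    return statistics.mean(baseline), statistics.mean(variant)
```

For example, `compare([sys.executable, "-m", "pytest", "tests"], extra_env={"PYTHONDONTWRITEBYTECODE": "1"})` would time a suite both ways, assuming pytest is installed and the tests live in a `tests` directory. A handful of runs gives only a rough comparison.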

22:14 I don't know why I've got the API reference in here.

22:16 Oh, the thing I wanted to talk about was durations.

22:20 So let me find that.

22:21 I think I lost it.

22:22 So we did talk about duration, durations.

22:26 Oh, well.

22:27 Oh, here it is.

22:29 So durations, if you give it a number, like --durations 10, pytest will give you like

22:34 the 10 slowest tests and tell you how slow they are.

22:37 But if you give it zero, it just does all of it.

22:40 But the other thing that's been fairly recent, it wasn't there when I started using

22:46 pytest, is --durations-min.

22:48 So when you give it durations with a zero, it

22:55 times everything, but that might be overwhelming.

22:58 So you can give it a minimum duration in seconds to only include the tests that are

23:04 over a second or something like that.

23:06 Right.

23:07 Right.

23:07 It was really, if it's 25 milliseconds, like just, I don't want to see it.

23:12 Yeah.

23:12 I'm not going to spend time trying to speed that up, but yeah.

23:15 Another cool thing brought up was pyinstrument, which is a very pretty way to

23:22 look at, you know, the times that you're spending on different things.

23:26 It's not just for testing; you could use it for other stuff.

23:28 But apparently, in the user guide, there is specifically how to profile your tests

23:34 with pytest using pyinstrument.

23:36 So that's a cool, cool bit of documentation.

23:39 This doesn't, and this doesn't actually look obvious.

23:41 So maybe I'm, maybe I'm looking at this wrong, but I'm glad, I'm glad, I'm glad they wrote

23:46 this up.

23:47 So this is kind of cool.

23:48 Basically profiling your, oh, interesting.

23:51 And you do it as a fixture.

23:53 Yeah.

23:54 And so you create the profiler, you start the profiler, then you yield nothing, which triggers

24:01 the test to run.

24:02 And then you stop the profiler and do the output.

24:03 That's really cool.

24:04 Yeah.

24:05 Pretty cool way to do that.

24:06 So profiling each test.

24:08 Yeah.

24:08 Yeah.

24:10 It's a bit mind bending on the coroutines.

24:12 So it's kind of cool.

24:13 They're using it as a fixture because, if you had the fixture set up, like, it's set

24:19 up by default at function scope.

24:20 So it'll go around every function.

24:22 But if you set it up at module scope, you could just find the slow test modules in your

24:27 system, which might be an easier way to speed things up.

24:30 If you're looking.
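
The start, yield, stop shape described above can be sketched without extra dependencies. The block below uses the standard library's `cProfile` as a stand-in for pyinstrument and a context manager as a stand-in for a pytest fixture, so it is not the code from the pyinstrument user guide. The name `profiled` and the `report` dictionary are hypothetical.

```python
import cProfile
import contextlib
import io
import pstats


@contextlib.contextmanager
def profiled(label, report):
    """Start a profiler, yield to the wrapped code, then stop and store a report."""
    profiler = cProfile.Profile()
    profiler.enable()  # setup: start profiling
    try:
        yield  # the wrapped code (the test, in a fixture) runs here
    finally:
        profiler.disable()  # teardown: stop profiling
        buffer = io.StringIO()
        stats = pstats.Stats(profiler, stream=buffer)
        stats.sort_stats("cumulative").print_stats(5)
        report[label] = buffer.getvalue()
```

In a `conftest.py`, the same body would sit in a generator function decorated with `@pytest.fixture(autouse=True)`, with pyinstrument's `Profiler` and its `start()` and `stop()` methods in place of `cProfile`. Changing the fixture's `scope` from the default `function` to `module` would then profile whole test modules at once.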

24:31 Anyway.

24:31 Oh, I was thrilled to see my little pytest-skip-slow plugin in there.

24:39 I didn't even come up with the idea for the code; that came out of the pytest documentation,

24:45 but it wasn't a plugin yet.

24:46 But I developed this plugin during writing the second edition of the book and it showed up

24:52 in his article, which is cool.

24:54 More interesting is pytest-socket, which is a plugin that can turn off,

24:59 it just turns off Python socket calls.

25:05 And then it raises a particular exception.

25:08 So if you just install it, it doesn't turn things off.

25:12 You have to pass --disable-socket to your test suite and then it turns off accessing the

25:18 external world.

25:19 So this is a kind of a cool way to easily find out which tests are failing because your network

25:25 is not connected.

25:26 So go figure out if you want to say, definitely don't talk to the network or don't talk to

25:31 the database.

25:31 Turn off the network and see what happens.

25:34 Yeah.

25:34 And then you can, I mean, but even if you did want part of your test suite to access the

25:37 network, you could test it to make sure that there aren't other parts of your test suite

25:41 that are accessing it when they shouldn't.

25:43 So it'd be cool debugging.
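To make the idea concrete, here is a minimal stdlib-only sketch of "turn off sockets and see which tests complain". It is not pytest-socket's implementation; `SocketBlockedError`, `sockets_disabled`, `runs_offline`, and `check_uses_network` are hypothetical names made up for this illustration.

```python
import socket
from contextlib import contextmanager


class SocketBlockedError(RuntimeError):
    """Hypothetical exception raised when code tries to open a socket."""


@contextmanager
def sockets_disabled():
    original = socket.socket

    def guarded(*args, **kwargs):
        raise SocketBlockedError("network access attempted while sockets are disabled")

    socket.socket = guarded        # every new socket now raises
    try:
        yield
    finally:
        socket.socket = original   # always restore the real class


def check_uses_network():
    # Hypothetical check that (wrongly) opens a socket.
    socket.socket(socket.AF_INET, socket.SOCK_STREAM).close()


def runs_offline(check):
    """Return True if the check completes without touching the network."""
    with sockets_disabled():
        try:
            check()
        except SocketBlockedError:
            return False
    return True
```

Calling `runs_offline` on each check gives a quick list of the ones that quietly depend on the network.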

25:45 And then file system stuff too.

25:47 There's pyfakefs, a fake file system that you can use to mock a file system.

25:52 So even things that you want to write, you don't actually have to have the files left around.

25:56 You can leave them around just long enough to test them.

25:58 So you can use this.

25:59 That's perfect.

26:00 And then the last thing I thought was cool was a thing called BlueRacer

26:04 that you can attach to GitHub CI to check merges.

26:13 So if somebody merges something, you can test it.

26:15 You can check to see if they've terribly slowed down your test suite.

26:18 So it kind of reports that. It doesn't, I don't think it fails on slower tests, but it

26:24 just sort of reports what's going on.

26:28 So yeah, it gives you a nice little report of, like, what happened on the branch and

26:34 if the test suite slowed down.

26:35 So yeah, thanks to know.

26:37 Yeah.

26:37 That's a cool project.

26:38 BlueRacer.

26:39 Nice.

26:39 Okay.

26:40 And it's automatic, which is lovely.

26:42 Yeah.

26:43 So nice.

26:45 All right.

26:46 Well, I've got one more item for us as well, Brian.

26:49 Yay.

26:49 So we talked a little bit about, you talked about pyupgrade, the last show, I think

26:55 it was.

26:55 Yeah.

26:56 And we talked about some of these other ones.

26:58 So I want to talk about, I'm going to give a shout out to refurb, very active project last

27:02 updated two days ago, 1,600 stars.

27:06 And the idea is basically you can point this at your code and it'll just say, here are

27:12 the things that are making it seem like the old way of doing things.

27:15 You should try doing it the newer way.

27:18 So, for example, here's something, it's, it's asking if the file name is in a list,

27:24 right?

27:25 One of the ways you can check if filename equals X or filename equals Y or filename

27:30 equals Z is to say if filename in [X, Y, Z], right?

27:35 And that's a more concise and often considered more Pythonic way.

27:39 But do you need a whole list allocated just to ask that question?

27:42 What about a tuple?

27:43 And here we have a with open(filename) as f, then contents = f.read().

27:49 And then we have the splitlines and so on.

27:51 And so, well, if you're using pathlib, just say Path.read_text.

27:55 You don't need the context manager.

27:57 You don't need two lines.

27:58 Just do it all in one.

28:00 And so on this simple little bit of code here, they just run refurb against your, this example,

28:05 Python file.

28:06 And it'll say, use a tuple (x, y, z) instead of a list [x, y, z] for that "in" case.

28:12 And then what I really like about it is it finds like exactly the pattern that you're doing.

28:17 So it says you're using with open(something) as f, then value = f.read(); use,

28:24 you know, value = Path(x).read_text(), one line.

28:29 It doesn't just say you should use Path.read_text.

28:32 It gives you, in the syntax, here's the multiple lines you did; do this instead.

28:37 Nice, right?

28:39 I don't, I don't think I've ever used read_text.

28:41 So I learned something new.

28:42 I hadn't either, but you know what? I do now.

28:44 It also says you can replace x.startswith(y) or x.startswith(z) with x.startswith((y, z)),

28:52 you know, y comma z as a tuple.

28:54 And that'll actually test, yeah, one or the other.

28:58 Okay.

28:59 It says, instead of printing with an empty string, there's no reason to allocate an empty string.

29:03 Just call print() with no arguments.

29:04 That has the same effect.
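The rewrites mentioned so far can be put side by side. This is a small sketch of the before and after forms as described in the conversation, not output copied from refurb; the function names and file names are hypothetical.

```python
import tempfile
from pathlib import Path


def is_known_old(filename):
    return filename in ["a.cfg", "b.cfg", "c.cfg"]   # list built just for the check


def is_known_new(filename):
    return filename in ("a.cfg", "b.cfg", "c.cfg")   # tuple literal instead


def read_old(path):
    with open(path) as f:
        contents = f.read()
    return contents


def read_new(path):
    return Path(path).read_text()                    # one call, no context manager


def is_comment_old(line):
    return line.startswith("#") or line.startswith("//")


def is_comment_new(line):
    return line.startswith(("#", "//"))              # a tuple of prefixes


# Likewise, print("") can be written as print(); both emit a bare newline.

with tempfile.TemporaryDirectory() as folder:
    sample = Path(folder) / "a.cfg"
    sample.write_text("# comment\nvalue = 1\n")
    same_contents = read_old(sample) == read_new(sample)
```

Each pair behaves the same on these inputs; the newer form is just shorter.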

29:05 And just, there's a whole bunch of things like that, that are really nice here.

29:08 And yeah, just, you can ask it to explain.

29:12 You're like, dude, what's going on here?

29:14 It told me to do one, two, three.

29:16 What's the motivation?

29:19 And you'll get kind of like a help text.

29:20 Here's the bad version.

29:21 Here's the good version.

29:22 Here's why you might consider that.

29:23 So for example, given a string, don't cast it again to a string, just use it.

29:28 Maybe more important is you can ignore errors.

29:32 So you can ignore, just do --ignore and a number.

29:35 There's one, which I'll show you in a second, which I've started adopting that for when I use

29:41 it, or you can put a # noqa.

29:43 And put a particular warning to be disabled.

29:46 Or you can just say, no, just leave this line alone.

29:49 Like, I just don't want to hear it.

29:49 Don't tell me.

29:51 So you can just say # noqa.

29:52 And then it'll catch, like, all of them.

29:55 Okay.

29:55 Okay.

29:56 So I ran this on the Python bytes website and we got this.

30:00 It says, there's a part where it like builds up a list and then takes some things out

30:06 trying to create a unique list.

30:07 I think this might be for like showing some of the testimonials.

30:11 It says, give me a list of a bunch of testimonials and then randomly pick some out of it.

30:16 And then it'll delete the one it randomly picked and then pick another.

30:18 So it doesn't get duplication.

30:20 There's, there's other things like that as well.

30:21 Also in the search.

30:23 And so I write del x[y] to get rid of the element or whatever it's called, item.

30:28 And they say, you know what?

30:30 On a dictionary, you should just use x.pop(y).

30:33 I think the del is kind of not obvious entirely what's going on.

30:36 Sometimes it means free memory.

30:37 Sometimes it means take the thing out of the list.

30:39 Right?

30:40 So they're like, okay, do this.

30:41 And I got the warning about "in" with square brackets instead of parentheses, the tuple version.

30:47 And then also I had a list and I wanted to make a separate shallow copy of it.

30:53 So I said, list of that thing.

30:54 And it said, you can just do list.copy or, you know, thing.copy().

30:58 And it'll create the same thing, but it's a little more discoverable what the intention is.

31:01 Probably also more efficient.

31:03 Probably do it all at once instead of loop over it.

31:05 Who knows?
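The testimonial-picking pattern described above can be sketched as follows. The site's actual code is not shown in the episode, so `pick_unique` and the sample data are hypothetical; the sketch only illustrates `.copy()` in place of `list(...)` and `.pop(index)` in place of `del pool[index]`.

```python
import random


def pick_unique(items, count, rng):
    """Pick up to `count` distinct items without modifying the caller's list."""
    pool = items.copy()              # shallow copy; same result as list(items)
    picked = []
    for _ in range(min(count, len(pool))):
        index = rng.randrange(len(pool))
        picked.append(pool.pop(index))   # remove and return in one step
    return picked


testimonials = ["great show", "love it", "so useful", "fun hosts"]
chosen = pick_unique(testimonials, 3, random.Random(0))
```

For this particular job the standard library's `random.sample(items, k)` also returns `k` distinct picks in a single call.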

31:05 Anyway, this is what I got running against our stuff like this.

31:09 And you know what?

31:10 I fixed it all.

31:10 Cool.

31:11 Except there's this one part where it's got a whole bunch of different tests to transform a string.

31:18 And it's like line after line of dot replace, dot replace, dot replace, dot replace, dot replace.

31:22 One of those lines is to replace tabs with spaces.

31:26 Then eventually it finds all the spaces, turns them into single dashes and condenses them and whatnot.

31:31 And it says, oh, you should change x.replace("\t", " "),

31:37 so tab with a space, replace that with x.expandtabs(1).

31:42 I'm like, no.

31:44 Maybe if it was just a single line where the only call was to replace the tabs, but there's like seven replaces.

31:53 And they all make sense.

31:55 Replace tabs, replace lowercase with that.

31:58 Like all these other things.

32:00 And if you just turn one of them into expandtabs, it's like, where did this come from in the sequence of replacements?

32:06 Like, why would you do this one thing?

32:07 Yeah.

32:08 And so I just put a noqa on that one and fixed it up.

32:12 But anyway, I found it to be pretty helpful in offering some nice recommendations.

32:16 People can check it out.

32:17 You can just run an entire directory.

32:19 You don't have to run it on one file.

32:20 Just say, you know, refurb ./ and go.

32:23 Cool.

32:24 Yeah.

32:24 We should run like several of these and then just do them in a loop and see if it ever settles down.

32:30 Exactly.

32:31 If you just keep taking its advice, does it upset the other one?

32:34 Yeah.

32:35 Like if you pyupgrade and then refurb and then black, and some others.

32:40 Yeah.

32:40 autopep8.

32:41 Yeah.

32:43 The goal of this one is to modernize Python code bases.

32:46 If we had Python 2 code, I suspect it would go bonkers, but we don't.

32:50 So it's okay.

32:51 But one of the cool things, you mentioned you weren't going to do the expandtabs, but

32:54 I didn't know about expandtabs.

32:57 So the tools like this also just like teach you stuff that you may not have known about

33:03 the language.

33:04 Yeah.

33:04 Like that read_text versus a context manager and all sorts of stuff.

33:06 Yeah.

33:06 Yeah.

33:07 Yeah.

33:07 So the expandtabs, now where was it?

33:09 It was over here.

33:10 The expandtabs(1), that means replace the tab with one space.

33:14 So if you wanted like four spaces for every tab, you would just say expandtabs(4).

33:17 Which is probably correct, right?

33:19 Yeah, of course.

33:20 Of course it is.

33:22 Of course it is.
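One detail is worth checking before relying on the four-space version. `str.expandtabs` pads each tab out to the next tab stop, so with a tab size of 1 it matches a plain replace, but with a tab size of 4 the number of spaces depends on the column where the tab falls. A quick sketch with a made-up sample string:

```python
line = "a\tbc\td"

# Tab size 1: every tab becomes exactly one space.
one_stop = line.expandtabs(1)
one_replace = line.replace("\t", " ")

# Tab size 4: tabs advance to columns 4, 8, ... so the widths vary.
four_stop = line.expandtabs(4)
four_replace = line.replace("\t", "    ")

print(repr(one_stop), repr(one_replace))
print(repr(four_stop), repr(four_replace))
```

So `expandtabs(4)` and "four spaces for every tab" only agree when each tab happens to start on a tab stop.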

33:23 All right.

33:24 Well, that's it for all of our items.

33:25 You got anything else you want to throw out there?

33:27 I don't.

33:29 How about you?

33:29 I do actually.

33:32 All right.

33:32 So let's, let's see.

33:33 I got a few things.

33:34 I'll go through them quick.

33:34 So another sequence of things that I think is pretty interesting.

33:38 This is not really the main thing, but it's kind of starting the motivation.

33:43 So we have over on all of our sites on Python bytes, on Talk Python and Talk Python training,

33:49 we have the ability to do search.

33:51 So for example, over on Talk Python training, I can say ngrok API Postman.

33:56 And the results you got previously were just like this ugly list that you'd have to

33:59 kind of make sense of.

34:00 It was, it was really not something I was too proud of, but I'm like, I'm not inspired to

34:05 figure out a different UI, but I got inspired last week and said, okay, I'm going to come up with

34:09 this kind of like hierarchical view showing like, okay, if I search for say,

34:13 ngrok API Postman, I want to see all the stuff that matches that out of the 240 hours

34:19 of spoken word, basically, right.

34:21 On the site and all the descriptions and titles and so on.

34:24 And so like, for example, this Twilio course I talked about used all those things and actually

34:30 has one lecture where exactly it talks about all three of those things.

34:35 And then others where they're in there, but like one, one video talks about ngrok, then

34:40 another one talks about an API or, you know, some, it's not really focused.

34:44 Right.

34:44 And here just in this course, like it doesn't even exist in a single chapter, but across

34:48 100 Days of Web in Python, like all those words are said.

34:51 Right.

34:52 So I came up with this search engine and well, the search engine existed, but it wasn't running

34:58 in a, it wasn't basically hosted in a way that I was real happy with.

35:01 So what I did is I took some of our advice from 2017.

35:05 I said, you know what?

35:06 I'm going to create, I'm going to create a systemd service that just runs as part of

35:13 Linux.

35:14 When I turn it on, that is going to do all the indexing and a lot of the pre-processing.

35:18 So that page can be super fast.

35:19 So for example, like the response time for this page is effectively instant.

35:23 It's like 30, 40 milliseconds.

35:25 Right.

35:25 Even though it's, it's doing tons of searching.

35:27 So I'm going to run this Python script, a series of scripts in a little app, as a systemd service,

35:34 which is excellent.

35:36 And so we talked about how you can do that.

35:37 And, you know, if you look, here's an example, basically you just create a systemd .service

35:42 file.

35:43 And you say like python space your file with the arguments, and you can set it up and it'll

35:49 just auto start and be managed by, you know, systemctl, which is awesome.

35:53 So that's all neat.

35:56 The other thing I want to give, this is the main thing I really want to give some advice

36:00 about though, is those, these, these daemons, what they look like is while true, chill out

36:06 for a while, do your thing, wait for an event, do your thing, look for a file, do your thing,

36:12 then look for some more, right?

36:14 You're just going over and over in this loop, like running, but often it's not busy, right?

36:18 It's waiting for something in the search thing.

36:20 It's like waiting for an hour or something.

36:22 Then it'll rebuild the search, but it could just as well be waiting for a file to appear

36:27 in some kind of upload folder and then like start processing that.

36:31 I don't know.

36:32 Right.

36:32 So they almost always have this pattern of like while true, either wait for an event

36:38 and then do it or chill for a while and then do the thing.

36:42 So my recommendation, my thought here is if you combine this with multiprocessing, you

36:50 can often get much, much lower overhead on your server, right?

36:55 So check this out.

36:57 So here's a, an example of the search thing on Talk Python search out of Glances.

37:01 Notice it's using 78 megabytes of RAM.

37:06 This is in the beginning of show notes, of course.

37:07 This is it just running there in the background.

37:10 Before I started using multiprocessing, it was using like 300 megs of RAM constantly on

37:16 the server because it would wait for an hour and then it would load up the entire 240 hours

37:22 of text and stuff and process it and do database calls and then generate like a search result,

37:28 a search set of keyword maps and then it would, you know, would refresh those again.

37:33 But normally it's just resting.

37:36 It puts that stuff back in the database.

37:37 But if you let it actually do the work in the main process, it will basically not unload those modules

37:44 and unload all that other stuff that happened in there.

37:47 So if you take the function that says just do the one thing in the loop and you just call

37:52 that with multiprocessing, it goes from 350 megs to 70 megs.

37:56 No other work.

37:57 Because it, that little thing fires up, it does all the work and then it shuts back down and

38:01 it doesn't get like all that extra stuff loaded into your process.

38:04 Okay.

38:04 A little cool, right?

38:05 It is cool.
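The pattern described here, a long-running loop that hands each heavy pass to a short-lived child process, might look roughly like this. It is a sketch under assumptions, not the code running on the site: `rebuild_search_index`, `run_in_child`, and `run_daemon` are hypothetical names, and the `max_cycles` parameter exists only so the demo stops on its own.

```python
import multiprocessing
import time


def rebuild_search_index():
    """Hypothetical stand-in for the heavy job (load text, build keyword maps)."""
    keyword_rows = [str(i) * 10 for i in range(200_000)]
    return len(keyword_rows)


def run_in_child(job):
    """Run the job in its own process; its memory is released when it exits."""
    worker = multiprocessing.Process(target=job)
    worker.start()
    worker.join()
    return worker.exitcode


def run_daemon(job, interval_seconds, max_cycles=None):
    """Loop: do the work in a child, then rest. max_cycles=None runs forever."""
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        run_in_child(job)
        cycles += 1
        time.sleep(interval_seconds)
    return cycles


if __name__ == "__main__":
    # Short demo run; a real service would use a long interval and no cycle limit.
    run_daemon(rebuild_search_index, interval_seconds=0.1, max_cycles=2)
```

The parent process only ever imports `multiprocessing` and `time`, so it stays small between passes, while everything the job loads lives and dies with the child.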

38:07 You could, I mean, for special cases like ours, I mean, for yours, you could just kick it

38:12 off yourself, right?

38:13 Or have it be part of your publish thing when you publish new show notes.

38:17 Yeah, exactly.

38:20 I mean, I could, I could base it on some of that.

38:23 Like, yeah, it could, it gets complicated because it's hard to tell when that happens.

38:29 Yeah.

38:30 There's, there's like a, as you can see, like in this example, there's like eight worker

38:34 processes, right?

38:35 So which one should be in charge of knowing that?

38:39 I don't know.

38:39 It's, so it's, it's easy to just have that thing running and just like, you know, the

38:43 search will be up to date and it's going, but please don't overwhelm the server by loading

38:48 the entire thing and hanging on to it forever.

38:50 Yeah, exactly.

38:51 Yeah.

38:52 So anyway, I thought that was a fun story to share.

38:54 Let's do this one next.

38:56 We talked about JetBrains Fleet.

38:58 Think PyCharm.

39:01 PyCharm's like little cousin that is very much like VS Code, I guess, but has like PyCharm

39:08 heritage.

39:09 So this thing is now out of private beta and is now in public beta.

39:15 So it has like Google Docs type collaboration.

39:17 It has, but it has like PyCharm source code refactoring and deep understanding that seems

39:25 pretty excellent.

39:26 So people can check that out.

39:28 It looks, looks pretty neat.

39:29 I've done a little bit of playing with it, but not too much yet.

39:32 But if you're a VS Code type of person, like this might speak to you more than PyCharm.

39:37 So that's out.

39:38 Speaking of PyCharm, I'm going to be on a webcast with Paul Everitt on Thursday.

39:44 We're talking about Django and PyCharm tips reloaded.

39:48 So just kind of a bunch of cool things you can do to, if you're working in a Django project

39:53 in PyCharm, you want to be awesome and quick and efficient.

39:56 Okay.

39:57 Last one.

39:57 How about this?

39:58 This, this Mark, go ahead.

40:01 This blows me away and it's interesting.

40:03 So this is interesting.

40:05 So we all have got to be familiar with the GDPR.

40:09 I did weeks worth of work reworking the various websites to be officially compliant with GDPR.

40:16 You know, like we weren't doing any creepy stuff to like, oh, now we've got to start, stop

40:21 our tracking or anything like that.

40:22 But like, there's certain things about you need to record the opt-in explicitly and be able

40:27 to associate a record like that kind of stuff.

40:28 Right.

40:29 So some of us did a bunch of work to make our code GDPR compliant.

40:34 Others, not so much.

40:37 But the news here is that Denmark has ruled that Google Analytics is illegal.

40:43 I mean, like, okay.

40:45 And illegal in the sense that the Google Analytics violates the GDPR and basically can't be used.

40:55 I believe France and two other countries, whose names I'm forgetting,

41:00 have also decided that as well.

41:03 And yeah, more or less, they, a significant number of European countries are deciding that

41:11 Google Analytics just can't be used if you are going to be following the GDPR, which I think

41:17 most companies in the West, at least, need to follow.

41:22 Yeah.

41:23 So I'm glad.

41:25 I mean, my early days of web stuff, I was using Google Analytics.

41:30 Of course, a lot of people do.

41:32 Yeah.

41:32 And it's free.

41:34 They give you all this information free.

41:36 Why not?

41:37 Why are they giving?

41:38 Oh, it's not.

41:39 Wait a minute.

41:40 Wait a second.

41:41 They're using you and your website to help collect data on everybody that uses your website.

41:46 Yeah.

41:46 It seems like such a good trade-off.

41:47 But yeah, I mean, you're basically giving like every single action on your website, giving

41:55 that information about your users, every one of their actions over to Google, which seems

42:00 like a little, I could see why that would be looked down upon from a GDPR perspective, no

42:05 doubt.

42:05 Just by the way, also on that, if you look over on Python Bytes, the pay, let's see, does

42:12 it say anything?

42:13 How many, how many blockers have we got or how many creepy things do we have to worry about

42:19 over here?

42:19 Zero.

42:20 Like we don't use Google Analytics.

42:22 We don't use, yeah, that's just global stats.

42:25 But yeah, we don't use Google Analytics or any other form of client-side analytics whatsoever.

42:31 So I'm pretty happy about that actually.

42:33 But check out the video by Steve Gibson.

42:36 It's an excerpt of a different podcast, but I think it's worth covering.

42:41 It's pretty interesting.

42:42 Yeah.

42:42 It's something to watch at least.

42:44 Yeah.

42:45 Yeah.

42:46 Ikivu points out in the audience, how can you enforce something like that?

42:49 That is Google Analytics being not allowed.

42:51 It's embedded in so many sites everywhere.

42:53 Sometimes you don't even manage it.

42:55 You just enter an analytics ID.

42:58 Yeah.

42:59 It's honestly a serious problem.

43:02 Like, for example, on our Python Bytes website, if you go to one of the newer episodes, they

43:08 all have a nice little picture.

43:11 That picture is from the YouTube thumbnail.

43:14 Like, it literally pulls it straight from YouTube.

43:17 The first thing I tried to do, Brian, was I said, well, here's the image that YouTube uses for the poster on the video.

43:24 So I'll just put a little image where the source is youtube.com slash video poster, whatever the heck the URL is.

43:32 Even for that, Google started putting tracking cookies on all of our visitors.

43:36 Like, come on, Google.

43:37 It's just an image.

43:38 No.

43:39 Tracking cookies.

43:39 Yeah.

43:40 Yeah.

43:41 Or cookies, right?

43:42 So what I had to end up doing is the website on the server side looks at the URL, downloads the image, puts it in MongoDB.

43:51 And when a visitor comes, we serve it directly out of MongoDB with no cookies.

43:55 Like, it is not trivial to avoid getting that kind of stuff in there.

44:00 Because even when you try not to, it shows up a lot of times, like Icavu mentioned.

44:05 Yeah.

44:05 The way it gets enforced, somebody says, here's a big website.

44:09 They're violating the GDPR.

44:12 We're going to recommend.

44:13 I'm going to report them, basically, is what happens, I think.

44:16 Yeah.

44:17 But I think for small fish, like me or something, it's just, if a country says, don't do that, maybe I won't.

44:26 Because they might have good reasons.

44:28 Yeah.

44:29 And if you're a business, you've got to worry a lot more.

44:31 I don't think any individual will ever get in trouble for that.

44:35 But it's also, I mean, think about how much you're exposing everybody's information.

44:41 And you can't know before you go to a website whether that's going to happen.

44:44 It's already happened once you get there.

44:46 So I guess, see our previous conversation about ad blockers, NextDNS, do we hate creators?

44:52 No.

44:53 Do we hate this kind of stuff?

44:54 Yes.

44:54 Yeah.

44:55 Anyway.

44:55 Also, information is interesting.

44:57 So, but just pay attention to what you have, because you don't need Google Analytics to just

45:02 find out which pages are viewed most.

45:05 Absolutely.

45:05 Things like that.

45:06 You can use other ways.

45:07 Yep.

45:08 All right.

45:09 Well, that's a bunch of extras, but there they are.

45:11 That's so serious, though.

45:13 Do we have something funny?

45:14 We do.

45:15 Okay.

45:16 Something, I got some, this is very much, I picked this one for you, Brian.

45:20 Okay.

45:20 So this has to do with testing.

45:22 Tell me what's in this picture here.

45:23 Describe for our listeners.

45:25 I love this picture.

45:26 This is great.

45:29 So it says, all unit tests passing.

45:32 And it is a completely shattered sink.

45:35 The only thing left of the sink is the faucet is still attached to some porcelain.

45:40 You can turn it on.

45:41 You can turn it on.

45:42 And it goes down the drain.

45:43 Actually, so you've already, you've even got integration tests passing.

45:46 Yeah, you do.

45:47 This is pretty, yeah.

45:48 This is pretty, not 100% coverage, but.

45:50 Yeah.

45:51 Right.

45:53 Not 100% coverage of the sink.

45:54 Yeah.

45:56 There's this sink and it's completely smashed.

45:58 There's just like, just a little tiny chunk fragment of it left.

46:01 But it's got the drain and the faucet is still pouring into it.

46:04 Unit tests pass.

46:05 I love it.

46:05 Yeah.

46:06 You might even cut yourself if you tried to wash your hands in this.

46:09 But, but funny.

46:11 You might.

46:12 You might.

46:13 Well.

46:14 That's good.

46:15 Fun as always.

46:16 Thanks for being here.

46:17 Thank you.

46:18 Yeah.

46:18 See you later.

46:19 Thank you everyone for listening.

46:20 Bye.